title: Predictive Coding: a Theoretical and Experimental Review url: http://arxiv.org/abs/2107.12979v4

PREDICTIVE CODING: A THEORETICAL AND EXPERIMENTAL REVIEW

Beren Millidge

School of Informatics University of Edinburgh beren@millidge.name

Anil K Seth

Sackler Center for Consciousness Science School of Engineering and Informatics University of Sussex CIFAR Program on Brain, Mind, and Consciousness A.K.Seth@sussex.ac.uk

Christopher L Buckley

Evolutionary and Adaptive Systems Research Group School of Engineering and Informatics University of Sussex C.L.Buckley@sussex.ac.uk

14th July, 2022

ABSTRACT

Predictive coding offers a potentially unifying account of cortical function – postulating that the core function of the brain is to minimize prediction errors with respect to a generative model of the world. The theory is closely related to the Bayesian brain framework and, over the last two decades, has gained substantial influence in the fields of theoretical and cognitive neuroscience. A large body of research has arisen based on both empirically testing improved and extended theoretical and mathematical models of predictive coding, as well as in evaluating their potential biological plausibility for implementation in the brain and the concrete neurophysiological and psychological predictions made by the theory. Despite this enduring popularity, however, no comprehensive review of predictive coding theory, and especially of recent developments in this field, exists. Here, we provide a comprehensive review both of the core mathematical structure and logic of predictive coding, thus complementing recent tutorials in the literature (Bogacz, 2017; Buckley, Kim, McGregor, & Seth, 2017). We also review a wide range of classic and recent work within the framework, ranging from the neurobiologically realistic microcircuits that could implement predictive coding, to the close relationship between predictive coding and the widely-used backpropagation of error algorithm, as well as surveying the close relationships between predictive coding and modern machine learning techniques.

Contents

1 Introduction 3

| 2 | Predictive Coding | 6 | ||||||

|---|---|---|---|---|---|---|---|---|

| 2.1 | Predictive Coding as Variational Inference | 6 | ||||||

| 2.2 Multi-layer Predictive Coding |

||||||||

| 2.3 Dynamical Predictive Coding and Generalized Coordinates |

||||||||

| Predictive Coding and Precision |

13 | |||||||

| 2.5 Predictive Coding in the Brain? |

||||||||

| 3 | Paradigms of Predictive Coding 21 |

|||||||

| 3.1 Unsupervised predictive coding |

||||||||

| 3.1.1 Autoencoding and predictive coding |

22 | |||||||

| 3.1.2 Temporal Predictive Coding |

23 | |||||||

| 3.1.3 Spatial Predictive Coding |

23 | |||||||

| 3.2 | Supervised predictive coding: Forwards and Backwards | 24 | ||||||

| 3.3 | Relaxed Predictive Coding |

25 | ||||||

| 3.4 | Deep Predictive Coding | 27 | ||||||

| 4 | Relationship to Other Algorithms | 28 | ||||||

| 4.1 Predictive Coding and Backpropagation of error |

||||||||

| 4.2 Linear Predictive Coding and Kalman Filtering |

||||||||

| 4.3 Predictive Coding, Normalization, and Normalizing Flows |

||||||||

| 4.4 Predictive Coding as Biased Competition |

||||||||

| 4.5 Predictive Coding and Active Inference |

||||||||

| 4.5.1 Costs of action |

36 | |||||||

| 4.5.2 Active inference and PID control |

36 | |||||||

| 5 | Discussion and Future Directions 39 |

|||||||

| 6 | Appendix A: Predictive Coding Under the Laplace Approximation 52 |

|||||||

| 7 | Appendix B: Precision as Natural Gradients 53 |

|||||||

| 8 | Appendix C: Challenges for a Neural Implementation of Backpropagation by predictive Coding 55 |

|||||||

| 9 | Appendix D: Kalman Filter Derivations 56 |

1 Introduction

Predictive coding theory is an influential theory in computational and cognitive neuroscience, which proposes a potential unifying theory of cortical function (Clark, 2013; K. Friston, 2003, 2005, 2010; Rao & Ballard, 1999; A. K. Seth, 2014) – namely that the core function of the brain is simply to minimize prediction error, where the prediction errors signal mismatches between predicted input and the input actually received 1 . This minimization can be achieved in multiple ways: through immediate inference about the hidden states of the world, which can explain perception (Beal et al., 2003), through updating a global world-model to make better predictions, which could explain learning (K. Friston, 2003; Neal & Hinton, 1998), and finally through action to sample sensory data from the world that conforms to the predictions (K. J. Friston, Daunizeau, & Kiebel, 2009), which potentially provides an account adaptive behaviour and control (K. Friston et al., 2015). Prediction error minimization can also be influenced by modulating the precision of sensory signals, which corresponds to modulating the 'signal to noise ratio' in how prediction errors can be used to update prediction, and which may shed light on the neural implementation of attention mechanisms (Feldman & Friston, 2010; Kanai, Komura, Shipp, & Friston, 2015). Predictive coding boasts an extremely developed and principled mathematical framework in terms of a variational inference algorithm (Blei, Kucukelbir, & McAuliffe, 2017; Ghahramani, Beal, et al., 2000; Jordan, Ghahramani, Jaakkola, & Saul, 1998), alongside many empirically tested computational models with close links to machine learning (Beal et al., 2003; Dayan, Hinton, Neal, & Zemel, 1995; Hinton & Zemel, 1994), which address how predictive coding can be used to solve challenging perceptual inference and learning tasks similar to the brain. Moreover, predictive coding also has been translated into neurobiologically plausible microcircuit process theories (Bastos et al., 2012; Shipp, 2016; Shipp, Adams, & Friston, 2013) which are increasingly supported by neurobiological evidence. Predictive coding as a theory also offers a single mechanism that accounts for diverse perceptual and neurobiological phenomena such as end-stopping (Rao & Ballard, 1999), bistable perception (Hohwy, Roepstorff, & Friston, 2008; Weilnhammer, Stuke, Hesselmann, Sterzer, & Schmack, 2017), repetition suppression (Auksztulewicz & Friston, 2016), illusory motions (Lotter, Kreiman, & Cox, 2016; Watanabe, Kitaoka, Sakamoto, Yasugi, & Tanaka, 2018), and attentional modulation of neural activity (Feldman & Friston, 2010; Kanai et al., 2015). As such, and perhaps uniquely among neuroscientific theories, predictive coding encompasses all three layers of Marr's hierarchy by providing a well-characterised and empirically supported view of 'what the brain is doing' at all of the computational, algorithmic, and implementational levels (Marr, 1982).

The core intuition behind predictive coding is that the brain is composed of a hierarchy of layers, which each make predictions about the activity of the layer immediately below them in the hierarchy (Clark, 2015) 2 . These downward descending predictions at each level are compared with the activity and inputs of each layer to form prediction errors – which is the information in each layer which could not be successfully predicted. These prediction errors are then fed upwards to serve as inputs to higher levels, which can then be utilized to reduce their own prediction error. The idea is that, over time, the hierarchy of layers instantiates a range of predictions at multiple scales, from the fine details in local variations of sensory data at low levels, to global invariant properties of the causes of sensory data (e.g., objects, scenes) at higher or deeper levels3 . Predictive coding theory claims that goal of the brain as a whole, in some sense, is to minimize these prediction errors, and in the process of doing so performs both perceptual inference and learning. Both of these processes can be operationalized via the minimization of prediction error, first through the optimization of neuronal firing rates on a fast timescale, and then the optimization of synaptic weights on a slow timescale (K. Friston,

1 For a contrary view and philosophical critique see Cao (2020).

For much of this work we consider a simple hierarchy with only a single layer above and below. Of course, connectivity in the brain is more heterarchical with many 'skip connections'. Predictive coding can straightforwardly handle these more complex architectures in theory, although few works have investigated the performance characteristics of such heterarchical architectures in practice.

3This pattern is widely seen in the brain (Grill-Spector & Malach, 2004; Hubel & Wiesel, 1962) and also in deep (convolutional) neural networks (Olah, Mordvintsev, & Schubert, 2017), but it is unclear whether this pattern also holds for deep predictive coding networks, primarily due to the relatively few instances of deep convolutional predictive coding networks in the literature so far.

2008). Predictive coding proposes that using a simple unsupervised loss function, such as simply attempting to predict incoming sensory data, is sufficient to develop complex, general, and hierarchically rich representations of the world in the brain, an argument which has found recent support in the impressive successes of modern machine learning models trained on unsupervised predictive or autoregressive objectives (Brown et al., 2020; Kaplan et al., 2020; Radford et al., 2019). Moreover, in contrast to modern machine learning algorithms which are trained to end with a global loss at the output, in predictive coding prediction errors are computed at every layer which means that each layer only has to focus on minimizing local errors rather than a global loss. This property potentially enables predictive coding to learn in a biologically plausible way using only local and Hebbian learning rules (K. Friston, 2003; Millidge, Tschantz, & Buckley, 2020; Whittington & Bogacz, 2017).

While predictive coding as a neuroscientific theory originated in the 1980s and 1990s (Mumford, 1992; Rao & Ballard, 1999; Srinivasan, Laughlin, & Dubs, 1982), and was first developed into its modern mathematical form of a comprehensive theory of cortical responses in the mid 2000s (K. Friston, 2003, 2005, 2008), it has deep intellectual antecedents. These precursors include Helmholtz's notion of perception as unconscious inference and Kant's notion that a priori structure is needed to make sense of sensory data (Hohwy et al., 2008; A. Seth, 2020), as well as early ideas of compression and feedback control in cybernetics and information theory (Conant & Ross Ashby, 1970; Shannon, 1948; Wiener, 2019). One of the core notions in predictive coding is the idea that the brain encodes a model of the world (or more precisely, of the causes of sensory signals), which is used to make constant predictions about the world, which are then compared against sensory data. On this view, perception is not the result of an unbiased feedforward, or bottom-up, processing of sensory data, but is instead a process of using sensory data to update predictions generated internally by the brain. Perception, thus, becomes a 'controlled hallucination' (Clark, 2013; A. Seth, 2020) in which top-down perceptual predictions are reined in by sensory prediction error signals. This view of 'perception as unconscious inference' originated with the German physicist and physiologist Hermann von Helmholtz (Helmholtz, 1866), who studied the way the brain "cancels out" visual distortions and flow resulting from its own (predictable movement), such as during voluntary eye movements, but does not do so for external perturbations, such as when external pressure is applied to the eyeball, in which case we consciously experience visual movement arising from this (unpredicted) ocular motion. Helmholtz thus argued that the brain must maintain both a record of its own actions, in the form of a 'corollary discharge' as well as a model of the world sufficient to predict the visual effects of these actions (i.e. a forward model) in order to so perfectly cancel self-caused visual motion (Huang & Rao, 2011).

Another deep intellectual influence in predictive coding comes from information theory (Shannon, 1948), and especially the minimum redundancy principle of Barlow (Barlow, 1961, 1989; Barlow et al., 1961). Information theory tells us that information is inseparable from a lack of predictability. If something is predictable before observing it, it cannot give us much information. Conversely, to maximize the rate of information transfer, the message must be minimally predictable and hence minimally redundant. Predictive coding as a means to remove redundancy in a signal was first applied in signal processing, where it was used to reduce transmission bandwidth for video transmission. For a review see Spratling (2017). Initial schemes used a simple approach of subtracting the new (to-be-transmitted) frame from the old frame (in effect using a trivial prediction that the new frame is always the same as the old frame), which works well in reducing bandwidth in many settings where there are only a few objects moving in the video against a static background. More advanced methods often predict each new frame using a number of past frames weighted by some coefficient, an approach known as linear predictive coding. Then, as long as the coefficients are transmitted at the beginning of the message, the receiving system can reconstruct signals compressed by this system. Barlow applied this principle to signalling in neural circuits, arguing that the brain faces considerably evolutionary pressures for information-theoretic efficiency, since neurons are energetically costly, and thus redundant firing would be potentially wasteful and damaging to an organism's evolutionary fitness. Because of this, we should expect the brain to utilize a highly optimized code which is minimally redundant. Predictive coding, as we shall see, precisely minimizes this redundancy, by only transmitting the errors or residuals of sensory input which cannot be explained by top-down predictions, thus removing the most redundancy possible at each layer (Huang & Rao, 2011). Finally, predictive coding

also inherits intellectually from ideas in cybernetics, control and filtering theory (Conant & Ross Ashby, 1970; Kalman, 1960; A. K. Seth, 2014; Wiener, 2019). Cybernetics as a science is focused on understanding the dynamics of interacting feedback loops for perception and control, based especially around the concept of error minimization. Control and filtering theory have, in a related but distinct way, been based around methods to minimize residual errors in both perception or action according to some objective for decades. As we shall see, standard methods such as Kalman Filtering (Kalman, 1960) or PID control Johnson and Moradi (2005) can be shown as special cases of predictive coding under certain restrictive assumptions.

The first concrete discussion of predictive coding in the neural system arose as a model of neural properties of the retina (Srinivasan et al., 1982), specifically as a model of centre-surround cells which fire when presented with either a light-spot against a dark background (on-centre, off-surround), or alternatively a dark spot against a light background (off-centre, on surround) cells. It was argued that this coding scheme helps to minimize redundancy in the visual scene specifically by removing the spatial redundancy in natural visual scenes – that the intensity of one 'pixel' helps predict quite well the intensities of neighbouring pixels. If, however, the intensity of a pixel was predicted by the intensity of the surround, and this prediction is subtracted from the actual intensity, then the centre-surround firing pattern emerges (Huang & Rao, 2011). Mathematically, this idea of retinal cells removing the spatial redundancy of the visual input is derived from the fact that the optimal spatial linear filter which minimizes the redundancy in the representation of the visual information closely resembles the centre-surround receptive fields which are well established in retinal ganglion cells (Huang & Rao, 2011). This predictive coding approach was also applied to coding in the lateral geniculate nucleus (LGN), the thalamic structure that retinal signals pass through en-route to cortex, which was hypothesised to help remove temporal correlations in the input by subtracting out the retinal signal at previous timesteps using recurrent lateral inhibitory connectivity (Huang & Rao, 2011; Marino, 2020)

Mumford (1992) was perhaps the first to extend this theory of the retina and the LGN to a fully-fledged general theory of cortical function. His theory was motivated by simple observations about the neurophysiology of cortico-cortical connections. Specifically, the existence of separate feedforward and feedback paths, where the feedforward paths originated in the superficial layers of the cortex, and the feedback pathways originated primarily in the deep layers. He also noted the reciprocal connectivity observed almost uniformly between cortical regions – if a region projects feedforward to another region, it almost always also receives feedback inputs from that region. He proposed that the deep feedback projections convey abstract 'templates' which each cortical region then matches to its incoming sensory data. Then, inspired by the minimum redundancy principle of Barlow (Barlow et al., 1961), he proposed that instead of faithfully transmitting the sensory input upwards, each layer transmits only the 'residual' resulting after attempting to find the best fit match to the 'template'.

While Mumford's theory contained most aspects of classical predictive coding theory in the cortex, it was not accompanied by any simulations or empirical work and so its potential as a framework for understanding the cortex was not fully appreciated. The seminal work of Rao and Ballard in 1999 (Rao & Ballard, 1999) had its impact precisely by doing this. They created a small predictive coding network according to the principles identified by Mumford, and empirically investigated its behaviour, demonstrating that the complex and dynamic interplay of predictions and prediction errors could explain several otherwise perplexing neurophysiological phenomena, specifically 'extra-classical' receptive field effects such as endstopping neurons. Extra-classical refers to the classical view in visual neuroscience of the visual system being composed of a hierarchy of feature-detectors, which originated in the pioneering work of (Hubel & Wiesel, 1962). According to this classical view, the visual cortex forms a hierarchy which ultimately bottoms out at the retina. At each layer, there are neurons sensitive to different features in the visual input, with neurons at the bottom of the hierarchy responding to simple features such as patches of light and dark, while neurons at the top respond to complex features such as faces. The feature detectors at higher levels of the hierarchy are computed by combining several lower-level simpler feature detectors. For instance, as a crude illustration, a face detector might be created by combining several spot detectors (eyes) with some bar detectors (mouths and noses). However, it was quickly noticed that some receptive fields displayed properties which could not be explained simply as compositions

of lower-level feature detectors. Most significantly, many receptive field properties, especially in the cortex, showed context sensitivities, with their activity depending on the context outside of their receptive field. For instance, the 'end-stopping' neurons fired if a bar was presented which ended just outside the receptive field of the cell, but not if it continued for a long distance beyond it. Within the classical feedforward view, such a feature detector should be impossible, since it would have no access to information outside of its receptive field. Rao and Ballard showed that a predictive coding network, constructed with both bottom up prediction error neurons and neurons providing top-down predictions, enables the replication of several extra-classical receptive field properties, such as endstopping, within the network. This capability is made possible by the top-down predictions conveyed by the hierarchical predictive coding network. In effect, the predictive coding network conveys a downward prediction of the continuation of the bar, in line with ideas in gestalt perception. When this prediction is violated a prediction error is generated and the neuron fires, thus reproducing the extra-classical prediction error effect. Moreover, in the Rao and Ballard model prediction error, value estimation, and weight updates follow from gradient descents on a single energy function. This model was later extended by Karl Friston in a series of papers (K. Friston, 2003, 2005, 2008), which placed the model on a firm theoretical grounding as a variational inference algorithm, as well as integrating predictive coding with the broader free energy principle (K. Friston, 2010; K. Friston, Kilner, & Harrison, 2006) by identifying the energy function of Rao and Ballard with the variational free energy of variational inference. This identification enables us to understand the Rao and Ballard learning rules as performing well-specified approximate Bayesian inference.

Following the impetus of these landmark developments, as well as much subsequent work, predictive coding has become increasingly influential over the last two decades in cognitive and theoretical neuroscience, especially for its ability to offer a supposedly unifying, albeit abstract, perspective on the daunting multi-level complexity of the cortex. In this review, we aim to provide a coherent overview and introduction to the mathematical framework of predictive coding, as defined using the probabilistic modelling framework of K. Friston (2005), as well as a comprehensive review of the many directions predictive coding theory has evolved in since then. For readers wishing to gain a deeper appreciation and understanding of the underlying mathematics, we also advise them to read these two didactic tutorials on the framework (Bogacz, 2017; Buckley et al., 2017). We also advise reading Spratling (2017) for a quick review of major predictive coding algorithms and Marino (2020) for another overview of predictive coding and close investigation of its relationship to variational autoencoders (Kingma & Welling, 2013) and normalizing flows (Rezende & Mohamed, 2015). In this review, we survey the development and performance of computational models designed to probe the performance of predictive coding on a wide variety of tasks, including those that try to combine predictive coding with ideas from machine learning to allow it to scale up to complex tasks. We also review the work that has been done on translating the relatively abstract mathematical formalism of predictive coding into hypothesized biologically plausible neural microcircuits that could, in principle, be implemented by the brain, as well as the empirical neuroscientific work explicitly seeking experimental confirmation or refutation of the many predictions made by the theory. We also look deeply at more theoretical matters, such as the extensions of predictive coding using dynamical models which utilizes generative models over multiple dynamical orders of motion, the relationship of learning in predictive coding to the backpropagation of error algorithm widely used in machine learning, and the development of the theory of precision which enables predictive coding to encode not just direct predictions of sensory stimuli but also predictions as to their intrinsic uncertainty. Finally, we review extensions of the predictive coding framework that generalize beyond perception to also include action, drawing on the close relationship between predictive coding and classical methods in filtering and control theory.

2 Predictive Coding

2.1 Predictive Coding as Variational Inference

A crucial advance in predictive coding theory occurred when it was recognized that the predictive coding algorithm could be cast as an approximate Bayesian inference process based upon Gaussian generative models (K. Friston, 2003, 2005, 2008). This perspective illuminates the close connection between predictive coding as motivated through the information-theoretic minimum-redundancy approach, and the Helmholtzian idea of perception as unconscious inference. Indeed, the two are fundamentally inseparable owing to the close mathematical connections between information theory and probability theory. Intuitively, information can only be defined according to some 'expected' distribution, just as predictability or redundancy can only be defined against some kind of prediction. Prediction, moreover, presupposes some kind of model to do the predicting. The explicit characterisation of this model in probabilistic terms as a generative model completes the link to probability theory and, ultimately Bayesian inference. Friston's approach, crucially, reformulates the mostly heuristic Rao and Ballard model in the language of variational Bayesian inference, thus allowing for a detailed theoretical understanding of the algorithm, as well as tying it the broader project of the Bayesian Brain (Deneve, 2005; Knill & Pouget, 2004). Crucially, Friston showed that the energy function in Rao and Ballard can be understood as a variational free-energy of the kind that is minimized through variational inference. This connection demonstrates that predictive coding can be directly interpreted as performing approximate Bayesian inference to infer the causes of sensory signals, thus providing a mathematically precise characterisation of the Helmholtzian idea of perception as inference.

Variational inference describes a broad family of methods which have been under extensive development in machine learning and statistics since the 1990s (Beal et al., 2003; Blei et al., 2017; Ghahramani et al., 2000; Jordan et al., 1998). They originally evolved out of methods for approximately solving intractable optimization problems in statistical physics (Feynman, 1998). In general, variational inference approximates an intractable inference problem with a tractable optimization problem. Intuitively, we postulate, and optimize the statistics of an approximate 'variational' density, which we then try to match to the desired inference distribution 4 .

To formalize this, let us assume we have some observations (or data) o, and we wish to infer the latent state x. We also assume we have a generative model of the data generating process p(o, x) = p(o|x)p(x). By Bayes rule, we can compute the true posterior directly as p(x|o) = p(o,x) p(o) , however, the normalizing factor p(o) = R dxp(o, x) is often intractable because it requires an integration over all latent variable states. The marginal p(o) is often referred to as the evidence, since it effectively scores the likelihood of the data under a given model, averaged over all possible values of the model parameters. Computing the marginal p(o) (model evidence) is intrinsically valuable since it is a core quantity in Bayesian model comparison methods, where it is used to compare the ability of two different generative models to fit the data.

Since directly computing the true posterior p(x|o) through Bayes rule is generally intractable, variational inference aims to approximate this posterior using an auxiliary posterior q(x|o; φ), with parameters φ. This variational q distribution is arbitrary and under the control of the modeller. For instance, suppose we define q(x|o; φ) to be a Gaussian distribution. Then the parameters φ = {µ, Σ} become the mean µ and the variance Σ of the Gaussian. The goal then is to fit this approximate posterior to the true posterior by minimizing the divergence between the true and approximate posterior with respect to the parameters. Mathematically, this problem can be written as,

$$q^*(x|o;\phi) = \underset{\phi}{\operatorname{argmin}} \ \mathbb{D}[q(x|o;\phi)||p(x|o)] \tag{1}$$

Where D[p|q] is a function that measures the divergence between two distributions p and q. Throughout, we take D[] to be the KL divergence D[Q||P] = DKL[Q||P] = R dQQ ln Q P , although other divergences are possible (Banerjee,

4This contrasts with the other principle method for approximating intractable inference procedures – Markov Chain Monte-Carlo (MCMC) (Brooks, Gelman, Jones, & Meng, 2011; Hastings, 1970; Metropolis, Rosenbluth, Rosenbluth, Teller, & Teller, 1953). This class of methods sample stochastically from a Markov Chain with a stationary distribution equal to the true posterior. MCMC methods asymptotically converge to the true posterior, while variational methods typically do not (unless the class of variational distributions includes the true posterior). However, variational methods typically converge faster and are computationally cheaper, leading to a much wider use in contemporary machine learning and statistics.

Merugu, Dhillon, Ghosh, & Lafferty, 2005; Cichocki & Amari, 2010) 5 . When DKL[q(x|o; φ)||p(x|o)] = 0 then q(x|o; φ) = p(x|o) and the variational distribution exactly equals the true posterior, and thus we have solved the inference problem6 . By doing this, we have replaced the inference problem of computing the posterior with an optimization problem of minimizing this divergence. However, merely writing the problem this way does not solve it because the divergence we need to optimize still contains the intractable true posterior. The beauty of variational inference is that it instead optimizes a tractable upper bound on this divergence, called the variational free energy7 . To generate this bound, we simply apply Bayes rule to the true posterior to rewrite it in the form of a generative model and the evidence.

$$D_{\text{KL}}[q(x|o;\phi)||p(x|o)] = D_{\text{KL}}[q(x|o;\phi)||\frac{p(o,x)}{p(o)}]$$

$$= D_{\text{KL}}[q(x|o;\phi)||p(o,x)] + \mathbb{E}_{q(x|o;\phi)}[\ln p(o)]$$

$$= D_{\text{KL}}[q(x|o;\phi)||p(o,x)] + \ln p(o)$$

$$\leq D_{\text{KL}}[q(x|o;\phi)||p(o,x)] = \mathcal{F} \tag{2} $$

Where in the third line the expectation around p(o) vanishes since the expectation is over the variable x which is not in p(o). The variational free energy F = DKL[q(x|o; φ)||p(o, x)] is an upper bound because ln p(o) is necessarily ≤ 0 since, as a probability, 0 ≤ p(o) ≤ 1. Importantly, F is a tractable quantity, since it is a divergence between two quantities we assume we (as the modeller) know – the variational approximate posterior q(x|o) and the generative model p(o, x). Since F is an upper bound, by minimizing F, we drive q(x|o; φ) closer to the true posterior. As an additional bonus, if q(x|o; φ) = p(x|o) then F = ln p(o) or the marginal, or model, evidence, which means that in such cases F can be used for model selection (Wasserman, 2000). We can also gain an important intuition about F by showing that it can be decomposed into a likelihood maximization term and a KL divergence term which penalizes deviation from the Bayesian prior. These two terms are often called the 'accuracy' and the 'complexity' terms. This decomposition of F is often utilized and optimized explicitly in many machine learning algorithms (Kingma & Welling, 2013).

$$\begin{split} \mathcal{F} &= D_{\mathrm{KL}}[q(x|o;\phi)||p(o,x)] \\ &= \underbrace{\mathbb{E}_{q(x|o;\phi)} \left[ \ln p(o|x) \right]}_{\mathrm{Accuracy}} + \underbrace{D_{\mathrm{KL}}[q(x|o;\phi)||p(x)]}_{\mathrm{Complexity}} \end{split}$$

In many practical cases, we must relax the assumption that we know the generative model p(o, x). Luckily this is not fatal. Instead, it is possible to learn the generative model alongside the variational posterior on the fly and in parallel using the Expectation Maximization (EM) algorithm (Dempster, Laird, & Rubin, 1977). The EM algorithm is extremely intuitive. First, assume that we parametrize our unknown generative model with some parameters θ which are initialized at some arbitrary θ0. Similarly, we initialize our variational posterior at some arbitrary φ0. Then, we take turns optimizing F with respect to the variational posterior parameters φ with the generative model parameters θ held fixed and then, conversely, optimize F with respect to the generative model parameters θ with the variational posterior parameters φ held fixed. Mathematically, we can write this alternating optimization as

$$\phi_{t+1} = \underset{\phi}{\operatorname{argmin}} \mathcal{F}(\phi, \theta) \big|_{\theta = \theta_t}$$

$$\theta_{t+1} = \underset{\theta}{\operatorname{argmin}} \mathcal{F}(\phi, \theta) \big|_{\phi = \phi_{t+1}} \tag{3} $$

5 Interestingly the KL divergence is asymmetric (KL[Q||P] 6= KL[P||Q] and is thus not a valid distance metric. Throughout we use the reverse-KL divergence KL[Q||P], as is standard in variational inference. Variational inference with the forward-KL KL[P||Q] has close relationships to expectation propagation (Minka, 2001).

6An exact solution is only possible when the family of variational distributions considered includes the true posterior as a member – for example. if both the true posterior and the variational posterior are Gaussian.

7 In machine learning, this is instead called the negative evidence lower bound (ELBO) which is simply the negative free-energy, and is maximized instead.

Where we use the $|_{x=y}$ notation to mean that the variable x is fixed at value y throughout the optimization. It has been shown that this iterative sequence of optimization problems often converges to good solutions and often does so robustly and efficiently in practice (Boyles, 1983; Dellaert, 2002; Dempster et al., 1977; Gupta & Chen, 2011).

Having reviewed the general principles of variational inference, we can see how they relate to predictive coding. First, to make any variational inference algorithm concrete, we must specify the forms of the variational posterior and the generative model. To obtain predictive coding, we assume a Gaussian form for the generative model $p(o, x; \theta) = p(o|x; \theta)p(x; \theta) = \mathcal{N}(o; f(\theta_1 x), \Sigma_1)\mathcal{N}(x; g(\theta_2 \bar{\mu}), \Sigma_2)$ where we first partition the generative model into likelihood $p(o|x; \theta)$ and prior $p(x; \theta)$ terms. The mean of the likelihood Gaussian distribution is assumed to be some function f of the hidden states x, which can be parameterized with parameters $\theta$ , while the mean of the prior Gaussian distribution is set to some arbitrary function g of the prior mean $\bar{\mu}$ . The variances of the two gaussian distributions of the generative model are denoted $\Sigma_1$ and $\Sigma_2$ . We also assume that the variational posterior is a dirac-delta (or point mass) distribution $q(x|o; \phi) = \delta(x - \mu)$ with a center $\phi = \mu^8$ .

Given these definitions of the variational posterior and the generative model, we can write down the concrete form of the variational free energy to be optimized. We first decompose the variational free energy into an 'Energy' and an 'Entropy' term

$$\mathcal{F} = D_{\text{KL}}[q(x|o;\phi)||p(o,x;\theta)]$$

$$= \underbrace{\mathbb{E}_{q(x|o;\phi)}[\ln q(x|o;\phi)]}_{\text{Entropy}} - \underbrace{\mathbb{E}_{q(x|o;\phi)}[\ln p(o,x;\theta)]}_{\text{Energy}} \tag{4} $$

where, since the entropy of the dirac-delta distribution is 0 (it is a point mass distribution), we can ignore the entropy term and focus solely on writing out the energy.

$$\underbrace{\mathbb{E}_{q(x|o;\phi)}[\ln p(o,x;\theta)]}_{\text{Energy}} = \mathbb{E}_{\delta(x-\mu)}[\ln \left(\mathcal{N}(o;f(\theta_{1}x),\Sigma_{1})\mathcal{N}(x;g(\theta_{2}\bar{\mu}),\Sigma_{2})\right)]$$

$$= \ln \mathcal{N}(o;f(\mu,\theta_{1}),\Sigma_{1}) + \ln \mathcal{N}(\mu;g(\bar{\mu},\theta_{2}),\Sigma_{2})$$

$$= -\frac{(o-f(\mu,\theta_{1}))^{2}}{2\Sigma_{1}} - \frac{1}{2}\ln 2\pi\Sigma_{1} - \frac{(\mu-g(\bar{\mu},\theta_{2}))^{2}}{2\Sigma_{2}} - \frac{1}{2}\ln 2\pi\Sigma_{2}$$

$$= -\frac{1}{2}\left[\Sigma_{1}^{-1}\epsilon_{o}^{2} + \Sigma_{2}^{-1}\epsilon_{x}^{2} + \ln 2\pi\Sigma_{1} + \ln 2\pi\Sigma_{2}\right] \tag{5}$$

where we define the 'prediction errors' $\epsilon_o = o - f(\mu, \theta_1)$ and $\epsilon_x = \mu - g(\bar{\mu}, \theta_2)$ . We thus see that the energy term, and thus the variational free energy, is simply the sum of two squared prediction error terms, weighted by their inverse variances, plus some additional log variance terms.

Finally, to derive the predictive coding update rules, we must make one additional assumption – that the variational free energy is optimized using the method of gradient descent such that,

$$\frac{d\mu}{dt} = -\frac{\partial \mathcal{F}}{\partial \mu} \tag{6}$$

<sup>8In previous works, predictive coding has typically been derived by assuming a Gaussian variational posterior under the Laplace approximation. This approximation effectively allows you to ignore the variance of the Gaussian and concentrate only on the mean. This procedure is essentially identical to the dirac-delta definition made here, and results in the same update scheme. However, the derivation using the Laplace approximation is much more involved so, for simplicity, here we use the Dirac delta definition. The original Laplace derivation can be found in Appendix A of this review – see also Buckley et al. (2017) for a detailed walkthrough.

Given this, we can derive dynamics for all variables of interest $(\mu, \theta_1, \theta_2)$ by taking derivatives of the variational free energy $\mathcal{F}$ . The update rules are as follows,

$$\frac{d\mu}{dt} = -\frac{\partial \mathcal{F}}{\partial \mu} = \Sigma_1^{-1} \epsilon_o \frac{\partial f}{\partial \mu} \theta^T - \Sigma_2^{-1} \epsilon_x \tag{7}$$

$$\frac{d\theta_1}{dt} = \frac{\partial \mathcal{F}}{\partial \theta_1} = -\Sigma_1^{-1} \epsilon_o \frac{\partial f}{\partial \theta_1} \mu^T \tag{8}$$

$$\frac{d\theta_2}{dt} = \frac{\partial \mathcal{F}}{\partial \theta_2} = -\Sigma_2^{-1} \epsilon_x \frac{\partial g}{\partial \theta_2} \bar{\mu}^T \tag{9}$$

Importantly, these update rules are very similar to the ones derived in Rao and Ballard (1999), and therefore can be interpreted as recapitulating the core predictive coding update rules. Furthermore while it is possible to run the dynamics for the $\mu$ and the $\theta$ simultaneously, it is often better to treat predictive coding as an EM algorithm and alternate the updates. Empirically, it is typically best to run the optimization of the $\mu$ s, with fixed $\theta$ until close to convergence, and then run the dynamics on the $\theta$ with fixed $\mu$ for a short while. This implicitly enforces a separation of timescales upon the model where the $\mu$ are seen as dynamical variables which change quickly while the $\theta$ are slowly-changing parameters. For instance, the $\mu$ s are typically interpreted as rapidly changing neural firing rates, while the $\theta$ s are the slowly changing synaptic weight values (K. Friston, 2005; Rao & Ballard, 1999).

Finally, we can think about how this derivation of predictive coding maps onto putative psychological processes of perception and learning. The updates of the $\mu$ can be interpreted as a process of perception, since the $\mu$ is meant to correspond to the estimate of the latent state of the environment generating the o observations. By contrast, the dynamics of the $\theta$ can be thought of as corresponding to learning, since these $\theta$ effectively define the mapping between the latent state $\mu$ and the observations o. Importantly, as will be discussed in depth later, these predictive coding update equations can be relatively straightforwardly mapped onto a potential network architecture which only utilizes local computation and plasticity – thus potentially making it a good fit for implementation in the cortex.

2.2 Multi-layer Predictive Coding

The previous examples have only focused on predictive coding with a single level of latent variables $\mu_1$ . However, the expressiveness of such a scheme is limited. The success of deep neural networks in machine learning have demonstrated that having hierarchical sets of latent variables is key to enabling methods to learn powerful abstractions and to handle intrinsically hierarchical dynamics of the sort humans intuitively perceive. The predictive coding schemes previously introduced can be straightforwardly extended to handle hierarchical dynamics of arbitrary depth, equivalently to deep neural networks in machine learning. This is done through postulating multiple layers of latent variables $x_1 \dots x_L$ and then defining the generative model as follows,

$$p(x_0 \dots x_L) = p(x_L) \prod_{l=0}^{L-1} p(x_l | x_{l+1}) \tag{10} $$

where $p(x_l|x_{l+1}) = \mathcal{N}(x_l; f_l(\theta_{l+1}, x_{l+1}, \Sigma_l)$ and the final layer $p(x_L) = \mathcal{N}(x_L|\bar{x_L}, \Sigma_L)$ has an arbitrary prior $\bar{x_L}$ and the latent variable at the bottom of the hierarchy is set to the observation actually received $x_0 = o$ . Similarly, we define a separate variational posterior for each layer $q(x_{1:L}|o) = \prod_{l=1}^L \delta(x_l - \mu_l)$ , then the variational free energy can be written as a sum of the prediction errors at each layer,

$$\mathcal{F} = \sum_{l=1}^{L} \Sigma_l^{-1} \epsilon_l^2 + \ln 2\pi \det(\Sigma_l) \tag{11} $$

where $\epsilon_l = \mu_l - f_l(\theta_{l+1}, \mu_{l+1})$ and $\det(\Sigma)$ denotes the determinant of the covariane matrix $\Sigma$ . Given that the free energy divides nicely into the sum of layer-wise prediction errors, it comes as no surprise that the dynamics of the $\mu$ and the $\theta$ are similarly separable across layers.

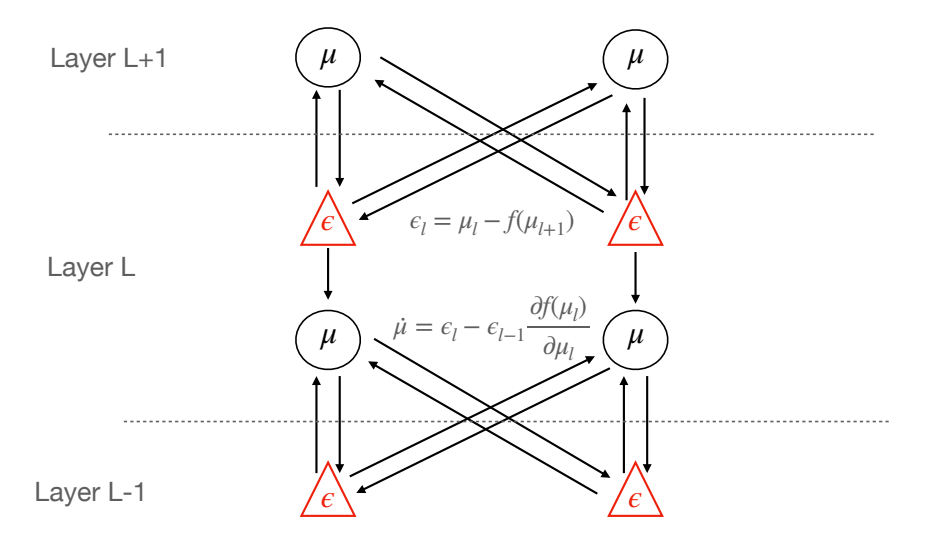

Figure 1: Architecture of a multi-layer predictive coding network (here shown with two value and error neurons in each layer. The value neurons $\mu$ project to both the error neurons of the layer below (representing the top down connections) and the error neurons at the current layer to represent the current activity. The error neurons receive inhibitory top down inputs from the value neurons of the layer above and excitatory inputs from the value neurons at the same layer. Conversely, the value neurons receive excitatory projections from the error neurons of the layer below and inhibitory from the error neurons at the current layer. Crucially, for this model with its explicit error neurons, all synaptic plasticity rules are purely Hebbian.

$$\frac{d\mu_l}{dt} = -\frac{\partial \mathcal{F}}{\partial \mu_l} = \sum_{l=1}^{-1} \epsilon_{l-1} \frac{\partial f_{l-1}}{\partial \mu_l} \theta_l^T - \sum_l^{-1} \epsilon_l \tag{12} $$

$$\frac{d\theta_l}{dt} = -\frac{\partial \mathcal{F}}{\partial \theta_l} = \Sigma_l^{-1} \epsilon_{l-1} \frac{\partial f_{l-1}}{\partial \theta_l} \mu_l \tag{13}$$

We see that the dynamics for the variational means $\mu$ depend only on the prediction errors at their layer and the prediction errors on the level below. Intuitively, we can think of the $\mu$ s as trying to find a compromise between causing error by deviating from the prediction from the layer above, and adjusting their own prediction to resolve error at the layer below. In a neurally-implemented hierarchical predictive coding network, prediction errors would be the only information transmitted 'upwards' from sensory data towards latent representations, while predictions would be transmitted downwards. Crucially for conceptual readings of predictive coding, this means that sensory data is not directly transmitted up through the hierarchy, as is assumed in much of perceptual neuroscience. The dynamics for the $\mu$ s are also fairly biologically plausible as they are effectively just the sum of the precision-weighted prediction errors from the $\mu$ s own layer and the layer below, the prediction errors from below being transmitted back upwards through the synaptic weights $\theta^T$ and weighted with the gradient of the activation function $f_l$ . This means that there is no direct feedforward pass, as is often assumed in models of vision, in predictive coding. It is possible, however, to augment predictive coding models with a feedforward pass, as is discussed in the section on hybrid inference.

Importantly, the dynamics for the synaptic weights are entirely local, needing only the prediction error from the layer below and the current $\mu$ at the given layer. The dynamics thus becomes a Hebbian rule between the presynaptic $\epsilon_{l-1}$ and postsynaptic $\mu_l$ , weighted by the gradient of the activation function.

2.3 Dynamical Predictive Coding and Generalized Coordinates

So far, we have considered the modelling of just a single static stimulus o. However, most interesting data the brain receives comes in temporal sequences o¯ = [o1, o^{2} . . . ]. To model such temporal sequences, it is often useful to split the latent variables into states, which can vary with time, and parameters which cannot. In the case of sequences, instead of minimizing the variational free energy, we must instead minimize the free action F¯, which is simply the path integral of the variational free energy through time 9 (K. Friston, 2008; K. J. Friston, Trujillo-Barreto, & Daunizeau, 2008a):

$$\mu^* = \underset{\mu}{\operatorname{argmin}} \bar{\mathcal{F}}$$

$$\bar{\mathcal{F}} = \int dt \mathcal{F}_t \tag{14}$$

While there are numerous methods and parameterisations to handle sequence data, one influential and elegant approach, which has been developed by Friston in a number of key papers (K. Friston, 2008; K. Friston, Stephan, Li, & Daunizeau, 2010; K. J. Friston et al., 2008a) is to represent temporal data in terms of generalized coordinates of motion. In effect, these represent not just the immediate observation state, but all time derivatives of the observation state. For instance, suppose that the brain represents beliefs about the position of an object. Under a generalized coordinate model, it would also represent beliefs about the velocity (first time derivative), acceleration (second time derivative), jerk (third time derivative) and so on. All these time derivative beliefs are concatenated to form a generalized state. The key insight into this dynamical formulation is, that when written in such a way, many of the mathematical difficulties in handling sequences disappear, leaving relatively straightforward and simple variational filtering algorithms which can natively handle smoothly changing sequences.

Because generalised coordinates can become notationally awkward, we will be very explicit in the following. We denote the time derivatives of the generalized coordinate using a 0 , so µ 0 is the belief about the velocity of the µ, just as µ is the belief about the 'position' about the µ. A key point of confusion is that there is also a 'real' velocity of µ, which we denote µ˙, which represents how the belief in µ actually changes over time – i.e. over the course of inference. Importantly, this is not necessarily the same as the belief in the velocity: µ˙ 6= µ 0 , except at the equilibrium state. Intuitively, this makes sense as at equilibrium (minimum of the free action, and thus perfect inference), our belief about the velocity of mu µ 0 and the 'real' velocity perfectly match. Away from equilibrium, our inference is not perfect so they do not necessarily match. We denote the generalized coordinate representation of a state µ˜ as simply a vector of each of the beliefs about the time derivatives µ˜ = [µ, µ0 , µ00, µ000 . . . ]. We also define the operator D which maps each element of the generalised coordinate to its time derivative i.e. Dµ = µ 0 , Dµ˜ = [µ 0 , µ00, µ000, µ0000 . . . ]. With this notation, we can define a dynamical generative model using generalized coordinates. Crucially, we assume that the noise ω in the generative model is not white noise, but is colored, so it has non-zero autocorrelation and can be differentiated. Effectively, colored noise allows one to model relatively slowly (not infinitely fast) exogenous forces on the system. For more information on colored noise vs white noise see K. J. Friston et al. (2008a); Jazwinski (2007); Stengel (1986). With this assumption we can obtain a generative model in generalized coordinates of motion by simply differentiating the original model.

$$o = f(x) + \omega_{o} \qquad x = g(\bar{x}) + \omega_{x}$$

$$o' = f(x)x' + \omega'_{o} \qquad x' = g(\bar{x})x' + \omega'_{x}$$

$$o'' = f(x)x'' + \omega''_{o} \qquad x'' = g(\bar{x})x'' + \omega''_{x}$$

$$\dots \qquad \dots \qquad (15)$$

Where we have applied a local linearisation assumption (K. J. Friston et al., 2008a) which drops the cross terms in the derivatives. We can write these generative models more compactly in generalized coordinates.

$$\tilde{o} = \tilde{f}(\tilde{x}) + \tilde{\omega}_o \qquad \qquad \tilde{x} = \tilde{g}(\tilde{x}) + \tilde{\omega}_x \tag{16}$$

9This quantity is called the free action due to the analogy between it and the action central to the variational principles central to classical mechanics.

which, written probabilistically is $p(\tilde{o}, \tilde{x}) = p(\tilde{o}|\tilde{x})p(\tilde{x})$ . It has been shown (K. J. Friston et al., 2008a) that the optimal (equilibrium) solution to this free action is the following stochastic differential equation,

$$\dot{\tilde{\mu}} = \mathcal{D}\tilde{\mu} + \frac{\partial \mathbb{E}_{q(\tilde{x}|\tilde{o};\tilde{\mu})}[\ln p(\tilde{o},\tilde{x})]}{\partial \tilde{\mu}} + \tilde{\omega} \tag{17} $$

Where $\tilde{\omega}$ is the generalized noise at all orders of motion. Intuitively, this is because when $\frac{\partial \mathbb{E}_{q(x|o;\mu)}[\ln p(\bar{o},\bar{x})]}{\partial \mu}=0$ then $\dot{\mu}=\mathcal{D}\tilde{\mu}$ , or that the 'real' change in the variable is precisely equal to the expected change. This equilibrium is a dynamical equilibrium which moves over time, but precisely in line with the beliefs $\mu'$ . This allows the system to track a dynamically moving solution precisely, and the generalized coordinates let us capture this motion while retaining the static analytical approach of an equilibrium solution, which would otherwise necessarily preclude motion. There are multiple options to turn this result into a variational inference algorithm. Note, the above equation makes no assumptions about the form of variational density or the generative model, and thus allows multimodal or nonparametric distributions to be represented. For instance, the above equation Equation 17 could be integrated numerically by a number of particles in parallel, thus leading to a generalization of particle filtering (K. J. Friston, Trujillo-Barreto, & Daunizeau, 2008b). Alternatively, a fixed Gaussian form for the variational density can be assumed, using the Laplace approximation. In this case, we obtain a very similar algorithm to predictive coding as before, but using generalized coordinates of motion. In the latter case, we can write out the free energy as,

$$\mathcal{F}_t = \ln p(\tilde{o}|\tilde{x})p(\tilde{x})$$

$$\propto \tilde{\Sigma}_o^{-1}\tilde{\epsilon}_o^2 + \tilde{\Sigma}_x^{-1}\tilde{\epsilon}_x^2 \tag{18}$$

Where $\tilde{\epsilon}_o = \tilde{o} - \tilde{f}(\tilde{x})$ and $\tilde{\epsilon}_x = \tilde{o} - \tilde{g}(\tilde{x})$ . Moreover, the generalized precisions $\tilde{\Sigma}^{-1}$ not only encode the covariance between individual elements of the data or latent space at each order, but also the correlations between generalized orders themselves. Since we are using a unimodal (Gaussian) approximation, instead of integrating the stochastic differential equations of multiple particles, we instead only need to integrate the deterministic differential equation of the mode of the free energy,

$$\dot{\tilde{\mu}} = \mathcal{D}\tilde{\mu} - \tilde{\Sigma}_o^{-1}\tilde{\epsilon}_o - \tilde{\Sigma}_x^{-1}\tilde{\epsilon}_x \tag{19}$$

which cashes out in a scheme very similar to standard predictive coding (compare to Equation 7), but in generalized coordinates of motion. The only difference is the $\mathcal{D}\tilde{\mu}$ term which links the orders of motion together. This term can be intuitively understood as providing the 'prior motion' while the prediction errors provide 'the force' terms. To make this clearer, let's take a concrete physical analogy where $\mu$ is the position of some object and $\mu'$ is the expected velocity. Moreover, the object is subject to forces $\tilde{\Sigma}_o^{-1}\tilde{\epsilon}_o+\tilde{\Sigma}_x^{-1}\tilde{\epsilon}_x$ which instantaneously affect its position. Now, the total change in position $\dot{\mu}$ can be thought of as first taking the change in position due to the intrinsic velocity of the object $\mathcal{D}\mu$ and adding that on to the extrinsic changes due to the various exogenous forces.

2.4 Predictive Coding and Precision

One core aspect of the predictive coding framework, which is absent in the original Rao and Ballard formulation, but which arises directly from the variational formulation of predictive coding and the Gaussian generative model, is the notion of precision or inverse-variances, which we have throughout denoted as $\Sigma^{-1}$ (sometimes $\Pi$ is used in the literature as well). Precisions serve to multiplicatively modulate the importance of the prediction errors, and thus possess a significant influence in the overall dynamics of the model. They have been put to a wide range of theoretical purposes in the literature, all centered around their modulatory function. Early work (K. Friston, 2005) ties the precision parameters to lateral inhibition and biased competition models, proposing that they serve to mediate competition between prediction error neurons, and are implemented through lateral synaptic weights. Later work (Feldman & Friston, 2010; Kanai et al., 2015) has argued instead that precisions can be interpreted as implementing top-down attentional modulation of predictions – which are thus sensitive to the global context variables such as task relevance which have been shown empirically to have a large affect on attentional salience. This work has shown that equipping

predictive coding schemes with precision allows them to recapitulate key phenomena observed in standard attentional psychophysics tasks such as the Posner paradigm (Feldman & Friston, 2010).

Further theoretical and philosophical work has further developed the interpretation of precision matrices into a general purpose modulatory function (Clark, 2015). This function could be implemented neurally in a number of ways. First, certain precision weights could be effectively hardcoded by evolution. One promising candidate for this would be the precisions of interoceptive signals transmitting vital physiological information such as hunger or pain. These interoceptive signals would be hardwired to have extremely high precision, to prevent the organism from simply learning to ignore or down-weight them in comparison to other objectives. Conceptually, assuming high precision for interoceptive signals can shed light on how such signals drive adaptive action through active inference (A. K. Seth & Critchley, 2013; A. K. Seth & Friston, 2016). It is also possible that certain psychiatric disorders such as autism (Lawson, Rees, & Friston, 2014; Van Boxtel & Lu, 2013) and schizophrenia (Sterzer et al., 2018) could be interpreted as disorders of precision (either intrinsically hardcoded, or else resulting from an aberrant or biased learning of precisions). If on track, these theories would provide us with a helpful mechanistic understanding of the algorithmic deviations underlying these disorders, which could potentially enable improved differential diagnosis, and could even guide clinical intervention. This approach, under the name of computational psychiatry, is an active area of research and perhaps one of the most promising avenues for translating highly theoretical models such as predictive coding into concrete medical advances and treatments (Huys, Maia, & Frank, 2016).

Mathematically, a dynamical update rule for the precisions can be derived as a gradient descent on the free-energy. This update rule becomes an additional M-step in the EM algorithm, since the precisions are technically parameters of the generative model. Recall that we can write the free-energy as,

$$\mathcal{F} = \sum_{l=1}^{L} \Sigma_l^{-1} \epsilon_l^2 + \ln 2\pi \det(\Sigma_l) \tag{20} $$

We can then derive the dynamics of the precision Σ −1 l matrix as a gradient descent on the free-energy with respect to the variance,

$$\frac{d\Sigma}{dt} = -\frac{\partial \mathcal{F}}{\partial \Sigma} = \Sigma_l^{-T} \epsilon_l \epsilon_l^T \Sigma_l^{-T} - \Sigma_l^{-T} = \Sigma_l^{-1} \epsilon_l \epsilon_l^T \Sigma_l^{-T} - \Sigma_l^{-1} = \tilde{\epsilon}_l \tilde{\epsilon}_l^T - \Sigma_l^{-1} \tag{21} $$

Where we have used the fact that the covariance (and precision) matrices are necessarily symmetric (Σ −1 = Σ−T ). Secondly, we have defined the precision-weighted predictions errors as ˜l = Σ−1 l . From these dynamics, we can see that the average fixed-point of the precision matrix is simply the variance of the precision-weighted prediction errors at each layer

$$\mathbb{E}\left[\frac{d\Sigma}{dt}\right] = \mathbb{E}\left[\tilde{\epsilon}_{l}\tilde{\epsilon}_{l}^{T}\right] - \Sigma_{l}$$

$$= \mathbb{E}\left[\frac{d\Sigma}{dt}\right] = 0 \implies \Sigma_{l} = \mathbb{E}\left[\tilde{\epsilon}_{l}\tilde{\epsilon}_{l}^{T}\right]$$

$$\implies \Sigma_{l} = \mathbb{V}\left[\tilde{\epsilon}\right] \tag{22} $$

In effect, the fixed point of the precision dynamics will lead to these matrices simply representing the average variance of the prediction errors at each level. At the lowest level of the hierarchy, the variance of the prediction errors will be strongly related to the intrinsic variance of the data, and thus algorithmically, learnable precision matrices allow the representation and inference on data with state-dependent additive noise. This kind of state-dependent noise is omnipresent in the natural world, in part due to its own natural statistics, and in part due to intrinsically noisy properties of biological perceptual systems (Stein, Gossen, & Jones, 2005). Similarly, the human visual system can function over many orders of magnitude of objective brightness which dramatically alters the variance of the visual input, while in auditory perception the variance of specific sound inputs is crucially dependent on ambient audio conditions. In all cases, being able to represent the variance of the incoming sensory data is likely crucial to being able to successfully model and perform accurate inference on such sensory streams.

Precision also has deep relevance to machine learning. As noted in the backpropagation section later in the paper, predictive coding with fixed predictions and identity precisions forms a scheme which can converge to the exact gradients computed by the backpropagation of error algorithm. Importantly, however, when precisions are included in the scheme, predictive coding forms a superset of backpropagation which allows it to weight gradients by their intrinsic variance. This more subtle and nuanced approach may prove more adaptable and robust than standard backpropagation, which implicitly assumes that all data-points in a dataset are of equal value - an assumption which is likely not met for many datasets with heteroscedastic noise. Exploring the use of precision to explicitly model the intrinsic variance of data is an exciting area for future work, especially applied to large-scale modern machine learning systems. Indeed, it can be shown (see Appendix B) that in the linear case using learnt precisions is equivalent to a natural gradients algorithm Amari (1995). Natural gradients modulate the gradient vector with the Hessian or curvature of the loss function (which is also the Fisher information) and therefore effectively derive an optimal adaptive learning rate for the descent, which has been found to improve optimization performance, albeit at a sometimes substantial computational cost of explicitly computing and materializing the Fisher information matrix.

There remains an intrinsic tension, however, between these two perspectives on precision in the literature. The first interprets precision as a bottom-up 'objective' measure of the intrinsic variance in the sensory data and then, deeper in the hierarchy, the intrinsic variance of activities at later processing stages. This contrasts strongly with views of precision as serving a general purpose adaptive modulatory function as in attention. While attention is indeed deeply affected by bottom up factors, which are generally termed attentional salience (Parkhurst, Law, & Niebur, 2002), these factors are typically modelled as Bayesian surprise (Itti & Baldi, 2009). 'Bayesian surprise' is often modelled mathematically as the information gain upon observing a stimulus which is not necessarily the same as high or low variance. For instance, both high variance (such as strobe-lights or white noise) and low variance (such as constant bright incongruous blocks of colour) may both be extremely attentionally salient in visual input while having opposite effects on precision. Precisions, if updated using Equation 21 explicitly represent the objective variance of the stimulus or data, and therefore cannot easily account for the well-documented top-down or contextually guided attention (Henderson, 2017; Kanan, Tong, Zhang, & Cottrell, 2009; Torralba & Oliva, 2003). This means that it seems likely that theories of top-down modulatory precision cannot simply rely on a direct derivation of precision updating in terms of a gradient descent on the free energy, but must instead postulate additional mechanisms which implement this top down precision modulation explicitly. One possible way to do this is to assume a system of direct inference over precisions with modulatory 'precision expectations' which form hyperpriors over the precisions, which can then be updated in a Bayesian fashion by using the objective variance of the data as the likelihood. However, much remains to be worked out as to the precise mathematics of this scheme.

Finally, there remains an issue of timescale. Precisions are often conceptualised as being optimized over a slow timescale, comparable with the optimisation of synaptic weights – i.e., in the M-step of the EM algorithm – which fits their mathematical expression as a parameter of the generative model. However, attentional modulation can be very rapid, and likely cannot be encoded through synaptic weights. These concerns make any direct identification of precision with attention difficult, while the idea of precision as instead encoding some base level of variance-normalization or variance weighting finds more support from the mathematics. However even here problems remain due to timescales. The objective variance of different regions of the sensory stream can also vary rapidly, and it is not clear that this variation can be encoded into synaptic weights either, although it is definitely possible to maintain a moving average of the variance through the lateral synaptic weights. Overall, the precise neurophysiological and mathematical meaning and function of precision remains quite uncertain, and is thus an exciting area of future development for the predictive

coding framework. Finally, there has been relatively little empirical work on studying the effects of learnable precision in large-scale predictive coding networks.

2.5 Predictive Coding in the Brain?

While technically predictive coding is simply a variational inference and filtering algorithm under Gaussian assumptions, from the beginning it has been claimed to be a biologically plausible theory of cortical computation, and the literature has consistently drawn close connections between the theory and potential computations that may be performed in brains. For instance, the Rao and Ballard model explicitly claims to model the early visual cortex, while K. Friston (2005) explicitly proposed predictive coding as a general theory of cortical computation. In this section, we review work which has began translating the mathematical formalism into neurophysiological detail, and focus especially on the seminal cortical microcircuit model by Bastos et al. (2012). We also briefly review empirical work that has attempted to verify or falsify key tenets of predictive coding in the cortex, and discuss the methodological or algorithmic difficulties with this approach.

The hierarchical generative models generally treated in predictive coding are composed of multiple layers in a stacked structure. Each layer consists of a single vector of value, or activity, neurons and a single vector of error neurons. However, the cortex is not organised into such a simple structure. Instead each cortical 'area' such as V1, V2, or V4 is comprised of 6 internal layers: L1-L6. These layers are reciprocally connected with each other in a complex way which has not yet been fully elucidated, and may subtly vary between cortical regions and across species (Felleman & Van Essen, 1991). Nevertheless, there is convergence around a relatively simple scheme where the six cortical layers can be decomposed into an 'input layer' L4, which primarily receives driving excitatory inputs from the area below as well as from the thalamus, and then two relatively distinct processing streams – a feedforward superficial, or supragranular stream consisting of layers L1/2/3 and a feedback deep, or infragranular stream consisting of layers 5 and 6 (layer 4 is typically called the 'granular' layer). These streams have been shown to have different preferred oscillatory frequencies, with the superficial layers possessing the strongest theta and gamma power ((Bastos et al., 2015), and the deep layers possessing strongest alpha and beta power which are negatively correlated across layers. The superficial layers then send excitatory connectivity forward to L4 of the area above, while the deep layers possess feedback connectivity, which can be both inhibitory or excitatory, back to both deep and superficial layers of the areas below. Within each cortical area, there is a well-established 3 step feedback relay, from the input L4, to the superficial layers L2/3, which then project their input forwards to the next area in the hierarchy. From L2/L3, the superficial layers then project to the deep Layer 5, which could then project to L6, or else provide feedback to regions lower in the hierarchy (Rockland, 2019). Interestingly, deep L5 and L6 are the only cortical layers which contains neurons which project to subcortical regions or the brainstem, and L6 especially appears to maintain precise reciprocal connectivity with the thalamus (Thomson, 2010). While this feedforward input, superficial, deep 'relay' is well studied, there are also other pathways, including from deep to L4 (Amorim Da Costa & Martin, 2010), and superficial feedback connections which are not well explored. Moreover, alongside the cortico-cortico connectivity studied here, there are also many cortico-subcortico, and especially cortico-thalamic connections which are less well-understood or integrated into specific process theories of predictive coding (Markov et al., 2014).

While this intrinsic connectivity of the cortical region may seem dauntingly complex, much progress has been made within the last decade of fitting predictive coding models to this neurophysiology. Of special importance is the work of Bastos et al. (2012) who provided the central microcircuit model of predictive coding 10. The fundamental operations of predictive coding require predictions to be sent down the hierarchy, while prediction errors are sent upwards. The dynamics of the value neurons µs require both the prediction errors at the current layer (from the top down predictions of the layer above) to be combined with the prediction errors from the layer below mapped through the backwards

10Perhaps the first worked out canonical microcircuit for predictive coding, although not using that name, was in the early work of Mumford (1992). He argued that the descending deep pathway transmits 'templates' backwards which are then fitted to the data present in the layers below before computing 'residuals' which are transmitted upwards on the superficial to L4 ascending pathway.

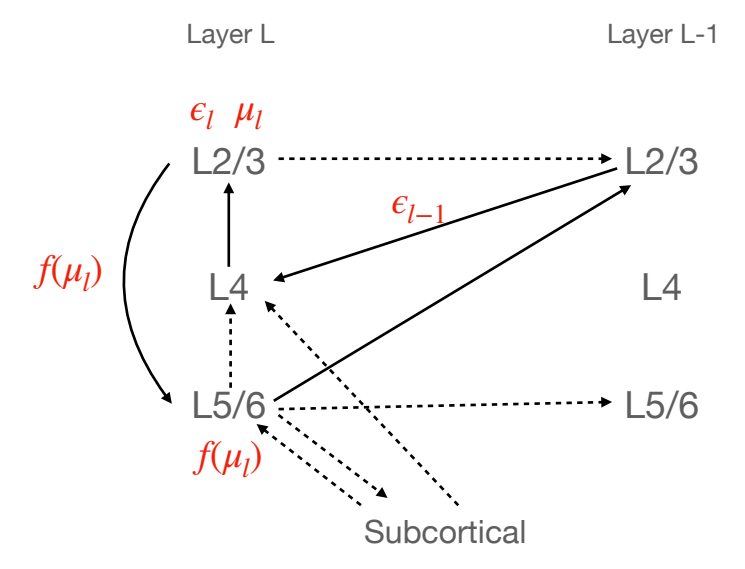

Figure 2: The canonical microcircuit proposed by Bastos et al mapped onto the laminar connectivity of a cortical region (which comprises 6 layers). Here, for simplicity, we group layers L2 and L3 together into a broad 'superficial' layer and L5 and L6 together into a 'deep' layer. We ignore L1 entirely since there are few neurons there and they are not involved in the Bastos microcircuit. Bold lines are included in the canonoical microcircuit of Bastos et al. Dashed lines are connections which are known to exist in the cortex which are not explained by the model. Red text denotes the values which are computed in each part of the canonical microcircuit

weights θ T and the derivative of the activation function ∂f ∂µ. The Bastos microcircuit model associates a 'layer' of predictive coding, with a 6-level cortical 'region'. The inputs to L4 of the region are taken to be the prediction errors of the region below, which are then immediately passed upwards to the superficial levels L2/L3 where the prediction error l and the value neurons l are taken to be located. The predictions f(µl , θl) are taken to reside in the deep layers L5/6. The superficial layers receive top-down prediction inputs from the deep layers of the region above it in the hierarchy f(µl+1, θl+1) which are combined to compute the prediction errors l of the region. These are then combined with the bottom-up prediction errors l+1 coming from L4 to update the value neurons µl , also located in the superficial layers. The value neurons then transmit to the deep layers L5/6 where the predictions f(µl , θl) to be transmitted to the region below on the hierarchy are computed, while the superficial prediction error units l transmit to the L4 input layer of the region above in the hierarchy. The full schematic of the Bastos model is presented in Figure 2.

This model fits the predictive coding architecture to the laminar structure within cortical regions. It explains several core features of the cortical microcircuit – that superficial cells (interpreted as encoding prediction errors), project forward to the L4 input layer of the region above. It also provides an interpretation of the function of the well-established 'relay' from L4 to superficial (transmitting inputted prediction errors to superficial), the computation of prediction errors and value neurons in the superficial layers, and then the superficial to deep connectivity as encoding the prediction of the value neurons. It also can explain the deep to superficial backwards pass as the transmission of predictions from one region to the next. Similarly, the L5 and L6 deep cortico-subcortical transmission can be straightforwardly interpreted as the transmission of predictions to subcortical areas, or to motor and brainstem regions to perform active inference. However, there are several aspects of the model and the neurophysiology which require further elucidation. One primary issue is the well-established deep-deep feedback pathway from the deep layers of one region to the deep layers of the region below it in the hierarchy (Amorim Da Costa & Martin, 2010; Harris et al., 2018). In strength, this feedback pathway is often considered more important than the deep-superficial pathway that is thought to convey the predictions to the prediction error units in the superficial layers of the region below. This pathway is entirely unexplained within

the Bastos model, yet it appears to be important for cortical function. It is possible that this may be thought of as a prediction pathway, so that predictions at one level can directly modulate predictions at the layer below without having to have their influence modulated by going through the prediction error units. This would provide the brain with a powerful downward or generative path, enabling it to compute low-level predictions effectively directly from high-level abstractions in a single downward sweep. However, such a path is not used in the mathematical predictive coding model, and it is unclear what probabilistic interpretation it could have. A secondary concern is the fact that there also exists a superficial-superficial feedback connection with unclear function within this model (Markov et al., 2014). It has been suggested that this feedback connection may carry precision information (Kanai et al., 2015), although it is unclear why this is necessary since the actual dynamics of the precision only require access to the precision-weighted prediction errors of the current layer (Equation 21).

A more general concern with this model is that the deep layers are in general relatively poorly utilised by the model. All the 'action' so to speak occurs in the superficial layers – which is where both the prediction errors and the value neurons are located, and where the top-down predictions interface with the prediction errors. The only task the deep layers provide in this model is to compute the predictions and then relay them to the layers below. It is possible, perhaps likely, that the actual function f(θ, ; µ) could be considerably more complex than the ANN approach of synaptic weights passed through an elementwise nonlinear activation function. However, extensions of predictive coding to more complex backwards functions have not yet been substantially explored in the literature, and would require more complex update rules for the parameters which may lead to less biologically plausible learning rules. It is also known that the deep layers contain the connections to the thalamus, striatum, and other subcortical regions which are likely important in action selection as well as large-scale coordination across the brain. However, such effects and connections are not included in standard predictive coding models which are primarily concerned with only cortico-cortical processing.

One interesting potential issue is that there are several null operations in the model. For instance, the prediction errors l−1 are computed in the superficial layers at the level below, and then transmitted first to the input layer L4 before being transmitted again to the superficial layers. This provides two sets of operations on the prediction errors while only one is necessary, thus necessitating that one of these steps is effectively a pure relay step without modification of the prediction errors – an interesting and testable neurophysiological prediction. A similar situation arises with the predictions, where although the predictions are considered to only be computed as a nonlinear function of the value units, mapped through synaptic connections, the predictions actually undergo two steps of computation. Firstly in the superficial-deep transmission within a region, and secondly the deep-superficial feedback transmission to the region below. According to the standard model, one of these steps must be a null step and not change the predictions, which could in theory be tested by current methods. Importantly, it is possible that the actual function being computed by the predictions is more complex (although not the prediction errors), and thus takes multiple steps. However, to achieve learning in such a system would require more complicated update rules, which would likely exacerbate the issues of weight transport and derivative computation already inherent in the algorithm. An additional interesting consideration is the extension of predictive coding from a simple linear stack of hierarchical regions, to a heterarchical scheme, where multiple regions may all project to the same region, and similarly one region may send prediction errors to many others. Predictive coding makes a very strong hypothesis in this situation, which is that heterarchical connectivity must be symmetrical. If a region sends feedforward prediction errors to another region, it must receive feedback predictions from it and vice versa. This feature of connectivity in the brain has long been confirmed through neuroantomical studies Felleman and Van Essen (1991); Mumford (1992).

While the prediction errors must be transmitted upwards by being modulated through the transpose of the forward weights θ T , which would be implemented as the synaptic strengths in either the deep-superficial backwards paths, or the superficial-deep forward relay step, both of which are far from the superficial-input pathway, thus raising a considerable issue of weight transport. Additionally, these prediction errors must transmit with them the derivative of the activation function ∂f ∂µ, which is theoretically available at the superficial layers of the level below where the prediction errors are transmitted, but would then need to be computed separately and transmitted back up with the prediction errors. The weight transport problem poses a greater difficulty, however, as we discuss below it may be solvable with learnable or random backwards weights.