title: Pedersen和Risi - 2021 - Evolving and Merging Hebbian Learning Rules Increasing Generalization by Decreasing the Number of R

source_pdf: /home/lzr/code/thesis-reading/Pedersen和Risi - 2021 - Evolving and Merging Hebbian Learning Rules Increasing Generalization by Decreasing the Number of R.pdf

Evolving and Merging Hebbian Learning Rules: Increasing Generalization by Decreasing the Number of Rules

Joachim Winther Pedersen jwin@itu.dk IT University of Copenhagen Copenhagen, Denmark

Sebastian Risi sebr@itu.dk IT University of Copenhagen Copenhagen, Denmark

ABSTRACT

Generalization to out-of-distribution (OOD) circumstances after training remains a challenge for artificial agents. To improve the robustness displayed by plastic Hebbian neural networks, we evolve a set of Hebbian learning rules, where multiple connections are assigned to a single rule. Inspired by the biological phenomenon of the genomic bottleneck, we show that by allowing multiple connections in the network to share the same local learning rule, it is possible to drastically reduce the number of trainable parameters, while obtaining a more robust agent. During evolution, by iteratively using simple K-Means clustering to combine rules, our Evolve & Merge approach is able to reduce the number of trainable parameters from 61,440 to 1,920, while at the same time improving robustness, all without increasing the number of generations used. While optimization of the agents is done on a standard quadruped robot morphology, we evaluate the agents' performances on slight morphology modifications in a total of 30 unseen morphologies. Our results add to the discussion on generalization, overfitting and OOD adaptation. To create agents that can adapt to a wider array of unexpected situations, Hebbian learning combined with a regularising "genomic bottleneck" could be a promising research direction.

CCS CONCEPTS

• Computing methodologies → Bio-inspired approaches.

KEYWORDS

Plastic neural networks, local learning, indirect encoding, generalization

ACM Reference Format:

Joachim Winther Pedersen and Sebastian Risi. 2021. Evolving and Merging Hebbian Learning Rules: Increasing Generalization by Decreasing the Number of Rules. In 2021 Genetic and Evolutionary Computation Conference (GECCO '21), July 10–14, 2021, Lille, France. ACM, New York, NY, USA, 9 pages.https://doi.org/10.1145/3449639.3459317

1 INTRODUCTION

The inability to adapt to out-of-distribution (OOD) situations makes artificial agents less useful and despite many advances in the field of reinforcement learning and evolutionary strategies, making adaptable agents remains a challenge [31, 64–66]. If we want agents to

1 2 3 4 5 6 7 8 9 1 4 3 5 2 2 1 4 3 1. ⍺ ABCD 2. ⍺ ABCD 3. ⍺ ABCD 4. ⍺ ABCD 5. ⍺ ABCD 6. ⍺ ABCD 7. ⍺ ABCD 8. ⍺ ABCD 9. ⍺ ABCD Initialize learning rule parameters: Merge the rules: 1. ⍺ ABCD 2. ⍺ ABCD 3. ⍺ ABCD 4. ⍺ ABCD 5. ⍺ ABCD Learning rules adapt connections at each time step. Evolve for a specified number of generations: Repeat I. II. III.

Figure 1: Evolve and Merge Approach. First, one learning rule is randomly initialized for each connection in the network. Then, the learning rule parameters are evolved for a predetermined number of generations. Subsequently, the rules that have similar parameters are merged to a single rule. The new reduced rule set is then evolved further. This process is repeated and continues until the maximum number of generations allowed has been reached.

be deployed in complex environments, we cannot expect to be able to train them on all possible situations beforehand.

While artificial neural networks (ANNs) were originally inspired by the brain, they differ from biological neural networks on several key points. One crucial difference is that most ANNs have their connection strengths trained during a training phase, after which they stay fixed forever. Biological neural networks on the other hand undergo changes throughout their lifetimes. The framework of Evolved Plastic Artificial Neural Networks (EPANNs) [54] aims to incorporate the plasticity – and thereby the adaptability – of biological neural networks into artificial agents. The brain architectures found in biological agents have been shaped by evolution throughout millions of years [5]. The specific wiring of the synapses within each individual brain is a result of learning to adapt to sensory input, faced within the lifetime of the individual [19, 40, 58].

Here, we build upon the approach introduced in Najarro and Risi [35], where parameters of local plasticity rules - not the connections of the network - were evolved. With this approach, ANNs with plastic connections showed better performances than static ANNs when faced with changes in robot morphology not seen during training.

However, in contrast to nature, in which genomes encode an extremely compressed blueprint of a nervous system, the approach by Najarro and Risi [35] required each connection in the network to have its own learning rule, significantly increasing the amount of trainable parameters.

The amount of information it takes to specify the wiring of a sophisticated brain is far greater than the information stored in the genome [5]. Instead of storing a specific configuration of synapses, the genome is thought to encode a much smaller number of rules that govern how the wiring should change throughout the lifetime of the individual [63]. In this manner, learning and evolution are intertwined: evolution shapes the rules that in turn shape learning [26, 41, 52]. If the rules encoded by the genome do not allow the individual to learn useful behavior, then these rules will have to be adapted or go extinct. The phenomenon that a large number of synapses has to be controlled by a small number of rules has been called the "genomic bottleneck", and it has been hypothesized that this bottleneck acts a regularizer that selects for generic rules likely to generalize well [63].

Here, we aim to mimic the genomic bottleneck by limiting the number of rules to be much smaller than the number of connections in the neural networks, in the hope that we can evolve more robust agents. The main insight in the novel approach introduced in this paper (Figure 1) is that after having optimized one learning rule for each synapse in the network, it is possible with a simple clustering approach to drastically reduce the number of unique learning rules required to achieve good results. In fact, we show that starting from having one rule per connection in the ANN (12,288 rules) we can decrease this number of rules by 96.875 % to have only 384 rules controlling all 12,288 connections. We further show that as the number of learning rules decreases, the robustness to unfamiliar morphologies tends to increase. In addition to be inspired by the compressed representations in genomes, the method proposed in this paper is related to the field of indirect encoding, which has a long history in artificial intelligence research [3, 20, 57, 60].

As we gradually decrease the number of learning rules, we end up with a set of rules, which have a smaller number of trainable parameters than there are connections in the network. We operationalize robustness as being able to perform well across an array of settings not seen during training. We compare the robustness of plastic ANNs to that of different static ANNs (described further in section 3.4): a plain static network with the same architecture as our plastic networks, a smaller static network, and static networks where noise is applied to their inputs during optimization. The plastic networks outperform the plain static networks of different sizes in terms of robustness, and perform at the same level as the best configurations of static networks with noisy inputs while requiring a significantly lower number of trainable parameters.

In the future it will be interesting to further increase the expressivity of the evolved rules, potentially allowing an even greater genomic compression with increased generalisation to robots with drastically different morphologies.

2 RELATED WORK

This section reviews related work on Hebbian learning and indirectly encoding plasticity in evolving neural networks.

2.1 Hebbian Plasticity

In neuroscience, Hebbian theory proposes that if a group of neurons are activated close to each other in time, the neurons can increase their efficacy in activating each other at a later point [28]. In this way, the full group could thus become active, even if just a part of the group was initially activated by external stimuli [33]. This type of plasticity constitutes a local learning rule, where the change in connection only depends on information local to the two connected neurons, such as their activation. In this way, recurrent connections within a layer of neurons endow a network with the ability to "complete patterns"[29]: if a stimulus at an earlier time has activated a certain group of neurons, also called a cell assembly, the network might be able to respond with the activation of this same cell assembly, if it is faced with an incomplete or noisy presentation of this stimulus [27]. These cell assemblies have been proposed to be the basic units for thoughts and cognition [6, 42, 50], and their existence was first suggested by Hebb [43].

Several past studies have focused on finding ways to use plasticity inspired by Hebbian plasticity in artificial neural networks. The motivation for this has for some studies been to study network plasticity in computational models [1, 55], and for other studies the aim has been to better enable models to generalize. For example, Soltoggio et al. [53] evolve modulatory neurons that when activated will alter the connection strengths between other neurons. With this approach, they are able to solve the T-Maze problem, where rewards are not stationary. In Orchard and Wang [37], linear and non-linear learning rules are evolved to adapt to a simple foraging task. Yaman et al. [62] use genetic algorithms to optimize delayed synaptic plasticity that can learn from distal rewards. Common to these examples are that learning rules that update neural connections have access to a reward signal during the lifetime of the agent. While this might be a useful future addition to our approach, here the learning rules only have access to neural activations, requiring adaptation to only rely on the robot's proprioceptors.

2.2 Indirect Encoding with Plasticity

One of the goals of indirect encoding approaches is to be able to represent a full solution, like a large neural network, in a compressed manner [3, 20, 57]. Indirect encoding schemes come in many variations. Examples include letting smaller networks determine the connection strengths of a larger network [7, 22, 45, 47], or representing the connections of a neural network by Fourier coefficients [18, 32]. Of particular relevance to our work are methods that use plasticity rules to make indirect encodings.

Early approaches include that of Chalmers [8], where a single parameterized learning rule with 10 parameters was evolved to allow a feedforward network to do simple input-output associations. More recently, approaches such as adaptive HyperNEAT [46] have been deployed to exploit the geometry of a neural network to produce patterns of learning rules. The HyperNEAT approach has previously been used for improving a controller's ability to control robot morphologies outside of what was experienced during training [48]. However, this method depended on explicit information about the morphologies, whereas the method used in the current paper does not rely on any such information. Indirectly encoding plasticity has shown to improve a network's learning abilities, with networks

that are more regular showing improved performance [60]. These earlier results point at a deep connection between plasticity and indirect encodings, which has so far received little attention.

3 APPROACH

The approach introduced in this paper evolves a set of local learning rules, where the number of rules is ultimately much smaller than the number of connections. In contrast to other indirect encoding methods, instead of starting with a small rule set, the Evolve & Merge approach starts with a large amount of trainable parameters compared to the number of trainable parameters in the ANN and only over the course of the evolution end up with a smaller number of trainable parameters by merging rules that have evolved to be very similar (Figure 1).

For learning rules, we use a parameterized abstraction of Hebbian learning. The so-called "ABCD" rule, which has been used several times in the past as a proxy for Hebbian learning [35–37, 44], updates the connection between two neurons in the following manner:

$$\Delta w_{ij} = \alpha (Ao_i o_j + Bo_i + Co_j + D) \tag{1}$$

where $w_{ij}$ is the connection strength between the neurons, $o_i$ and $o_j$ are the activity levels of the two connected neurons, $\alpha$ is a learned learning rate, and A, B, C, and D are learned constants.

Instead of directly optimizing connection strengths in the plastic network, we only optimize parameters of the ABCD learning rules, which in turn continually adapts the network's connections throughout the lifetime of the agent. The parameters of the learning rules are randomly initialized before evolution following a normal distribution, $\mathcal{N}(0,0.1)$ , and are optimized at the end of each generation. In the beginning of each new episode, the connection strengths of a plastic network are randomly initialized, drawn from a uniform distribution, Unif(-0.1,0.1). At each time step of the episode, after an action has been taken by the agent, each connection strength is changed according to the learning rule that it is assigned to. Below, we show training and performance of models for which a unique learning rule is optimized for each connection in the network, as well as models where multiple connections share the same learning rule.

3.1 Environment

We train and evaluate our models on how well they can control a simulated robot in the AntBullet environment [14]. Here, the task is to train a three-dimensional four-legged robot to walk as efficiently as possible in a certain direction.

The neural network controlling the robot receives as input a vector of length 28, in which the elements correspond to the position and velocity of the robot, as well as angles and angular velocities of the robot's joints. To control the robot's movements, the output of the neural network is a torque for each of the eight joints of the robot, resulting in a vector of eight elements.

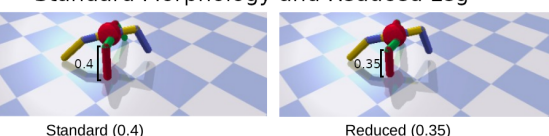

Previous research has mostly focused on a robot's ability to cope with catastrophic damages to a leg [9, 11, 35]. Here we take a different approach, where legs are modified, but still usable (Figure 2). For evaluation, we create slight variations on the standard morphology by shrinking the lower part of the robot's legs (the "ankles" in the robot's xml file). The standard length of an "ankle" is 0.4.

Standard Morphology and Reduced Leg

Figure 2: Robot Environment. Right: The standard morphology that the models were optimized for. Left: An example of the most severe leg reduction done to a leg to test robustness. The lower part of the leg was reduced from 0.4 to 0.35. In this figure, the reduction was done to the red part of the leg in the foreground of the image.

Table 1: Hyperparameters for ES

| Parameter | Value | |

|---|---|---|

| Population Size | 500 | |

| Learning Rate | 0.1 | |

| Learning Rate Decay | 0.9999 0.001 0.1 |

|

| Learning Rate Limit | ||

| Sigma | ||

| Sigma Decay | 0.999 | |

| Sigma Limit | 0.01 | |

| Weight Decay | 0 |

We evaluate five different reductions, reducing the length of an "ankle" to 0.39, 0.38, 0.37, 0.36, and 0.35, respectively. One by one, each of the four legs have their ankle reduced to each of these five lengths. In addition, we evaluate on a setting where both front legs are reduced at the same time, as well as a setting where the front left leg and the back right leg are reduced at the same time. We thus have five different reductions on six different leg combinations, amounting to 30 different morphology variations. This way we can more precisely determine how much variation from the original setting the models are able to cope with. When evaluating on a varied morphology, we adapt the reward to solely reflect the distance traveled in the correct direction, so that no points are given for just "staying alive".

3.2 Evolution Strategy

All models are optimized using Evolution Strategy (ES) [49]. In static networks connections are evolved directly and in plastic networks only the learning rule parameters are evolved. We use an off-the-shelf implementation of ES [21] with its default hyperparameters, except that we set weight decay to zero (see Table 1 for complete hyperparameter configurations). This implementation uses mirrored sampling, fitness ranking, and uses the Adam optimizer for optimization. In all runs, a population size of 500 is used, and optimization spans 1,600 generations.

3.3 Merging of Rules

In order to produce rule sets with fewer rules than the number of connections in the network, we use the K-Means clustering algorithm [39] to gradually merge the learning rules throughout

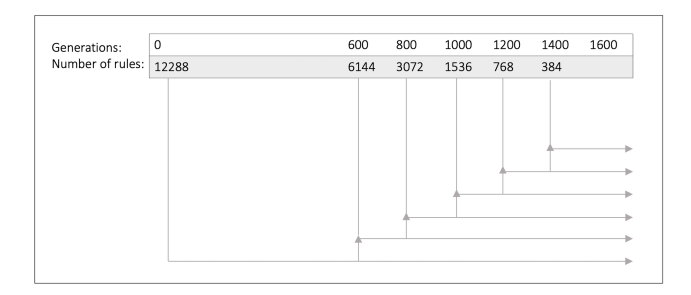

Figure 3: Rule Merging. The number of rules is iteratively halved using K-Means clustering. Every reduced rule set is optimized until the time limit of 1,600 generations so that fair comparisons between models can be made.

training. We first initialize a network with one learning rule for each synapse (12,288 different learning rules) and optimize these learning rules for 600 generations. We then half the number of learning rules by using the K-Means algorithm to find 6,144 cluster centers among the 12,288 rules. The new learning rule of a given synapse will simply be the cluster center of the learning rule, to which it was previously assigned. The newly found smaller set of learning rules is then optimized for 200 generations, before K-Means clustering is used to half the number of rules again. The number of generations between merging of the rules as well as how many cluster centers to reduce the rule set to are hyperparamters that were determined to work well in preliminary experiments, and the choices mainly depend on how much total training time is permitted. This process is repeated until we have optimized for 1,600 generations altogether. In order to be able to make fair comparisons of the different reduced rule sets, we also optimize each individual reduced set until it has been optimized for 1,600 generations (see Figure 3).

3.4 Experiments

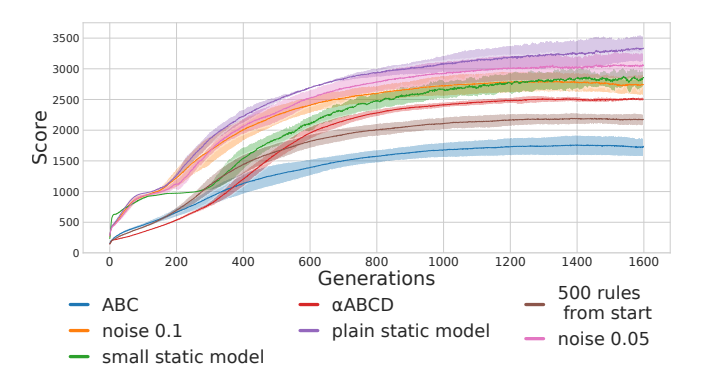

We compare a total of 12 different models in terms of their ability to learn and their robustness (see Table 2 and Figure 9a, 9b). Common to all of them is that they are feedforward networks, they have two hidden layers, they have no biases, and the hyperbolic tangent function is used for all activations.

All networks, have 128 neurons in the first hidden layer, and 64 neurons in the second. The plain static model is a static neural network without any Hebbian learning. We also optimize and evaluate a smaller static model, in which the hidden layers are size 32 and 16 (1,536 parameters). Two other static network models were trained in a setting where noise was applied to the input throughout optimization. If the models can perform well despite noisy inputs, they might also be more robust to morphology changes despite being static, and therefore we create these models as additional baselines to compare our approach to. At each time step during optimization, a vector of the same size as the input with elements drawn from a normal distribution was created and then added to the input element-wise. For one model, the distribution was N (0, 0.05), the other had a distribution of N (0, 0.1). In the figures below, these models are named after the amount of noise their inputs received during optimization.

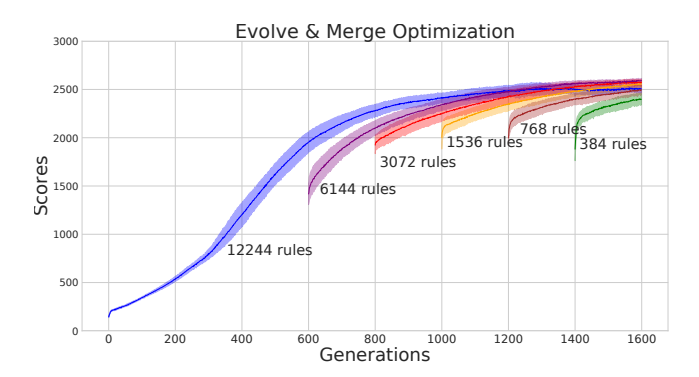

Figure 4: Evolve & Merge Training Results. Training curves of five independent evolution runs. The solid lines reflect the average population mean for each run of each model. The filled areas are the standard deviations of the models' population means. Immediately after a merging of rules has occurred, the newly reduced rule set is set back a bit in its performance compared to before merging, but performance quickly recovers and improves. Without adding to the total optimization time of 1600 generations, a rule set of just 384 different rules reaches a comparable population mean score as a rule set with 12244 rules.

The ABC model type was trained with an incomplete ABCD rule: the learning rate and the D parameter were omitted, so only the activity dependent terms were left. For all other plastic network models, the rules consisted of five parameters (A,B,C,D and the learning rate). The model called ABCD was optimized with a rule for each connection throughout evolution, and the number of rules was never reduced. This is the same method as was introduced by Najarro and Risi [35] . The model called "500 rules from start" was initialized with just 500 rules, and this number remained unchanged. Each connection of the ANN was randomly assigned to one of the 500 rules at initialization. In the figures summarizing the results (Figures 8,7, 9a, 9b), the rest of the plastic models are simply named after the number of rules they have in their rule sets.

4 RESULTS

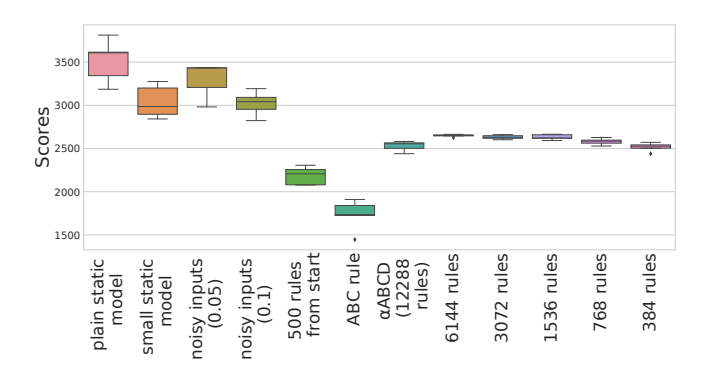

After optimizing for 1,600 generations, we see that the static networks tend to perform much better on the standard morphology that the models are optimized on. Figure 4 presents the training curves of the each of the reduced rule sets, and Figure 5 shows the training curves of the rest of the models. For comparisons with different reinforcement learning algorithms in the standard AntBullet environment see Pardo [38] for baselines where, e.g., Proximal Policy Optimization (PPO) achieves a score of around 3100, Deep Deterministic Policy Gradient (DDPG) scores around 2500, and Advantage Actor-Critic (A2C) scores around 1800.

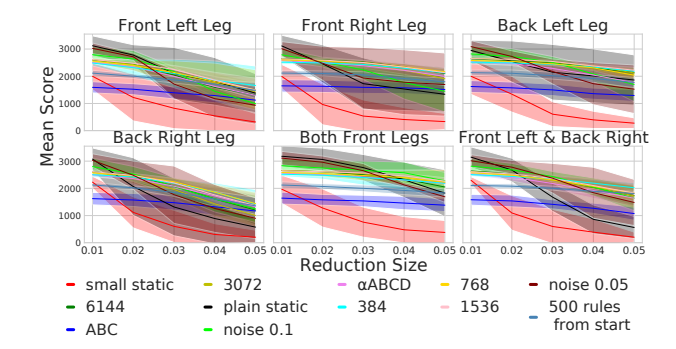

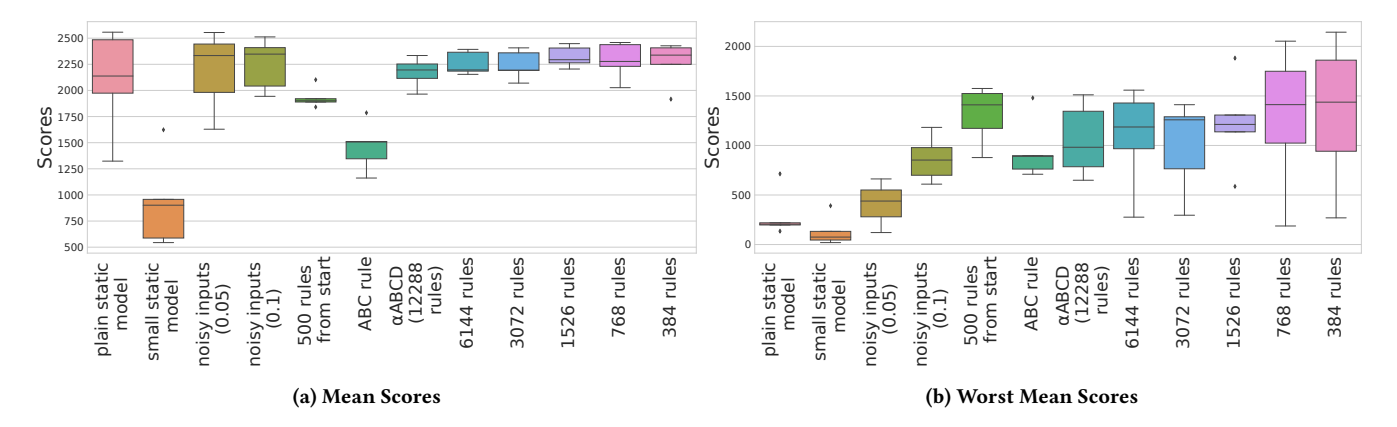

Performances after optimization are summarized in Table 2 and Figures 7,8, 9a, 9b. The reduced rule sets generally end up with a better performance when evaluated the original settings compared with the case where the number of rules is equal to the number of connections throughout. Looking at the box plots in Figure 9a, we see that the all models with reduced rule sets (named by number of rules) have a higher mean performance across all novel settings than

Figure 5: Training Results. For all models, we ran five independent evolutionary runs. The solid lines reflect the average population mean for a given model throughout evolution. The filled areas are the standard deviations of the models' population means. While the static models end up with better scores, all models, except 'ABC', are able to obtain reasonably well-performing populations within 1600 generations.

| Num. Params. | Orig. Score | Novel Score |

|---|---|---|

| 12,288 | 3,513 ±221 | 2, 095±442 |

| 1,536 | $3,040\pm170$ | 923±387 |

| 12,288 | $3,283\pm213$ | $2,172\pm395$ |

| 12,288 | $3,020\pm126$ | $2,256\pm226$ |

| 2,500 | $2,185\pm92$ | $1,931\pm90$ |

| 36,864 | 1,731±158 | $1,462\pm206$ |

| 61,440 | $2,528\pm52$ | $2,173\pm126$ |

| 30,720 | $2,649\pm13$ | $2,259\pm100$ |

| 15,360 | $2,631\pm21$ | $2,244\pm123$ |

| 7,680 | $2,631\pm27$ | $2,323\pm90$ |

| 3,840 | $2,580\pm33$ | $2,286\pm157$ |

| 1,920 | $2,516\pm44$ | $2,267 \pm 186$ |

| 12,288 1,536 12,288 12,288 2,500 36,864 61,440 30,720 15,360 7,680 3,840 |

12,288 |

Table 2: Parameter counts and scores after optimization. Orig. Scores are scores under original morphology settings, and Novel Scores are under the unseen altered settings. Scores reflect the means of 5 models of each model type. A model's score is its mean performance over 100 episodes. Standard deviations are provided next to each mean. The plain static network has the highest score in the original settings, but has a massive decrease in score in the novel settings. All reduced rule sets have higher mean scores with smaller standard deviations in the novel settings, than any static network.

the model with a rule for each connection (named $\alpha ABCD$ in the figures). They also have a higher mean performance than the plain static model, which have a large variability in its performance across the its different evolution runs. The average performance tends to increase as the number of rules decreases. The ABC model and the model that had only 500 rules from the beginning achieved training performances considerably lower than the rest of the models. The static models optimized with noisy inputs obtain similar scores as the reduced rule sets. When the noise added to the input is drawn

from $\mathcal{N}(0,0.05)$ , the best evolution run of the model is, like the static network, better than any of the runs with the reduced rule sets, but its worst run is far worse. When the noise is drawn from $\mathcal{N}(0,0.1)$ the average performance is at the same level as the most reduced rule set and the top performance is a bit better. Overall, while the ceiling of the performances seems to be lower for the plastic networks, the floor is tends to be much higher (except for the case of the ABC-only models). The latter point is especially visible if we look at the worst performances of the runs of each model, as is done in Figure 9b. Here we see that the model trained with 500 learning rules from the beginning of the training is the least likely to get catastrophically bad performances.

4.1 Discovered Rules

To get a visual intuition of what type of learning rules evolved, Figure 6 shows strip plots of the top 10 most followed rules in each of the reduced rule sets. From these, we can see that several different types of rules are found by evolution. First, one type of rule that is found in the top 10 of all the rule sets, have all its updates closely concentrated around zero (e.g., the rule indexed 1020 in the rule set of 1536 rules, or the rule indexed 114 in the set of 384 rules). Second, multiple rules provide relatively strong updates, both positive and negative, but which have a gap around zero (e.g., index 104 in the 384 set, or 837 in the 6144 set). Another apparent type of rule provides both positive and negative updates, but has no gap around zero (e.g., index 734 in the 768 set, or 248 in the 3072 set). Lastly, we see a type of rule, which has the vast majority of its updates to be of a specific sign (e.g, index 240 in set 768 (mostly negative), or 784 in set 3072 (mostly positive)). These observations suggest that many connections are destined to only receive very small updates throughout the episode, and remain largely unchanged compared to some of the stronger updates that other rules provide. Further, the fact that multiple of the rules that have the most connections assigned to them, can provide both positive and negative updates, confirms that two connections following the same rules might end up being updated very differently from each other. Fewer rules do therefore not necessarily make the neural network less expressive.

5 DISCUSSION

In this paper, we build upon the results of Najarro and Risi [35] that showed that an increased robustness can be achieved by evolving plastic network with local Hebbian learning rules instead of evolving ANN connections directly. We show that the robustness can be enhanced even further with an Evolve & Merge approach: throughout optimization we iteratively use a clustering algorithm to merge similar rules, resulting in a smaller rule set at the end of the optimization process. While the plastic networks are not able to get scores as high as the highest scores of the static networks, the plastic networks are less likely to get catastrophically bad scores, when the morphology of the robot is changed slightly. Note, that since the plastic networks are initialized randomly at the beginning of each new episode, a somewhat lower score for plastic networks under the original training setting is to be expected. This is because we cannot expect the initially random network to perform well in the first few time steps when the learning rules have only had little time to adapt the connections. Static networks, on the other hand,

Figure 6: Evolved Rules Examples. Strip plots of values of connection weight changes of the top 10 most followed rules in an optimized version of each of the reduced rule sets. The names of each of the strips seen along the x-axis are the indices of the rules, and in parentheses are the number of updates that the rule made during an episode with the original environment settings. A strip indicates what updates was made by the rules during this episode.

Figure 7: Original Environment Scores. Box plots for the scores of the optimized models in the original environment setting. For each model, the score is averaged over 100 independent episodes. As we optimized 5 models of each type, the box plots show the variation of the scores within a given model type. See Section 3.4 for model descriptions. All static models have better scores than any plastic model in the original setting. The reduced rule sets see no decrease in performance compared to the model with a rule for each connection.

can be optimized to perform well under familiar settings from the very first time step, but suffer from lack of robustness.

Our results indicate that the generalization capabilities tend to improve as the number of rules is reduced. At the end of the Evolve & Merge approach we thus have a model, which has a smaller number of trainable parameters and at the same time generalizes better. Using a simple clustering algorithm as a way of merging the learning rules, we are able to go from initially having 12,288 rules (corresponding to 61,440 trainable parameters) to having just 384 learning rules (corresponding to 1,920 trainable parameters) while improving robustness, all without increasing the number of generations used for optimization.

In order to make fair comparisons between the all the models, we did not allow for more generations for the reduced rule sets here.

Figure 8: Mean scores for all models in all novel environment settings. As leg reductions increase (x-axis), the models tend to perform worse (y-axis). The mean scores are calculated over 100 episodes for each model. The static models have much more variability in their scores than the plastic ones. Further, static networks tend to start with a good score for the smallest reduction, but decrease rapidly as reductions become larger. The plastic networks, on the other hand, have much flatter performance curves.

However, it is likely that if we were to permit more optimization time, we could decrease the number of learning rules even further. The observed robustness cannot just be attributed to a smaller number of trainable parameters, since the smaller static networks that performed at the same level as the plastic networks in the original settings, did not show robust performances in altered settings.

The static network optimized with noisy inputs, on the other hand, had average performances across all novel settings and optimization runs, which were very similar to that of the best plastic network model. Noisy inputs have long been used for data augmentation in supervised tasks, such as speech recognition, to gain more robust models [10]. Adding noise to inputs has also been used as a way to get robust representations in autoencoders [61]. However, using noise in training has been explored to a lesser extend in reinforcement learning-type frameworks [30]. In this paper, the static networks with noisy input provide a strong baseline to compare the

Figure 9: Generalisation Performance. Box plots for the (a) mean scores and (b) worst mean scores of the optimized models across all novel environment setting. For each model, the score is averaged over 100 independent episodes. The worst mean scores shown in (b) shows how bad a model is at its worst across 100 episodes. The worst scores of the plastic networks tend to be much better than the worst scores of the static one. This shows that plastic models are at less risk of getting a catastrophically bad score where the robot is only barely able to move at all. As in Figure 7, the box plots show the variation of the scores within a given model type.

reduced plastic networks to in terms of their robustness, and as we can see, the plastic networks achieve similar performances. However, in order to achieve such results by applying noise to the inputs to static networks, we need to carefully pick the correct amount of noise to apply; too little noise, and the results will be indistinguishable from the plain static approach, and too much noise is likely to hinder progress completely. Using the plastic approach, the parameters will regulate themselves, and with the Evolve & Merge method we have the added benefit of ending up with a smaller number of trainable parameters. The similar performances between static networks with noisy inputs and plastic networks are interesting and will have to be explored further in the future. The rather naive approach to using noise of simply adding it on top of the input is unlikely to be a promising method to improve upon to make even more robust models. It is, on the other hand, easier to imagine improvements to the learning rules (see section 5.1).

The evolved learning rules used here are inspired by Hebbian learning. However, the model which used only the activity dependent terms of the parameterized rule (the ABC terms), failed to perform well, making it clear that the stability provided by the constant terms are necessary for the locomotion task used here.

The results also showed that starting from a complete rule set, and then merging the rules throughout optimization achieved superior results compared to starting with a small number of rules. This draws parallels to the recent "Lottery Ticket Hypothesis" [17] that builds upon the notion that a trained neural network can most often be pruned drastically, resulting in a much smaller network that performs just as well as the unpruned network. However, if one was to start training from scratch with a randomly initialized small network of the same size as the pruned network, the training is likely to be much more difficult, and one is unlikely to get the same performance. The hypothesis states that when initializing a large network, it is likely that one of the combinatorially many sub-networks inside the full network will be "easily trainable" for the given problem; we are more likely to have a "winning ticket" within the random initialization, since we have so many of them.

The number of sub-networks - or potential winning tickets - dwindle rapidly if we decrease the size of the full network. To the best of our knowledge, the methods used for finding winning tickets [17, 67] have not yet been explored in the case where the optimization method is ES, and much less in the context of indirect encoding. Our results hint that the Lottery Ticket Hypothesis might also hold in the indirect encoding setting that we employ here. Further investigations into when the Lottery Ticket Hypothesis asserts itself in indirect encoding schemes might provide valuable insights for future approaches.

Deciding how to evaluate one's models on OOD circumstances can seem a bit arbitrary, as countless different changes to a simulated environment can be made. Several previous studies have focused on the ability of a robot show robustness in the face of a severe leg injury [9, 11, 35]. Here we opted for slight variations on leg lengths instead, partly to highlight how quickly neural network models can break completely. For many of the models, a severe injury is not required in order to put a well-performing model at risk of malfunctioning. Had we chosen a larger range of reductions, it likely that the results would have been tilted to favor the plastic networks more, whereas a smaller range would have had the opposite effect. The issue of deciding how to evaluate generalization capabilities of a model speaks into a larger discussion of overfitting in artificial agents [66]. In some sense, the static networks do what we ask them to more so than the plastic ones; when we optimize the networks we implicitly ask them to overfit as much as possible to the problem that we present them. In the approach presented in this paper, we explicitly optimize for one setting, but hope that our models will also be able to perform in different settings. Of course, several already established meta-learning frameworks attempt to create models that learn how to learn to solve new tasks [16]. However, such approaches also require us to make somewhat arbitrary choices regarding which types of different tasks we should expose our models to during training. For this reason, it is still useful to develop methods that are intrinsically as robust as possible. Such

methods might be promising candidates for use in frameworks such as in Model-Agnostic Meta-Learning (MAML) [16].

5.1 Future Directions

We showed that it is indeed possible to evolve relatively wellperforming models with an increased robustness to morphology changes, while at the same time having fewer trainable parameters; this approach opens up interesting future research directions.

As mentioned in section 2.1, an important concept within Hebbian theory is that of cell assemblies, which after repeated exposure to stimuli can become increasingly correlated and able to perform pattern completion. In this theoretical framework that have been supported by a wide array of evidence[24, 34, 51](for a review, see [50]), recurrent connections between the neurons are often assumed. In the current study, we have only used simple feedforward networks with two hidden layers. Applying the Evolve & Merge approach to local learning rules for more advanced neural networks with recurrent connections will be an interesting line of research in the future. More generally, the Evolve & Merge approach also lend itself well to be combined with methods that evolves the neural architecture of the network that the learning rules apply to. An example of this is the NEAT algorithm [45, 47, 56].

It will also be interesting to combine this indirect encoding method with a meta-learning framework such as MAML [16]. Models with few trainable parameters, controlling a large, expressive ANN might intuitively be an ideal candidate for few-shot learning in the MAML framework.

Further, while local learning rules such as spike-time dependent plasticity are important drivers of change in synapses in the brain, synaptic plasticity is also affected by neuromodulators [12, 15]. Extending the evolved learning rules to be able to take into account reward signals could greatly improve the model's ability to respond to changes in the environment in an adaptive manner, and it is something we look forward to implementing in future studies. Something similar to this has been explored in other approaches to plastic networks [2, 4, 13, 53], and we expect this to also be a beneficial addition to our approach.

While we have evolved a set of relatively simple learning rules, our approach could just as well be used in conjuction with more complicated rules, e.g., rules with more parameters [8], or rules that produce non-linear outputs of the inputs [4, 37]. With more expressive rules, it might be possible to limit the number of rules and trainable parameters even further.

On a practical note, having few trainable parameters opens up for the possibility of using more sophisticated optimization methods. For example, the Covariance Matrix Adaptation Evolution Strategy [25] has recently been used in several studies to obtain impressive results [23, 59], but it quickly becomes infeasible to use if the number of trainable parameters is too large.

6 CONCLUSION

If the environment one wishes to deploy an artificial agent in is guaranteed to be identical to the training environment, one might be better off evolving a static network to control the agent. However, if the aim is to have artificial agents act in complex real-world environments, such guarantees cannot be made. The evolution of

plastic networks that can better adapt to changes, is therefore an interesting prospect. The results shown in this paper contribute to the development of robust plastic networks. We show that it is simple to achieve a fairly small rule set and that as the number of rules decreases, the robustness of the model increases. This can be achieved with no additional optimization time. We believe that there are several exciting ways to continue this line of research, and we hope that the results presented here will inspire additional studies in evolved plastic artificial neural networks.

ACKNOWLEDGMENTS

This project was funded by a DFF-Research Project1 grant (9131- 00042B).

REFERENCES

- [1] Larry F Abbott and Sacha B Nelson. 2000. Synaptic plasticity: taming the beast. Nature neuroscience 3, 11 (2000), 1178–1183.

- [2] Eseoghene Ben-Iwhiwhu, Pawel Ladosz, Jeffery Dick, Wen-Hua Chen, Praveen Pilly, and Andrea Soltoggio. 2020. Evolving inborn knowledge for fast adaptation in dynamic POMDP problems. In Proceedings of the 2020 Genetic and Evolutionary Computation Conference. 280–288.

- [3] Peter J Bentley and Sanjeev Kumar. 1999. Three ways to grow designs: A comparison of embryogenies for an evolutionary design problem.. In GECCO, Vol. 99. 35–43.

- [4] Paul Bertens and Seong-Whan Lee. 2019. Network of Evolvable Neural Units: Evolving to Learn at a Synaptic Level. arXiv preprint arXiv:1912.07589 (2019).

- [5] Stephen M Breedlove and Neil V Watson. 2013. Biological psychology: An introduction to behavioral, cognitive, and clinical neuroscience. Sinauer Associates.

- [6] György Buzsáki. 2010. Neural syntax: cell assemblies, synapsembles, and readers. Neuron 68, 3 (2010), 362–385.

- [7] Christian Carvelli, Djordje Grbic, and Sebastian Risi. 2020. Evolving hypernetworks for game-playing agents. In Proceedings of the 2020 Genetic and Evolutionary Computation Conference Companion. 71–72.

- [8] David J Chalmers. 1991. The evolution of learning: An experiment in genetic connectionism. In Connectionist Models. Elsevier, 81–90.

- [9] Cédric Colas, Vashisht Madhavan, Joost Huizinga, and Jeff Clune. 2020. Scaling map-elites to deep neuroevolution. In Proceedings of the 2020 Genetic and Evolutionary Computation Conference. 67–75.

- [10] Xiaodong Cui, Vaibhava Goel, and Brian Kingsbury. 2015. Data augmentation for deep neural network acoustic modeling. IEEE/ACM Transactions on Audio, Speech, and Language Processing 23, 9 (2015), 1469–1477.

- [11] Antoine Cully, Jeff Clune, Danesh Tarapore, and Jean-Baptiste Mouret. 2015. Robots that can adapt like animals. Nature 521, 7553 (2015), 503–507.

- [12] Peter Dayan. 2012. Twenty-five lessons from computational neuromodulation. Neuron 76, 1 (2012), 240–256.

- [13] Kai Olav Ellefsen, Jean-Baptiste Mouret, and Jeff Clune. 2015. Neural modularity helps organisms evolve to learn new skills without forgetting old skills. PLoS Comput Biol 11, 4 (2015), e1004128.

- [14] Benjamin Ellenberger. 2018–2019. PyBullet Gymperium. https://github.com/ benelot/pybullet-gym.

- [15] Daniel E Feldman. 2012. The spike-timing dependence of plasticity. Neuron 75, 4 (2012), 556–571.

- [16] Chelsea Finn, Pieter Abbeel, and Sergey Levine. 2017. Model-agnostic metalearning for fast adaptation of deep networks. In International Conference on Machine Learning. PMLR, 1126–1135.

- [17] Jonathan Frankle and Michael Carbin. 2018. The lottery ticket hypothesis: Finding sparse, trainable neural networks. arXiv preprint arXiv:1803.03635 (2018).

- [18] Faustino Gomez, Jan Koutník, and Jürgen Schmidhuber. 2012. Compressed network complexity search. In International Conference on Parallel Problem Solving from Nature. Springer, 316–326.

- [19] William T Greenough and James E Black. 2013. Induction of brain structure by experience: Substrates. In Developmental behavioral neuroscience: The Minnesota symposia on child psychology. 155.

- [20] Frederic Gruau, Darrell Whitley, and Larry Pyeatt. 1996. A comparison between cellular encoding and direct encoding for genetic neural networks. In Proceedings of the 1st annual conference on genetic programming. 81–89.

- [21] David Ha. 2017. Evolving Stable Strategies. blog.otoro.net (2017). http://blog. otoro.net/2017/11/12/evolving-stable-strategies/

- [22] David Ha, Andrew Dai, and Quoc V Le. 2016. Hypernetworks. arXiv preprint arXiv:1609.09106 (2016).

-

[23] David Ha and Jürgen Schmidhuber. 2018. World models. arXiv preprint arXiv:1803.10122 (2018).

-

[24] Robert E Hampson and Sam A Deadwyler. 2009. Neural population recording in behaving animals: Constituents of a neural code for behavioral decisions. (2009).

- [25] Nikolaus Hansen. 2006. The CMA evolution strategy: a comparing review. Towards a new evolutionary computation (2006), 75–102.

- [26] Geoffrey E Hinton and Steven J Nowlan. 1996. How learning can guide evolution. Adaptive individuals in evolving populations: models and algorithms 26 (1996), 447–454.

- [27] Kari L Hoffmann. 2009. A face in the crowd: Which groups of neurons process face stimuli, and how do they interact? (2009).

- [28] Christian Holscher. 2008. How could populations of neurons encode information? In Information Processing by Neuronal Populations. Cambridge University Press, 3–20.

- [29] Michael R Hunsaker and Raymond P Kesner. 2013. The operation of pattern separation and pattern completion processes associated with different attributes or domains of memory. Neuroscience & Biobehavioral Reviews 37, 1 (2013), 36–58.

- [30] Maximilian Igl, Kamil Ciosek, Yingzhen Li, Sebastian Tschiatschek, Cheng Zhang, Sam Devlin, and Katja Hofmann. 2019. Generalization in reinforcement learning with selective noise injection and information bottleneck. arXiv preprint arXiv:1910.12911 (2019).

- [31] Niels Justesen, Ruben Rodriguez Torrado, Philip Bontrager, Ahmed Khalifa, Julian Togelius, and Sebastian Risi. 2018. Illuminating Generalization in Deep Reinforcement Learning through Procedural Level Generation. In NeurIPS Workshop on Deep Reinforcement Learning.

- [32] Jan Koutnik, Faustino Gomez, and Jürgen Schmidhuber. 2010. Evolving neural networks in compressed weight space. In Proceedings of the 12th annual conference on Genetic and evolutionary computation. 619–626.

- [33] Anders Lansner. 2009. Associative memory models: from the cell-assembly theory to biophysically detailed cortex simulations. Trends in neurosciences 32, 3 (2009), 178–186.

- [34] Jae-eun Kang Miller, Inbal Ayzenshtat, Luis Carrillo-Reid, and Rafael Yuste. 2014. Visual stimuli recruit intrinsically generated cortical ensembles. Proceedings of the National Academy of Sciences 111, 38 (2014), E4053–E4061.

- [35] Elias Najarro and Sebastian Risi. 2020. Meta-learning through hebbian plasticity in random networks. Advances in Neural Information Processing Systems 33 (2020).

- [36] Yael Niv, Daphna Joel, Isaac Meilijson, and Eytan Ruppin. 2002. Evolution of reinforcement learning in uncertain environments: A simple explanation for complex foraging behaviors. (2002).

- [37] Jeff Orchard and Lin Wang. 2016. The evolution of a generalized neural learning rule. In 2016 International Joint Conference on Neural Networks (IJCNN). IEEE, 4688–4694.

- [38] Fabio Pardo. 2020. Tonic: A Deep Reinforcement Learning Library for Fast Prototyping and Benchmarking. arXiv preprint arXiv:2011.07537 (2020).

- [39] F. Pedregosa, G. Varoquaux, A. Gramfort, V. Michel, B. Thirion, O. Grisel, M. Blondel, P. Prettenhofer, R. Weiss, V. Dubourg, J. Vanderplas, A. Passos, D. Cournapeau, M. Brucher, M. Perrot, and E. Duchesnay. 2011. Scikit-learn: Machine Learning in Python. Journal of Machine Learning Research 12 (2011), 2825–2830.

- [40] Jonathan D Power and Bradley L Schlaggar. 2017. Neural plasticity across the lifespan. Wiley Interdisciplinary Reviews: Developmental Biology 6, 1 (2017), e216.

- [41] Trevor D Price, Anna Qvarnström, and Darren E Irwin. 2003. The role of phenotypic plasticity in driving genetic evolution. Proceedings of the Royal Society of London. Series B: Biological Sciences 270, 1523 (2003), 1433–1440.

- [42] J Andrew Pruszynski and Joel Zylberberg. 2019. The language of the brain: real-world neural population codes. Current opinion in neurobiology 58 (2019), 30–36.

- [43] Friedemann Pulvermüller, Max Garagnani, and Thomas Wennekers. 2014. Thinking in circuits: toward neurobiological explanation in cognitive neuroscience. Biological cybernetics 108, 5 (2014), 573–593.

- [44] Sebastian Risi, Charles E Hughes, and Kenneth O Stanley. 2010. Evolving plastic neural networks with novelty search. Adaptive Behavior 18, 6 (2010), 470–491.

- [45] Sebastian Risi and Kenneth Stanley. 2011. Enhancing ES-HyperNEAT to Evolve More Complex Regular Neural Networks. In Proceedings of the Genetic and Evolutionary Computation Conference (GECCO 2011). New York, NY: ACM. http://eplex. cs. ucf. edu/papers/risi_gecco11. pdf.

- [46] Sebastian Risi and Kenneth O Stanley. 2010. Indirectly encoding neural plasticity as a pattern of local rules. In International Conference on Simulation of Adaptive Behavior. Springer, 533–543.

- [47] Sebastian Risi and Kenneth O Stanley. 2012. An enhanced hypercube-based encoding for evolving the placement, density, and connectivity of neurons. Artificial life 18, 4 (2012), 331–363.

- [48] Sebastian Risi and Kenneth O Stanley. 2013. Confronting the challenge of learning a flexible neural controller for a diversity of morphologies. In Proceedings of the 15th annual conference on Genetic and evolutionary computation. 255–262.

- [49] Tim Salimans, Jonathan Ho, Xi Chen, Szymon Sidor, and Ilya Sutskever. 2017. Evolution strategies as a scalable alternative to reinforcement learning. arXiv preprint arXiv:1703.03864 (2017).

-

[50] Shreya Saxena and John P Cunningham. 2019. Towards the neural population doctrine. Current opinion in neurobiology 55 (2019), 103–111.

-

[51] Jermyn Z See, Craig A Atencio, Vikaas S Sohal, and Christoph E Schreiner. 2018. Coordinated neuronal ensembles in primary auditory cortical columns. Elife 7 (2018), e35587.

- [52] Emilie C Snell-Rood. 2013. An overview of the evolutionary causes and consequences of behavioural plasticity. Animal Behaviour 85, 5 (2013), 1004–1011.

- [53] Andrea Soltoggio, John A Bullinaria, Claudio Mattiussi, Peter Dürr, and Dario Floreano. 2008. Evolutionary advantages of neuromodulated plasticity in dynamic, reward-based scenarios. In Proceedings of the 11th international conference on artificial life (Alife XI). MIT Press, 569–576.

- [54] Andrea Soltoggio, Kenneth O Stanley, and Sebastian Risi. 2018. Born to learn: the inspiration, progress, and future of evolved plastic artificial neural networks. Neural Networks 108 (2018), 48–67.

- [55] Sen Song, Kenneth D Miller, and Larry F Abbott. 2000. Competitive Hebbian learning through spike-timing-dependent synaptic plasticity. Nature neuroscience 3, 9 (2000), 919–926.

- [56] Kenneth O Stanley, Bobby D Bryant, and Risto Miikkulainen. 2003. Evolving adaptive neural networks with and without adaptive synapses. In The 2003 Congress on Evolutionary Computation, 2003. CEC'03., Vol. 4. IEEE, 2557–2564.

- [57] Kenneth O Stanley and Risto Miikkulainen. 2003. A taxonomy for artificial embryogeny. Artificial Life 9, 2 (2003), 93–130.

- [58] Joan Stiles. 2000. Neural plasticity and cognitive development. Developmental neuropsychology 18, 2 (2000), 237–272.

- [59] Yujin Tang, Duong Nguyen, and David Ha. 2020. Neuroevolution of selfinterpretable agents. In Proceedings of the 2020 Genetic and Evolutionary Computation Conference. 414–424.

- [60] Paul Tonelli and Jean-Baptiste Mouret. 2013. On the Relationships between Generative Encodings, Regularity, and Learning Abilities when Evolving Plastic Artificial Neural Networks. PLOS ONE 8, 11 (11 2013), 1–12. https://doi.org/10. 1371/journal.pone.0079138

- [61] Pascal Vincent, Hugo Larochelle, Yoshua Bengio, and Pierre-Antoine Manzagol. 2008. Extracting and composing robust features with denoising autoencoders. In Proceedings of the 25th international conference on Machine learning. 1096–1103.

- [62] Anil Yaman, Giovanni Iacca, Decebal Constantin Mocanu, George Fletcher, and Mykola Pechenizkiy. 2019. Learning with delayed synaptic plasticity. In Proceedings of the Genetic and Evolutionary Computation Conference. 152–160.

- [63] Anthony M Zador. 2019. A critique of pure learning and what artificial neural networks can learn from animal brains. Nature communications 10, 1 (2019), 1–7.

- [64] Amy Zhang, Nicolas Ballas, and Joelle Pineau. 2018. A dissection of overfitting and generalization in continuous reinforcement learning. arXiv preprint arXiv:1806.07937 (2018).

- [65] Chiyuan Zhang, Oriol Vinyals, Remi Munos, and Samy Bengio. 2018. A study on overfitting in deep reinforcement learning. arXiv preprint arXiv:1804.06893 (2018).

- [66] Chenyang Zhao, Olivier Sigaud, Freek Stulp, and Timothy M Hospedales. 2019. Investigating generalisation in continuous deep reinforcement learning. arXiv preprint arXiv:1902.07015 (2019).

- [67] Hattie Zhou, Janice Lan, Rosanne Liu, and Jason Yosinski. 2019. Deconstructing lottery tickets: Zeros, signs, and the supermask. arXiv preprint arXiv:1905.01067 (2019).