title: Evolving Artificial Neural Networks

source_pdf: /home/lzr/code/thesis-reading/Neuroevolution/Chapter 3/Evolving Artificial Neural Networks/Evolving Artificial Neural Networks.pdf

Evolving Artificial Neural Networks

XIN YAO, SENIOR MEMBER, IEEE

Invited Paper

Learning and evolution are two fundamental forms of adaptation. There has been a great interest in combining learning and evolution with artificial neural networks (ANN's) in recent years. This paper: 1) reviews different combinations between ANN's and evolutionary algorithms (EA's), including using EA's to evolve ANN connection weights, architectures, learning rules, and input features; 2) discusses different search operators which have been used in various EA's; and 3) points out possible future research directions. It is shown, through a considerably large literature review, that combinations between ANN's and EA's can lead to significantly better intelligent systems than relying on ANN's or EA's alone.

Keywords—Evolutionary computation, intelligent systems, neural networks.

I. INTRODUCTION

Evolutionary artificial neural networks (EANN's) refer to a special class of artificial neural networks (ANN's) in which evolution is another fundamental form of adaptation in addition to learning [1]–[5]. Evolutionary algorithms (EA's) are used to perform various tasks, such as connection weight training, architecture design, learning rule adaptation, input feature selection, connection weight initialization, rule extraction from ANN's, etc. One distinct feature of EANN's is their adaptability to a dynamic environment. In other words, EANN's can adapt to an environment as well as changes in the environment. The two forms of adaptation, i.e., evolution and learning in EANN's, make their adaptation to a dynamic environment much more effective and efficient. In a broader sense, EANN's can be regarded as a general framework for adaptive systems, i.e., systems that can change their architectures and learning rules appropriately without human intervention.

This paper is most concerned with exploring possible benefits arising from combinations between ANN's and EA's. Emphasis is placed on the design of intelligent systems based on ANN's and EA's. Other combinations

Manuscript received July 10, 1998; revised February 18, 1999. This work was supported in part by the Australian Research Council through its small grant scheme.

The author is with the School of Computer Science, University of Birmingham, Edgbaston, Birmingham B15 2TT U.K. (e-mail: xin@cs.bham.ac.uk).

Publisher Item Identifier S 0018-9219(99)06906-6.

between ANN's and EA's for combinatorial optimization will be mentioned but not discussed in detail.

A. Artificial Neural Networks

1) Architectures: An ANN consists of a set of processing elements, also known as neurons or nodes, which are interconnected. It can be described as a directed graph in which each node i performs a transfer function $f_i$ of the form

$$y_i = f_i \left( \sum_{j=1}^n w_{ij} x_j - \theta_i \right) \tag{1}$$

where $y_i$ is the output of the node i, $x_j$ is the jth input to the node, and $w_{ij}$ is the connection weight between nodes i and j. $\theta_i$ is the threshold (or bias) of the node. Usually, $f_i$ is nonlinear, such as a heaviside, sigmoid, or Gaussian function.

ANN's can be divided into feedforward and recurrent classes according to their connectivity. An ANN is feedforward if there exists a method which numbers all the nodes in the network such that there is no connection from a node with a large number to a node with a smaller number. All the connections are from nodes with small numbers to nodes with larger numbers. An ANN is recurrent if such a numbering method does not exist.

In (1), each term in the summation only involves one input $x_j$ . High-order ANN's are those that contain high-order nodes, i.e., nodes in which more than one input are involved in some of the terms of the summation. For example, a second-order node can be described as

$$y_i = f_i \left( \sum_{j,k=1}^n w_{ijk} x_j x_k - \theta_i \right)$$

where all the symbols have similar definitions to those in (1).

The architecture of an ANN is determined by its topological structure, i.e., the overall connectivity and transfer function of each node in the network.

-

- Generate the initial population G(0) at random, and set i = 0;

-

- REPEAT

- (a) Evaluate each individual in the population;

- (b) Select parents from G(i) based on their fitness in G(i);

- (c) Apply search operators to parents and produce offspring which form G(i+1);

- (d) i = i + 1;

-

- UNTIL 'termination criterion' is satisfied

Fig. 1. A general framework of EA's.

2) Learning in ANN's: Learning in ANN's is typically accomplished using examples. This is also called "training" in ANN's because the learning is achieved by adjusting the connection weights1 in ANN's iteratively so that trained (or learned) ANN's can perform certain tasks. Learning in ANN's can roughly be divided into supervised, unsupervised, and reinforcement learning. Supervised learning is based on direct comparison between the actual output of an ANN and the desired correct output, also known as the target output. It is often formulated as the minimization of an error function such as the total mean square error between the actual output and the desired output summed over all available data. A gradient descent-based optimization algorithm such as backpropagation (BP) [6] can then be used to adjust connection weights in the ANN iteratively in order to minimize the error. Reinforcement learning is a special case of supervised learning where the exact desired output is unknown. It is based only on the information of whether or not the actual output is correct. Unsupervised learning is solely based on the correlations among input data. No information on "correct output" is available for learning.

The essence of a learning algorithm is the learning rule, i.e., a weight-updating rule which determines how connection weights are changed. Examples of popular learning rules include the delta rule, the Hebbian rule, the anti-Hebbian rule, and the competitive learning rule [7].

More detailed discussion of ANN's can be found in [7].

B. EA's

EA's refer to a class of population-based stochastic search algorithms that are developed from ideas and principles of natural evolution. They include evolution strategies (ES) [8], [9], evolutionary programming (EP) [10], [11], [12], and genetic algorithms (GA's) [13], [14]. One important feature of all these algorithms is their population-based search strategy. Individuals in a population compete and exchange information with each other in order to perform certain tasks. A general framework of EA's can be described by Fig. 1.

EA's are particularly useful for dealing with large complex problems which generate many local optima. They are less likely to be trapped in local minima than traditional

$^{1}$ Thresholds (biases) can be viewed as connection weights with fixed input -1.

gradient-based search algorithms. They do not depend on gradient information and thus are quite suitable for problems where such information is unavailable or very costly to obtain or estimate. They can even deal with problems where no explicit and/or exact objective function is available. These features make them much more robust than many other search algorithms. Fogel [15] and Bäck et al. [16] give a good introduction to various evolutionary algorithms for optimization.

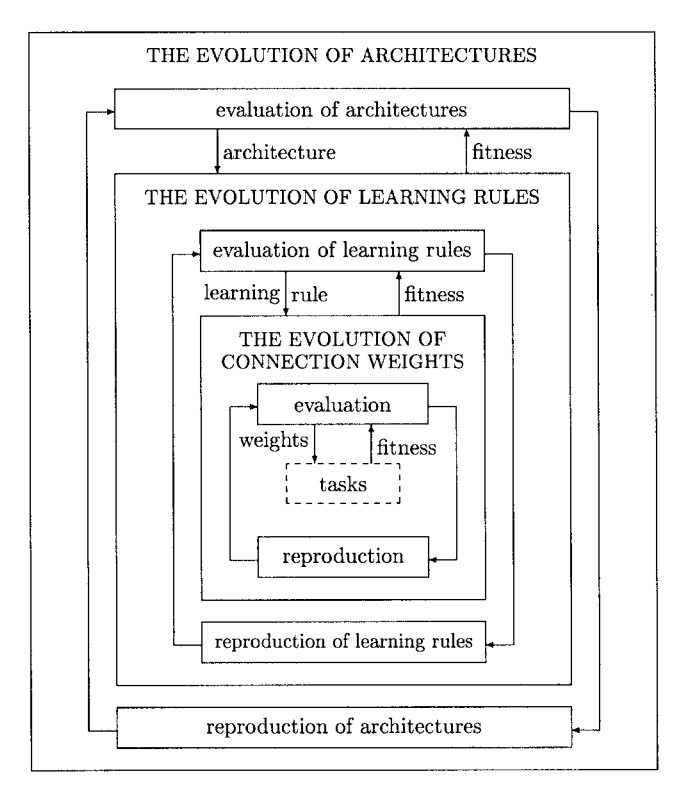

C. Evolution in EANN's

Evolution has been introduced into ANN's at roughly three different levels: connection weights; architectures; and learning rules. The evolution of connection weights introduces an adaptive and global approach to training, especially in the reinforcement learning and recurrent network learning paradigm where gradient-based training algorithms often experience great difficulties. The evolution of architectures enables ANN's to adapt their topologies to different tasks without human intervention and thus provides an approach to automatic ANN design as both ANN connection weights and structures can be evolved. The evolution of learning rules can be regarded as a process of "learning to learn" in ANN's where the adaptation of learning rules is achieved through evolution. It can also be regarded as an adaptive process of automatic discovery of novel learning rules.

D. Organization of the Article

The remainder of this paper is organized as follows. Section II discusses the evolution of connection weights. The aim is to find a near-optimal set of connection weights globally for an ANN with a fixed architecture using EA's. Various methods of encoding connection weights and different search operators used in EA's will be discussed. Comparisons between the evolutionary approach and conventional training algorithms, such as BP, will be made. In general, no single algorithm is an overall winner for all kinds of networks. The best training algorithm is problem dependent.

Section III is devoted to the evolution of architectures, i.e., finding a near-optimal ANN architecture for the tasks at hand. It is known that the architecture of an ANN determines the information processing capability of the ANN. Architecture design has become one of the most

-

- Decode each individual (genotype) in the current generation into a set of connection weights and construct a corresponding ANN with the weights.

-

- Evaluate each ANN by computing its total mean square error between actual and target outputs. (Other error functions can also be used.) The fitness of an individual is determined by the error. The higher the error, the lower the fitness. The optimal mapping from the error to the fitness is problem dependent. A regularization term may be included in the fitness function to penalize large weights.

-

- Select parents for reproduction based on their fitness.

-

- Apply search operators, such as crossover and/or mutation, to parents to generate offspring, which form the next generation.

Fig. 2. A typical cycle of the evolution of connection weights.

important tasks in ANN research and application. Two most important issues in the evolution of architectures, i.e., the representation and search operators used in EA's, will be addressed in this section. It is shown that evolutionary algorithms relying on crossover operators do not perform very well in searching for a near-optimal ANN architecture. Reasons and empirical results will be given in this section to explain why this is the case.

If imagining ANN's connection weights and architectures as their "hardware," it is easier to understand the importance of the evolution of ANN's "software"—learning rules. Section IV addresses the evolution of learning rules in ANN's and examines the relationship between learning and evolution, e.g., how learning guides evolution and how learning itself evolves. It is demonstrated that an ANN's learning ability can be improved through evolution. Although research on this topic is still in its early stages, further studies will no doubt benefit research in ANN's and machine learning as a whole.

Section V summarizes some other forms of combinations between ANN's and EA's. They do not intend to be exhaustive, simply indicative. They demonstrate the breadth of possible combinations between ANN's and EA's.

Section VI first describes a general framework of EANN's in terms of adaptive systems where interactions among three levels of evolution are considered. The framework provides a common basis for comparing different EANN models. The section then gives a brief summary of the paper and concludes with a few remarks.

II. THE EVOLUTION OF CONNECTION WEIGHTS

Weight training in ANN's is usually formulated as minimization of an error function, such as the mean square error between target and actual outputs averaged over all examples, by iteratively adjusting connection weights. Most training algorithms, such as BP and conjugate gradient algorithms [7], [17]–[19], are based on gradient descent. There have been some successful applications of BP in various areas [20]–[22], but BP has drawbacks due to its use of gradient descent [23], [24]. It often gets trapped in a local minimum of the error function and is incapable of finding

a global minimum if the error function is multimodal and/or nondifferentiable. A detailed review of BP and other learning algorithms can be found in [7], [17], and [25].

One way to overcome gradient-descent-based training algorithms' shortcomings is to adopt EANN's, i.e., to formulate the training process as the evolution of connection weights in the environment determined by the architecture and the learning task. EA's can then be used effectively in the evolution to find a near-optimal set of connection weights globally without computing gradient information. The fitness of an ANN can be defined according to different needs. Two important factors which often appear in the fitness (or error) function are the error between target and actual outputs and the complexity of the ANN. Unlike the case in gradient-descent-based training algorithms, the fitness (or error) function does not have to be differentiable or even continuous since EA's do not depend on gradient information. Because EA's can treat large, complex, nondifferentiable, and multimodal spaces, which are the typical case in the real world, considerable research and application has been conducted on the evolution of connection weights [24], [26]–[112].

The evolutionary approach to weight training in ANN's consists of two major phases. The first phase is to decide the representation of connection weights, i.e., whether in the form of binary strings or not. The second one is the evolutionary process simulated by an EA, in which search operators such as crossover and mutation have to be decided in conjunction with the representation scheme. Different representations and search operators can lead to quite different training performance. A typical cycle of the evolution of connection weights is shown in Fig. 2. The evolution stops when the fitness is greater than a predefined value (i.e., the training error is smaller than a certain value) or the population has converged.

A. Binary Representation

The canonical genetic algorithm (GA) [13], [14] has always used binary strings to encode alternative solutions, often termed chromosomes. Some of the early work in evolving ANN connection weights followed this approach

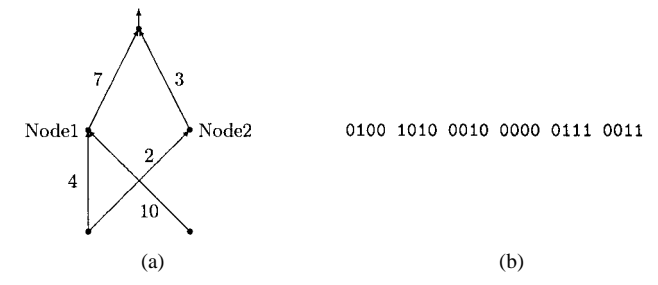

Fig. 3. (a) An ANN with connection weights shown. (b) A binary representation of the weights, assuming that each weight is represented by four bits.

[24], [26], [28], [37], [38], [41], [52], [53]. In such a representation scheme, each connection weight is represented by a number of bits with certain length. An ANN is encoded by concatenation of all the connection weights of the network in the chromosome.

A heuristic concerning the order of the concatenation is to put connection weights to the same hidden/output node together. Hidden nodes in ANN's are in essence feature extractors and detectors. Separating inputs to the same hidden node far apart in the binary representation would increase the difficulty of constructing useful feature detectors because they might be destroyed by crossover operators. It is generally very difficult to apply crossover operators in evolving connection weights since they tend to destroy feature detectors found during the evolutionary process.

Fig. 3 gives an example of the binary representation of an ANN whose architecture is predefined. Each connection weight in the ANN is represented by 4 bits, the whole ANN is represented by 24 bits where weight 0000 indicates no connection between two nodes.

The advantages of the binary representation lie in its simplicity and generality. It is straightforward to apply classical crossover (such as one-point or uniform crossover) and mutation to binary strings. There is little need to design complex and tailored search operators. The binary representation also facilitates digital hardware implementation of ANN's since weights have to be represented in terms of bits in hardware with limited precision.

There are several encoding methods, such as uniform, Gray, exponential, etc., that can be used in the binary representation. They encode real values using different ranges and precisions given the same number of bits. However, a tradeoff between representation precision and the length of chromosome often has to be made. If too few bits are used to represent each connection weight, training might fail because some combinations of real-valued connection weights cannot be approximated with sufficient accuracy by discrete values. On the other hand, if too many bits are used, chromosomes representing large ANN's will become extremely long and the evolution in turn will become very inefficient.

One of the problems faced by evolutionary training of ANN's is the permutation problem [32], [113], also known as the competing convention problem. It is caused by the

Fig. 4. (a) An ANN which is equivalent to that given in Fig. 3(a). (b) Its binary representation under the same representation scheme.

many-to-one mapping from the representation (genotype) to the actual ANN (phenotype) since two ANN's that order their hidden nodes differently in their chromosomes will still be equivalent functionally. For example, ANN's shown by Figs. 3(a) and 4(a) are equivalent functionally, but they have different chromosomes as shown by Figs. 3(b) and 4(b). In general, any permutation of the hidden nodes will produce functionally equivalent ANN's with different chromosome representations. The permutation problem makes crossover operator very inefficient and ineffective in producing good offspring.

B. Real-Number Representation

There have been some debates on the cardinality of the genotype alphabet. Some have argued that the minimal cardinality, i.e., the binary representation, might not be the best [48], [114]. Formal analysis of nonstandard representations and operators based on the concept of equivalent classes [115], [116] has given representations other than k ary strings a more solid theoretical foundation. Real numbers have been proposed to represent connection weights directly, i.e., one real number per connection weight [27], [29], [30], [48], [63]–[65], [74], [95], [96], [102], [110], [111], [117], [118]. For example, a real-number representation of the ANN given by Fig. 3(a) could be (4.0, 10.0, 2.0, 0.0, 7.0, 3.0).

As connection weights are represented by real numbers, each individual in an evolving population will be a real vector. Traditional binary crossover and mutation can no longer be used directly. Special search operators have to be designed. Montana and Davis [27] defined a large number of tailored genetic operators which incorporated many heuristics about training ANN's. The idea was to retain useful feature detectors formed around hidden nodes during evolution. Their results showed that the evolutionary training approach was much faster than BP for the problems they considered. Bartlett and Downs [30] also demonstrated that the evolutionary approach was faster and had better scalability than BP.

A natural way to evolve real vectors would be to use EP or ES since they are particularly well-suited for treating continuous optimization. Unlike GA's, the primary search operator in EP and ES is mutation. One of the major advantages of using mutation-based EA's is that they can reduce the negative impact of the permutation problem. Hence the evolutionary process can be more efficient. There

have been a number of successful examples of applying EP or ES to the evolution of ANN connection weights [29], [63]–[65], [67], [68], [95], [96], [102], [106], [111], [117], [119], [120]. In these examples, the primary search operator has been Gaussian mutation. Other mutation operators, such as Cauchy mutation [121], [122], can also be used. EP and ES also allow self adaptation of strategy parameters. Evolving connection weights by EP can be implemented as follows.

- 1) Generate an initial population of $\mu$ individuals at random and set k=1. Each individual is a pair of real-valued vectors, $(\mathbf{w}_i,\eta_i), \ \forall i \in \{1,\ldots,\mu\}$ , where $\mathbf{w}_i$ 's are connection weight vectors and $\eta_i$ 's are variance vectors for Gaussian mutations (also known as strategy parameters in self-adaptive EA's). Each individual corresponds to an ANN.

- 2) Each individual $(\mathbf{w}_i, \eta_i)$ , $i = 1, ..., \mu$ , creates a single offspring $(\mathbf{w}_i', \eta_i')$ by: for j = 1, ..., n

$$\eta_i'(j) = \eta_i(j) \exp(\tau' N(0,1) + \tau N_j(0,1)) \tag{2} $$

$$w_i'(j) = w_i(j) + \eta_i'(j)N_j(0,1) \tag{3} $$

where $w_i(j)$ , $w_i'(j)$ , $\eta_i(j)$ , and $\eta_i'(j)$ denote the jth component of the vectors $\mathbf{w}_i$ , $\mathbf{w}_i'$ , $\eta_i$ and $\eta_i'$ , respectively. N(0,1) denotes a normally distributed one-dimensional random number with mean zero and variance one. $N_j(0,1)$ indicates that the random number is generated anew for each value of j. The parameters $\tau$ and $\tau'$ are commonly set to $(\sqrt{2\sqrt{n}})^{-1}$ and $(\sqrt{2n})^{-1}$ [15], [123]. $N_j(0,1)$ in (3) may be replaced by Cauchy mutation [121], [122], [124] for faster evolution.

- Determine the fitness of every individual, including all parents and offspring, based on the training error. Different error functions may be used here.

- 4) Conduct pairwise comparison over the union of parents $(\mathbf{w}_i, \eta_i)$ and offspring $(\mathbf{w}_i', \eta_i')$ , $\forall i \in \{1, \dots, \mu\}$ . For each individual, q opponents are chosen uniformly at random from all the parents and offspring. For each comparison, if the individual's fitness is no smaller than the opponent's, it receives a "win." Select $\mu$ individuals out of $(\mathbf{w}_i, \eta_i)$ and $(\mathbf{w}_i', \eta_i')$ , $\forall i \in \{1, \dots, \mu\}$ , that have most wins to form the next generation. (This tournament selection scheme may be replaced by other selection schemes, such as [1251.)

- 5) Stop if the halting criterion is satisfied; otherwise, k = k + 1 and go to Step 2).

C. Comparison Between Evolutionary Training and Gradient-Based Training

As indicated at the beginning of Section II, the evolutionary training approach is attractive because it can handle the global search problem better in a vast, complex, multimodal, and nondifferentiable surface. It does not depend on gradient information of the error (or fitness) function and thus is particularly appealing when this information

is unavailable or very costly to obtain or estimate. For example, the evolutionary approach has been used to train recurrent ANN's [41], [60], [65], [100], [102], [103], [106], [117], [126]–[128], higher order ANN's [52], [53], and fuzzy ANN's [76], [77], [129], [130]. Moreover, the same EA can be used to train many different networks regardless of whether they are feedforward, recurrent, or higher order ANN's. The general applicability of the evolutionary approach saves a lot of human efforts in developing different training algorithms for different types of ANN's.

The evolutionary approach also makes it easier to generate ANN's with some special characteristics. For example, the ANN's complexity can be decreased and its generalization increased by including a complexity (regularization) term in the fitness function. Unlike the case in gradient-based training, this term does not need to be differentiable or even continuous. Weight sharing and weight decay can also be incorporated into the fitness function easily.

Evolutionary training can be slow for some problems in comparison with fast variants of BP [131] and conjugate gradient algorithms [19], [132]. However, EA's are generally much less sensitive to initial conditions of training. They always search for a globally optimal solution, while a gradient descent algorithm can only find a local optimum in a neighborhood of the initial solution.

For some problems, evolutionary training can be significantly faster and more reliable than BP [30], [34], [40], [63], [83], [89]. Prados [34] described a GA-based training algorithm which is "significantly faster than methods that use the generalized delta rule (GDR)." For the three tests reported in his paper [34], the GA-based training algorithm "took a total of about 3 hours and 20 minutes, and the GDR took a total of about 23 hours and 40 minutes." Bartlett and Downs [30] also gave a modified GA which was "an order of magnitude" faster than BP for the 7-bit parity problem. The modified GA seemed to have better scalability than BP since it was "around twice" as slow as BP for the XOR problem but faster than BP for the larger 7-bit parity problem.

Interestingly, quite different results were reported by Kitano [133]. He found that the GA–BP method, a technique that runs a GA first and then BP, "is, at best, equally efficient to faster variants of back propagation in very small scale networks, but far less efficient in larger networks." The test problems he used included the XOR problem, various size encoder/decoder problems, and the two-spiral problem. However, there have been many other papers which report excellent results using hybrid evolutionary and gradient descent algorithms [32], [67], [70], [71], [74], [80], [81], [86], [103], [105], [110]–[112].

The discrepancy between two seemingly contradictory results can be attributed at least partly to the different EA's and BP compared. That is, whether the comparison is between a classical binary GA and a fast BP algorithm, or between a fast EA and a classical BP algorithm. The discrepancy also shows that there is no clear winner in terms of the best training algorithm. The best one is always problem dependent. This is certainly true according to the

no-free-lunch theorem [134]. In general, hybrid algorithms tend to perform better than others for a large number of problems.

D. Hybrid Training

Most EA's are rather inefficient in fine-tuned local search although they are good at global search. This is especially true for GA's. The efficiency of evolutionary training can be improved significantly by incorporating a local search procedure into the evolution, i.e., combining EA's global search ability with local search's ability to fine tune. EA's can be used to locate a good region in the space and then a local search procedure is used to find a near-optimal solution in this region. The local search algorithm could be BP [32], [133] or other random search algorithms [30], [135]. Hybrid training has been used successfully in many application areas [32], [67], [70], [71], [74], [80], [81], [86], [103], [105], [110]–[112].

Lee [81] and many others [32], [136]–[138] used GA's to search for a near-optimal set of initial connection weights and then used BP to perform local search from these initial weights. Their results showed that the hybrid GA/BP approach was more efficient than either the GA or BP algorithm used alone. If we consider that BP often has to run several times in practice in order to find good connection weights due to its sensitivity to initial conditions, the hybrid training algorithm will be quite competitive. Similar work on the evolution of initial weights has also been done on competitive learning neural networks [139] and Kohonen networks [140].

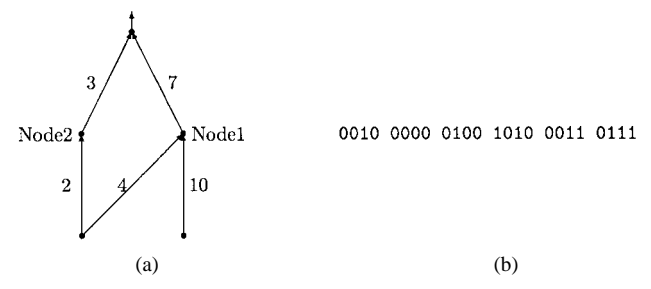

It is interesting to consider finding good initial weights as locating a good region in the weight space. Defining that basin of attraction of a local minimum as being composed of all the points, sets of weights in this case, which can converge to the local minimum through a local search algorithm, then a global minimum can easily be found by the local search algorithm if an EA can locate a point, i.e., a set of initial weights, in the basin of attraction of the global minimum. Fig. 5 illustrates a simple case where there is only one connection weight in the ANN. If an EA can find an initial weight such as $w_{I2}$ , it would be easy for a local search algorithm to arrive at the globally optimal weight $w_B$ even though $w_{I2}$ itself is not as good as $w_{I1}$ .

III. THE EVOLUTION OF ARCHITECTURES

Section II assumed that the architecture of an ANN is predefined and fixed during the evolution of connection weights. This section discusses the design of ANN architectures. The architecture of an ANN includes its topological structure, i.e., connectivity, and the transfer function of each node in the ANN. As indicated in the beginning of this paper, architecture design is crucial in the successful application of ANN's because the architecture has significant impact on a network's information processing capabilities. Given a learning task, an ANN with only a few connections and linear nodes may not be able to perform the task at all due to its limited capability, while an ANN with

Fig. 5. An illustration of using an EA to find good initial weights such that a local search algorithm can find the globally optimal weights easily. $w_{I2}$ in this figure is an optimal initial weight because it can lead to the global optimum $w_B$ using a local search algorithm.

a large number of connections and nonlinear nodes may overfit noise in the training data and fail to have good generalization ability.

Up to now, architecture design is still very much a human expert's job. It depends heavily on the expert experience and a tedious trial-and-error process. There is no systematic way to design a near-optimal architecture for a given task automatically. Research on constructive and destructive algorithms represents an effort toward the automatic design of architectures [141]–[148]. Roughly speaking, a constructive algorithm starts with a minimal network (network with minimal number of hidden layers, nodes, and connections) and adds new layers, nodes, and connections when necessary during training while a destructive algorithm does the opposite, i.e., starts with the maximal network and deletes unnecessary layers, nodes, and connections during training. However, as indicated by Angeline et al. [149], "Such structural hill climbing methods are susceptible to becoming trapped at structural local optima." In addition, they "only investigate restricted topological subsets rather than the complete class of network architectures" [149].

Design of the optimal architecture for an ANN can be formulated as a search problem in the architecture space where each point represents an architecture. Given some performance (optimality) criteria, e.g., lowest training error, lowest network complexity, etc., about architectures, the performance level of all architectures forms a discrete surface in the space. The optimal architecture design is equivalent to finding the highest point on this surface. There are several characteristics of such a surface as indicated by Miller et al. [150] which make EA's a better candidate for searching the surface than those constructive and destructive algorithms mentioned above. These characteristics are [150]:

- the surface is infinitely large since the number of possible nodes and connections is unbounded;

-

the surface is nondifferentiable since changes in the number of nodes or connections are discrete and can have a discontinuous effect on EANN's performance;

-

Decode each individual in the current generation into an architecture. If the indirect encoding scheme is used, further detail of the architecture is specified by some developmental rules or a training process.

- Train each ANN with the decoded architecture by a predefined learning rule (some parameters of the learning rule could be evolved during training) starting from different sets of random initial connection weights and, if any, learning rule parameters.

- Compute the fitness of each individual (encoded architecture) according to the above training result and other performance criteria such as the complexity of the architecture.

-

- Select parents from the population based on their fitness.

-

- Apply search operators to the parents and generate offspring which form the next generation.

Fig. 6. A typical cycle of the evolution of architectures.

- the surface is complex and noisy since the mapping from an architecture to its performance is indirect, strongly epistatic, and dependent on the evaluation method used;

- 4) the surface is deceptive since similar architectures may have quite different performance;

- 5) the surface is multimodal since different architectures may have similar performance.

Similar to the evolution of connection weights, two major phases involved in the evolution of architectures are the genotype representation scheme of architectures and the EA used to evolve ANN architectures. One of the key issues in encoding ANN architectures is to decide how much information about an architecture should be encoded in the chromosome. At one extreme, all the details, i.e., every connection and node of an architecture, can be specified by the chromosome. This kind of representation scheme is called direct encoding. At the other extreme, only the most important parameters of an architecture, such as the number of hidden layers and hidden nodes in each layer, are encoded. Other details about the architecture are left to the training process to decide. This kind of representation scheme is called indirect encoding. After a representation scheme has been chosen, the evolution of architectures can progress according to the cycle shown in Fig. 6. The cycle stops when a satisfactory ANN is found.

Considerable research on evolving ANN architectures has been carried out in recent years [33], [42], [45], [118], [127], [128], [130], [138], [149]–[225]. Most of the research has concentrated on the evolution of ANN topological structures. Relatively little has been done on the evolution of node transfer functions, let alone the simultaneous evolution of both topological structures and node transfer functions. In this paper, we will analyze the genotypical representation scheme of topological structures in Sections III-A and III-B. For convenience, the term architecture will be used interchangeably with the

term topological structure (topology) in these two sections. Section III-C discusses the evolution of node transfer functions briefly. Then we explain why the simultaneous evolution of ANN connection weights and architectures is beneficial and what search operators should be used in evolving architectures in Section III-D.

A. The Direct Encoding Scheme

Two different approaches have been taken in the direct encoding scheme. The first separates the evolution of architectures from that of connection weights [24], [150], [153], [154], [165], [167], [169], [170]. The second approach evolves architectures and connection weights simultaneously [149], [179], [180], [182], [185]–[200]. This section will focus on the first approach. The second approach will be discussed in Section III-D.

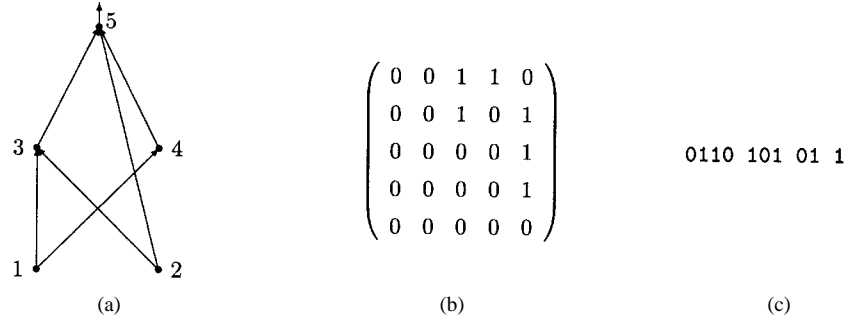

In the first approach, each connection of an architecture is directly specified by its binary representation [24], [150], [153], [154], [165], [167], [169], [170], [202]. For example, an $N \times N$ matrix $C = (c_{ij})_{N \times N}$ can represent an ANN architecture with N nodes, where $c_{ij}$ indicates presence or absence of the connection from node i to node j. We can use $c_{ij} = 1$ to indicate a connection and $c_{ij} = 0$ to indicate no connection. In fact, $c_{ij}$ can represent real-valued connection weights from node i to node j so that the architecture and connection weights can be evolved simultaneously [37], [42], [45], [165], [166], [169]–[171].

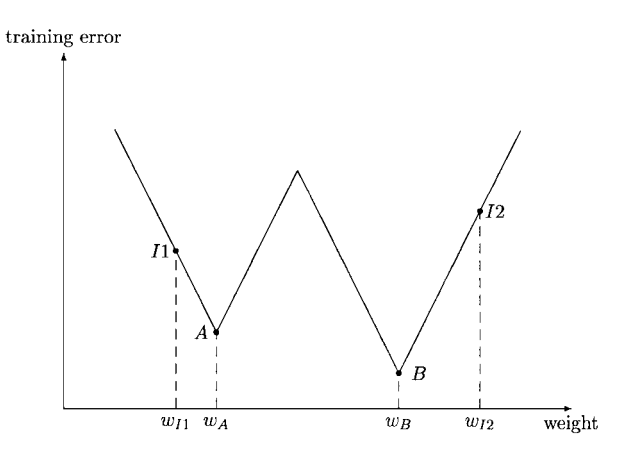

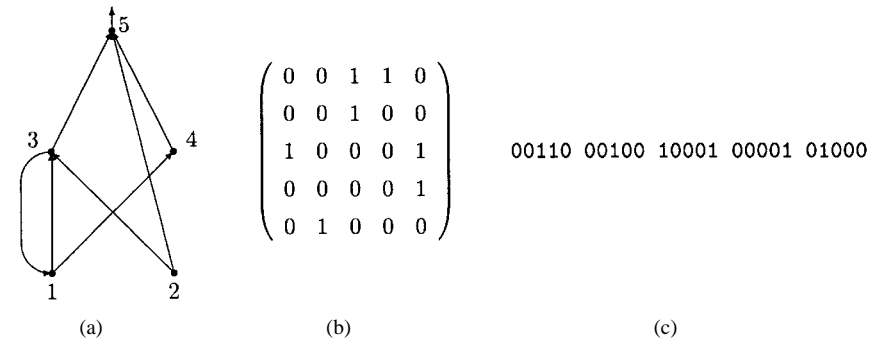

Each matrix C has a direct one-to-one mapping to the corresponding ANN architecture. The binary string representing an architecture is the concatenation of rows (or columns) of the matrix. Constraints on architectures being explored can easily be incorporated into such a representation scheme by imposing constraints on the matrix, e.g., a feedforward ANN will have nonzero entries only in the upper-right triangle of the matrix. Figs. 7 and 8 give two examples of the direct encoding scheme of ANN architectures. It is obvious that such an encoding scheme can handle both feedforward and recurrent ANN's.

Fig. 7. An example of the direct encoding of a feedforward ANN. (a), (b), and (c) show the architecture, its connectivity matrix, and its binary string representation, respectively. Because only feedforward architectures are under consideration, the binary string representation only needs to consider the upper-right triangle of the matrix.

Fig. 8. An example of the direct encoding of a recurrent ANN. (a), (b), and (c) show the architecture, its connectivity matrix, and its binary string representation, respectively.

Fig. 7(a) shows a feedforward ANN with two inputs and one output. Its connectivity matrix is given by Fig. 7(b), where entry $c_{ij}$ indicates the presence or absence of a connection from node i to node j. For example, the first row indicates connections from node 1 to all other nodes. The first two columns are 0's because there is no connection from node 1 to itself and no connection to node 2. However, node 1 is connected to nodes 3 and 4. Hence columns 3 and 4 have 1's. Converting this connectivity matrix to a chromosome is straightforward. We can concatenate all the rows (or columns) and obtain

Since the ANN is feedforward, we only need to represent the upper triangle of the connectivity matrix in order to reduce the chromosome length. The reduced chromosome is given by Fig. 7(c). An EA can then be employed to evolve a population of such chromosomes. In order to evaluate the fitness of each chromosome, we need to convert a chromosome back to an ANN, initialize it with random weights, and train it. The training error will be used to measure the fitness. It is worth noting that the ANN in Fig. 7 has a shortcut connection from the input to output. Such shortcuts pose no problems to the representation and evolution. An EA is capable of exploring all possible connectivities.

Fig. 8 shows a recurrent ANN. Its representation is basically the same as that for feedforward ANN's. The only difference is that no reduction in chromosome length

is possible if we want to explore the whole connectivity space. The EA used to evolve recurrent ANN's can be the same as that used to evolve feedforward ones.

The direct encoding scheme as described above is quite straightforward to implement. It is very suitable for the precise and fine-tuned search of a compact ANN architecture, since a single connection can be added or removed from the ANN easily. It may facilitate rapid generation and optimization of tightly pruned interesting designs that no one has hit upon so far [150].

Another flexibility provided by the evolution of architectures stems from the fitness definition. There is virtually no limitation such as being differentiable or continuous on how the fitness function should be defined at Step 3 in Fig. 6. The training result pertaining to an architecture such as the error and the training time is often used in the fitness function. The complexity measurement such as the number of nodes and connections is also used in the fitness function. As a matter of fact, many criteria based on the information theory or statistics [226]-[228] can readily be introduced into the fitness function without much difficulty. Improvement on ANN's generalization ability can be expected if these criteria are adopted. Schaffer et al. [153] have presented an experiment which showed that an ANN designed by the evolutionary approach had better generalization ability than one trained by BP using a human-designed architecture.

One potential problem of the direct encoding scheme is scalability. A large ANN would require a very large matrix and thus increase the computation time of the evolution. One way to cut down the size of matrices is to use domain knowledge to reduce the search space. For example, if complete connection is to be used between two neighboring layers in a feedforward ANN, its architecture can be encoded by just the number of hidden layers and nodes in each hidden layer. The length of chromosome can be reduced greatly in this case [153], [154]. However, doing so requires sufficient domain knowledge and expertise, which are difficult to obtain in practice. We also run the risk of missing some very good solutions when we restrict the search space manually.

The permutation problem as illustrated by Figs. 3 and 4 in Section II-A still exists and causes unwanted side effects in the evolution of architectures. Because two functionally equivalent ANN's which order their hidden nodes differently have two different genotypical representations, the probability of producing a highly fit offspring by recombining them is often very low. Some researchers thus avoided crossover and adopted only mutations in the evolution of architectures [45], [128], [149], [179], [185]–[197], [217], [223], although it has been shown that crossover may be useful and important in increasing the efficiency of evolution for some problems [48], [113], [212], [229]. Hancock [113] suggested that the permutation problem might "not be as severe as had been supposed" with the population size and the selection mechanism he used because "The increased number of ways of solving the problem outweigh the difficulties of bringing building blocks together." Thierens [101] proposed a genetic encoding scheme of ANN's which can avoid the permutation problem, however, only very limited experimental results were presented. It is worth indicating that most studies on the permutation problem concentrate on the GA used, e.g., genetic operators, population sizes, selection mechanisms, etc. While it is necessary to investigate the algorithm, it is equally important to study the genotypical representation scheme, since the performance surface defined in the beginning of Section III is determined by the representation. More research is needed to further understand the impact of the permutation problem on the evolution of architectures.

B. The Indirect Encoding Scheme

In order to reduce the length of the genotypical representation of architectures, the indirect encoding scheme has been used by many researchers [151], [152], [155], [156], [159], [160], [168], [184], [205], [208], [211], [230]–[232] where only some characteristics of an architecture are encoded in the chromosome. The details about each connection in an ANN is either predefined according to prior knowledge or specified by a set of deterministic developmental rules. The indirect encoding scheme can produce more compact genotypical representation of ANN architectures, but it may not be very good at finding a compact ANN with good generalization ability. Some [151], [230], [231] have argued that the indirect encoding scheme is biologically more plausible than the direct one, because it is impossible for genetic information encoded in

chromosomes to specify independently the whole nervous system according to the discoveries of neuroscience.

1) Parametric Representation: ANN architectures may be specified by a set of parameters such as the number of hidden layers, the number of hidden nodes in each layer, the number of connections between two layers, etc. These parameters can be encoded in various forms in a chromosome. Harp et al. [152], [156] used a "blueprint" to represent an architecture which consists of one or more segments representing an area (layer) and its efferent connectivity (projections). The first and last area are constrained to be the input and output area, respectively. Each segment includes two parts of the information: 1) that about the area itself, such as the number of nodes in the area and the spatial organization of the area, and 2) that about the efferent connectivity. It should be noted that only the connectivity pattern instead of each connection is specified here. The detailed node-to-node connection is specified by implicit developmental rules, e.g., the network instantiation software used by Harp et al. [152], [156]. Similar parametric representation methods with different sets of parameters have also been proposed by others [155], [159]. An interesting aspect of Harp et al.'s work is their combination of learning parameters with architectures in the genotypical representation. The learning parameters can evolve along with architecture parameters. The interaction between the two can be explored through evolution.

Although the parametric representation method can reduce the length of binary chromosome specifying ANN's architectures, EA's can only search a limited subset of the whole feasible architecture space. For example, if we encode only the number of hidden nodes in the hidden layer, we basically assume strictly layered feedforward ANN's with a single hidden layer. We will have to assume two neighboring layers are fully connected as well. In general, the parametric representation method will be most suitable when we know what kind of architectures we are trying to find.

2) Developmental Rule Representation: A quite different indirect encoding method from the above is to encode developmental rules, which are used to construct architectures, in chromosomes [151], [168], [184], [205], [230], [232]. The shift from the direct optimization of architectures to the optimization of developmental rules has brought some benefits, such as more compact genotypical representation, to the evolution of architectures. The destructive effect of crossover will also be lessened since the developmental rule representation is capable of preserving promising building blocks found so far [151]. But this method also has some problems [233].

A developmental rule is usually described by a recursive equation [230] or a generation rule similar to a production rule in a production system with a left-hand side (LHS) and a right-hand side (RHS) [151]. The connectivity pattern of the architecture in the form of a matrix is constructed from a basis, i.e., a single-element matrix, by repetitively applying suitable developmental rules to nonterminal elements in

$$S \longrightarrow \begin{pmatrix} A & B \\ C & D \end{pmatrix}$$

$$A \longrightarrow \begin{pmatrix} a & a \\ a & a \end{pmatrix} \quad B \longrightarrow \begin{pmatrix} i & i \\ i & a \end{pmatrix} \quad C \longrightarrow \begin{pmatrix} i & a \\ a & c \end{pmatrix} \quad D \longrightarrow \begin{pmatrix} a & e \\ a & e \end{pmatrix} \quad \cdots$$

$$a \longrightarrow \begin{pmatrix} 0 & 0 \\ 0 & 0 \end{pmatrix} \quad c \longrightarrow \begin{pmatrix} 0 & 0 \\ 1 & 0 \end{pmatrix} \quad e \longrightarrow \begin{pmatrix} 0 & 1 \\ 0 & 0 \end{pmatrix} \quad i \longrightarrow \begin{pmatrix} 1 & 0 \\ 0 & 0 \end{pmatrix} \quad \cdots$$

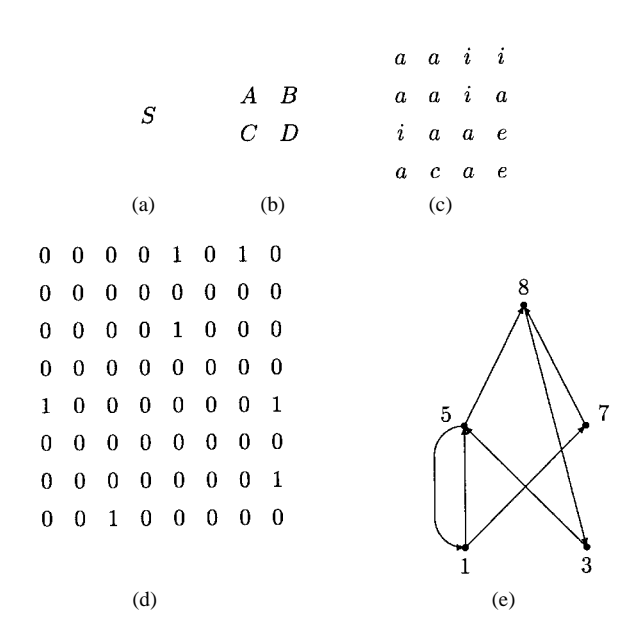

Fig. 9. Examples of some developmental rules used to construct a connectivity matrix. S is the initial element (or state).

the current matrix until the matrix contains only terminal2 elements which indicate the presence or absence of a connection, that is, until a connectivity pattern is fully specified.

Some examples of developmental rule are given in Fig. 9. Each developmental rule consists of a LHS which is a nonterminal element and a RHS which is a 2 2 matrix with either terminal or nonterminal elements. A typical step of constructing a connection matrix is to find rules whose LHS's appear in the current matrix and replace all the appearance with respective RHS's. For example, given a set of rules as described by Fig. 9, where is the starting symbol (state), one step application of these rules will produce the matrix

$$\begin{pmatrix} A & B \\ C & D \end{pmatrix}$$

by replacing with . If we apply these rules again, we can generate the following matrix:

$$\begin{pmatrix} a & a & i & i \\ a & a & i & a \\ i & a & a & e \\ a & c & a & e \end{pmatrix}$$

by replacing with with with , and with . Another step of applying these rules would lead us to the matrix

$$\begin{pmatrix} 0 & 0 & 0 & 0 & 1 & 0 & 1 & 0 \\ 0 & 0 & 0 & 0 & 0 & 0 & 0 & 0 \\ 0 & 0 &$$

by replacing with with with , and with . Since the above matrix consists of ones and zeros only (i.e., terminals only), there will be no further application of developmental rules. The above matrix will be an ANN's connection matrix.

Fig. 10 summarizes the previous three rule-rewriting steps and the final ANN generated according to Fig. 10(d).

Fig. 10. Development of an EANN architecture using the rules given in Fig. 9: (a) the initial state; (b) step 1; (c) step 2; (d) step 3 when all the entries in the matrix are terminal elements, i.e., either 1 or 0; and (e) the architecture. The nodes in the architecture are numbered from one to eight. Isolated nodes are not shown.

Note that nodes 2, 4, and 6 do not appear in the ANN because they are not connected to any other nodes.

The example described by Figs. 9 and 10 illustrates how an ANN architecture can be defined given a set of rules. The question now is how to get such a set of rules to construct an ANN. One answer is to evolve them. We can encode the whole rule set as an individual [151] (the socalled Pitt approach) or encode each rule as an individual [184] (the so-called Michigan approach). Each rule may be represented by four allele positions corresponding to four elements in the RHS of the rule. The LHS can be represented implicitly by the rule's position in the chromosome. Each position in a chromosome can take one of many different values, depending on how many nonterminal elements (symbols) we use in the rule set. For example, the nonterminals may range from " " to " " and " " to " ." The 16 rules with " " to " " on the LHS and 2 2 matrices with only ones and zeros on the RHS are predefined and do not participate in evolution in order to guarantee that different connectivity patterns can be reached. Since there are 26 different rules, whose LHS is " ," " ," ," ," respectively, a chromosome encoding all of them would need 26 4 104 alleles, four per rule.

2 In this paper, a terminal element is either 1 (existence of a connection) or 0 (nonexistence of a connection) and a nonterminal element is a symbol other than 1 and 0. These definitions are slightly different from those used by others [151].

The LHS of a rule is implicitly determined by its position in the chromosome. For example, the rule set in Fig. 9 can be represented by the following chromosome:

ABCDaaaaiiiaiaacaeae...

where the first four elements indicate the RHS of rule "S", the second four indicate the RHS of rule "A," etc.

Some good results from the developmental rule representation method have been reported [151] using various size encoder/decoder problems. However, the method has some limitations. It often needs to predefine the number of rewriting steps. It does not allow recursive rules. It is not very good at evolving detailed connectivity patterns among individual nodes. A compact genotypical representation does not imply a compact phenotypical representation, i.e., a compact ANN architecture. Recent work by Siddigi and Lucas [233] shows that the direct encoding scheme can be at least as good as the developmental rule method. They have reimplemented Kitano's system and discovered that the performance difference between the direct and indirect encoding schemes was not caused by the encoding scheme itself, but by how sparsely connected the initial ANN architectures were in the initial population [151]. The direct encoding scheme achieved the same performance as that achieved by the developmental rule representation when the initial conditions were the same [233].

The developmental rule representation method normally separates the evolution of architectures from that of connection weights. This creates some problems for evolution. Section III-D will discuss these in more detail.

Mjolsness et al. [230] described a similar rule encoding method where rules are represented by recursive equations which specify the growth of connection matrices. Coefficients of these recursive equations, represented by decomposition matrices, are encoded in genotypes and optimized by simulated annealing instead of EA's. Connection weights are optimized along with connectivity by simulated annealing since each entry of a connection matrix can have a real-valued weight. One advantage of using simulated annealing instead of GA's in the evolution is the avoidance of the destructive effect of crossover. Wilson [234] also used simulated annealing in ANN architecture design.

- 3) Fractal Representation: Merrill and Port [231] proposed another method for encoding architectures which is based on the use of fractal subsets of the plane. They argued that the fractal representation of architectures was biologically more plausible than the developmental rule representation. They used three real-valued parameters, i.e., an edge code, an input coefficient, and an output coefficient to specify each node in an architecture. In a sense, this encoding method is closer to the direct encoding scheme rather to the indirect one. Fast simulated annealing [235] was used in the evolution.

- 4) Other Representations: A very different approach to the evolution of architectures has been proposed by Andersen and Tsoi [236]. Their approach is unique in that each individual in a population represents a hidden node rather than the whole architecture. An architecture is built layer by layer, i.e., hidden layers are added one by one if the

current architecture cannot reduce the training error below certain threshold. Each hidden layer is constructed automatically through an evolutionary process which employs the GA with fitness sharing. Fitness sharing encourages the formation of different feature detectors (hidden nodes) in the population. The number of hidden nodes in each hidden layer can vary.

One limitation of this approach [236] is that it could only deal with strictly layered feedforward ANN's. Another limitation is that there are usually several hidden nodes in the same species which have very similar functionality, i.e., which are basically the same feature detector in a population. Such redundancy needs to be removed by an additional clean-up algorithm.

Smith and Cribbs [181], [237] also used an individual to represent a hidden node rather than the whole ANN. Their approach can only deal with strictly three-layered feedforward ANN's.

C. The Evolution of Node Transfer Functions

The discussion on the evolution of architectures so far only deals with the topological structure of an architecture. The transfer function of each node in the architecture has been assumed to be fixed and predefined by human experts, yet the transfer function has been shown to be an important part of an ANN architecture and have significant impact on ANN's performance [238]–[240]. The transfer function is often assumed to be the same for all the nodes in an ANN, at least for all the nodes in the same layer.

Stork et al. [241] were, to our best knowledge, the first to apply EA's to the evolution of both topological structures and node transfer functions even though only simple ANN's with seven nodes were considered. The transfer function was specified in the structural genes in their genotypic representation. It was much more complex than the usual sigmoid function because they tried to model a biological neuron in the tailflip circuitry of crayfish.

White and Ligomenides [171] adopted a simpler approach to the evolution of both topological structures and node transfer functions. For each individual (i.e., ANN) in the initial population, 80% nodes in the ANN used the sigmoid transfer function and 20% nodes used the Gaussian transfer function. The evolution was used to decide the optimal mixture between these two transfer functions automatically. The sigmoid and Gaussian transfer function themselves were not evolvable. No parameters of the two functions were evolved.

Liu and Yao [191] used EP to evolve ANN's with both sigmoidal and Gaussian nodes. Rather than fixing the total number of nodes and evolve mixture of different nodes, their algorithm allowed growth and shrinking of the whole ANN by adding or deleting a node (either sigmoidal or Gaussian). The type of node added or deleted was determined at random. Good performance was reported for some benchmark problems [191]. Hwang et al. [225] went one step further. They evolved ANN topology, node transfer function, as well as connection weights for projection neural networks.

Sebald and Chellapilla [242] used the evolution of node transfer function as an example to show the importance of evolving representations. Representation and search are the two key issues in problem solving. Co-evolving solutions and their representations may be an effective way to tackle some difficult problems where little human expertise is available.

D. Simultaneous Evolution of Architectures and Connection Weights

The evolutionary approaches discussed so far in designing ANN architectures evolve architectures only, without any connection weights. Connection weights have to be learned after a near-optimal architecture is found. This is especially true if one uses the indirect encoding scheme, such as the developmental rule method. One major problem with the evolution of architectures without connection weights is noisy fitness evaluation [194]. In other words, fitness evaluation as described in step 3 of Fig. 6 is very inaccurate and noisy because a phenotype's (i.e., an ANN with a full set of weights) fitness was used to approximate its genotype's (i.e., an ANN without any weight information) fitness. There are two major sources of noise [194].

- The first source is the random initialization of the weights. Different random initial weights may produce different training results. Hence, the same genotype may have quite different fitness due to different random initial weights used in training.

- 2) The second source is the training algorithm. Different training algorithms may produce different training results even from the same set of initial weights. This is especially true for multimodal error functions. For example, BP may reduce an ANN's error to 0.05 through training, but an EA could reduce the error to 0.001 due to its global search capability.

Such noise may mislead evolution because the fact that the fitness of a phenotype generated from genotype $G_1$ is higher than that generated from genotype $G_2$ does not mean that $G_1$ truly has higher quality than $G_2$ . In order to reduce such noise, an architecture usually has to be trained many times from different random initial weights. The average result is then used to estimate the genotype's mean fitness. This method increases the computation time for fitness evaluation dramatically. It is one of the major reasons why only small ANN's were evolved in previous studies.

In essence, the noise identified in this paper is caused by the one-to-many mapping from genotypes to phenotypes. Angeline et al. [149] and Fogel [12], [243] have provided a more general discussion on the mapping between genotypes and phenotypes. It is clear that the evolution of architectures without any weight information has difficulties in evaluating fitness accurately. As a result, the evolution would be very inefficient.

One way to alleviate this problem is to evolve ANN architectures and connection weights simultaneously [37], [42], [45], [149], [165], [166], [169]–[172], [179], [180],

[182], [185]–[200], [230], [232]. In this case, each individual in a population is a fully specified ANN with complete weight information. Since there is a one-to-one mapping between a genotype and its phenotype, fitness evaluation is accurate.

One issue in evolving ANN's is the choice of search operators used in EA's. Both crossover-based and mutation-based EA's have been used. However, use of crossover appears to contradict the basic ideas behind ANN's, because crossover works best when there exist "building blocks" but it is unclear what a building block might be in an ANN since ANN's emphasize distributed (knowledge) representation [244]. The knowledge in an ANN is distributed among all the weights in the ANN. Recombining one part of an ANN with another part of another ANN is likely to destroy both ANN's.

However, if ANN's do not use a distributed representation but rather a localized one, such as radial basis function (RBF) networks or nearest-neighbor multilayer perceptrons, crossover might be a very useful operator. There has been some work in this area where good results were reported [119], [120], [245]–[253]. In general, ANN's using distributed representation are more compact and have better generalization capability for most practical problems.

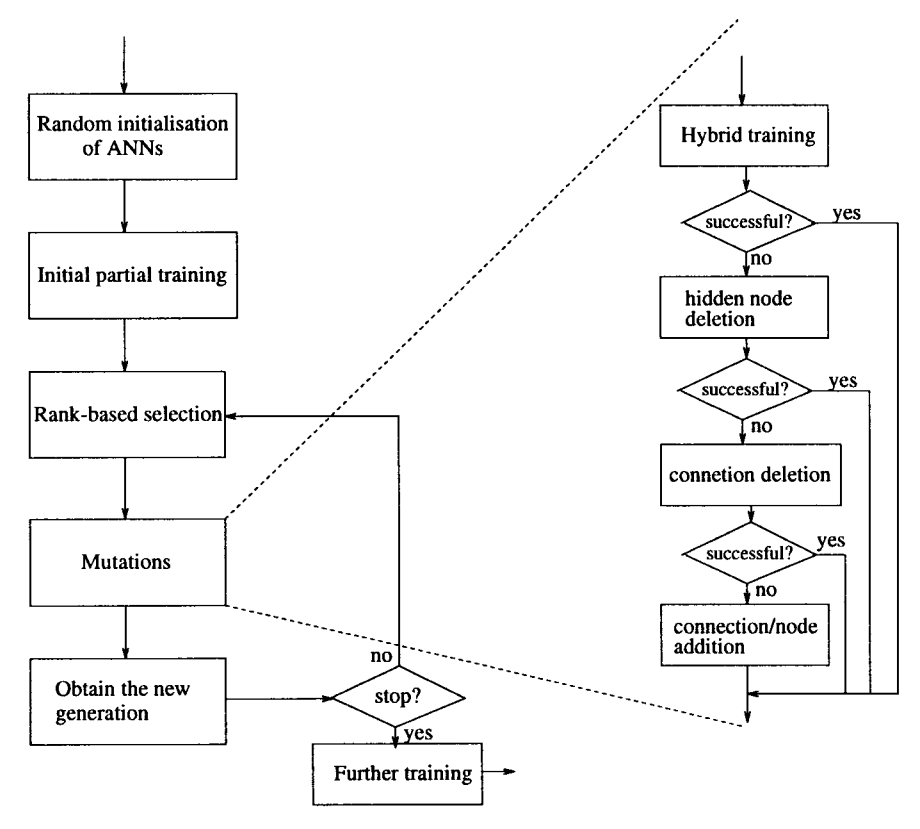

Yao and Liu [193], [194] developed an automatic system, EPNet, based on EP for simultaneous evolution of ANN architectures and connection weights. EPNet does not use any crossover operators for the reason given above. It relies on a number of mutation operators to modify architectures and weights. Behavioral (i.e., functional) evolution, rather genetic evolution, is emphasized in EPNet. A number of techniques were adopted to maintain the behavioral link between a parent and its offspring [190]. Fig. 11 shows the main structure of EPNet.

EPNet uses rank-based selection [125] and five mutations: hybrid training; node deletion; connection deletion; connection addition; and node addition [188], [194], [254]. Hybrid training is the only mutation in EPNet which modifies ANN's weights. It is based on a modified BP (MBP) algorithm with an adaptive learning rate and simulated annealing. The other four mutations are used to grow and prune hidden nodes and connections.

The number of epochs used by MBP to train each ANN's in a population is defined by two user-specified parameters. There is no guarantee that an ANN will converge to even a local optimum after those epochs. Hence this training process is called partial training. It is used to bridge the behavioral gap between a parent and its offspring.

The five mutations are attempted sequentially. If one mutation leads to a better offspring, it is regarded as successful. No further mutation will be applied. Otherwise the next mutation is attempted. The motivation behind ordering mutations is to encourage the evolution of compact ANN's without sacrificing generalization. A validation set is used in EPNet to measure the fitness of an individual.

EPNet has been tested extensively on a number of benchmark problems and achieved excellent results, including parity problems of size from four to eight, the two-spiral

Fig. 11. The main structure of EPNet.

problem, the breast cancer problem, the diabetes problem, the heart disease problem, the thyroid problem, the Australian credit card problem, the Mackey–Glass time series prediction problem, etc. Very compact ANN's with good generalization ability have been evolved [185]–[195]. There are some other different EP-based systems for designing ANN's [128], [129], [217], [223], but none has been tested on as many different benchmark problems.

IV. THE EVOLUTION OF LEARNING RULES

An ANN training algorithm may have different performance when applied to different architectures. The design of training algorithms, more fundamentally the learning rules used to adjust connection weights, depends on the type of architectures under investigation. Different variants of the Hebbian learning rule have been proposed to deal with different architectures. However, designing an optimal learning rule becomes very difficult when there is little prior knowledge about the ANN's architecture, which is often the case in practice. It is desirable to develop an automatic and systematic way to adapt the learning rule to an architecture and the task to be performed. Designing a learning rule manually often implies that some assumptions, which are not necessarily true in practice, have to be made. For example, the widely accepted Hebbian learning rule has recently been shown to be outperformed by a new rule proposed by Artola et al. [255] in many cases [256]. The new rule can learn more patterns than the optimal Hebbian rule and can learn exceptions as well as regularities. It is, however, still difficult to say that this rule is optimal for

all ANN's. In fact, what is needed from an ANN is its ability to adjust its learning rule adaptively according to its architecture and the task to be performed. In other words, an ANN should learn its learning rule dynamically rather than have it designed and fixed manually. Since evolution is one of the most fundamental forms of adaptation, it is not surprising that the evolution of learning rules has been introduced into ANN's in order to learn their learning rules.

The relationship between evolution and learning is extremely complex. Various models have been proposed [257]-[271], but most of them deal with the issue of how learning can guide evolution [257]-[260] and the relationship between the evolution of architectures and that of connection weights [261]-[263]. Research into the evolution of learning rules is still in its early stages [264]-[267], [269], [270]. This research is important not only in providing an automatic way of optimizing learning rules and in modeling the relationship between learning and evolution, but also in modeling the creative process since newly evolved learning rules can deal with a complex and dynamic environment. This research will help us to understand better how creativity can emerge in artificial systems, like ANN's, and how to model the creative process in biological systems. A typical cycle of the evolution of learning rules can be described by Fig. 12. The iteration stops when the population converges or a predefined maximum number of iterations has been reached.

Similar to the reason explained in Section III-D, the fitness evaluation of each individual, i.e., the encoded learning rule, is very noisy because we use phenotype's

-

- Decode each individual in the current generation into a learning rule.

-

- Construct a set of ANNs with randomly generated architectures and initial connection weights, and train them using the decoded learning rule.

-

- Calculate the fitness of each individual (encoded learning rule) according to the average training result.

-

- Select parents from the current generation according to their fitness.

-

- Apply search operators to parents to generate offspring which form the new generation.

Fig. 12. A typical cycle of the evolution of learning rules.

fitness (i.e., an ANN's training result) to approximate genotype's fitness (i.e., a learning rule's fitness). Such approximation may be inaccurate. Some techniques have been used to alleviate this problem, e.g., using a weighted average of the training results from ANN's with different initial connection weights in the fitness function. If the ANN architecture is predefined and fixed, the evolved learning rule would be optimized toward this architecture. If a near-optimal learning rule for different ANN architectures is to be evolved, the fitness evaluation should be based on the average training result from different ANN architectures in order to avoid overfitting a particular architecture.

A. The Evolution of Algorithmic Parameters

The adaptive adjustment of BP parameters (such as the learning rate and momentum) through evolution could be considered as the first attempt of the evolution of learning rules [32], [152], [272]. Harp et al. [152] encoded BP's parameters in chromosomes together with ANN's architecture. This evolutionary approach is different from the nonevolutionary one such as offered by Jacobs [273] because the simultaneous evolution of both algorithmic parameters and architectures facilitates exploration of interactions between the learning algorithm and architectures such that a near-optimal combination of BP with an architecture can be found.

Other researchers [32], [139], [213], [272] also used an evolutionary process to find parameters for BP but ANN's architecture was predefined. The parameters evolved in this case tend to be optimized toward the architecture rather than being generally applicable to learning. There are a number of BP algorithms with an adaptive learning rate and momentum where a nonevolutionary approach is used. Further comparison between the two approaches would be quite useful.

B. The Evolution of Learning Rules

The evolution of algorithmic parameters is certainly interesting but it hardly touches the fundamental part of a training algorithm, i.e., its learning rule or weight updating rule. Adapting a learning rule through evolution is expected to enhance ANN's adaptivity greatly in a dynamic environment.

Unlike the evolution of connection weights and architectures which only deal with static objects in an ANN, i.e., weights and architectures, the evolution of learning rules has to work on the dynamic behavior of an ANN. The key issue here is how to encode the dynamic behavior of a learning rule into static chromosomes. Trying to develop a universal representation scheme which can specify any kind of dynamic behaviors is clearly impractical, let alone the prohibitive long computation time required to search such a learning rule space. Constraints have to be set on the type of dynamic behaviors, i.e., the basic form of learning rules being evolved in order to reduce the representation complexity and the search space.

Two basic assumptions which have often been made on learning rules are: 1) weight updating depends only on local information such as the activation of the input node, the activation of the output node, the current connection weight, etc., and 2) the learning rule is the same for all connections in an ANN. A learning rule is assumed to be a linear function of these local variables and their products. That is, a learning rule can be described by the function [5]

$$\Delta w(t) = \sum_{k=1}^{n} \sum_{i_1, i_2, \dots, i_k=1}^{n} \left( \theta_{i_1 i_2 \dots i_k} \prod_{j=1}^{k} x_{i_j} (t-1) \right) \tag{4} $$

where t is time, $\Delta w$ is the weight change, $x_1, x_2, \ldots, x_n$ are local variables, and the $\theta$ 's are real-valued coefficients which will be determined by evolution. In other words, the evolution of learning rules in this case is equivalent to the evolution of real-valued vectors of $\theta$ 's. Different $\theta$ 's determine different learning rules. Due to a large number of possible terms in (4), which would make evolution very slow and impractical, only a few terms have been used in practice according to some biological or heuristic knowledge.

There are three major issues involved in the evolution of learning rules: 1) determination of a subset of terms described in (4); 2) representation of their real-valued coefficients as chromosomes; and 3) the EA used to evolve these chromosomes. Chalmers [264] defined a learning rule as a linear combination of four local variables and their six pairwise products. No third- or fourth-order^{3} terms

<sup>3The order is defined as the number of variables in a product.

were used. Ten coefficients and a scale parameter were encoded in a binary string via exponential encoding. The architecture used in the fitness evaluation was fixed because only single-layer ANN's were considered and the number of inputs and outputs were fixed by the learning task at hand. After 1000 generations, starting from a population of randomly generated learning rules, the evolution discovered the well-known delta rule [7], [274] and some of its variants. These experiments, although simple and preliminary, demonstrated the potential of evolution in discovering novel learning rules from a set of randomly generated rules. However, constraints set on learning rules could prevent some from being evolved such as those which include third-or fourth-order terms.

Similar experiments on the evolution of learning rules were also carried out by others [265], [266], [267], [269], [270]. Fontanari and Meir [267] used Chalmers' approach to evolve learning rules for binary perceptrons. They also considered four local variables but only seven terms were adopted in their learning rules, which included one firstorder, three second-order, and three third-order terms in (4). Baxter [269] took one step further than just the evolution of learning rules. He tried to evolve complete ANN's (including connection weights, architectures, and learning rules) in a single level of evolution. It is clear that the search space of possible ANN's would be enormous if constraints were not set on the connection weights, architectures, and learning rules. In his experiments, only ANN's with binary threshold nodes were considered, so the weights could only be +1 or -1. The number of nodes in ANN's was fixed. The learning rule only considered two Boolean variables. Although Baxter's experiments were rather simple, they confirmed that complex behaviors could be learned and the ANN's learning ability could be improved through evolution [269].

Bengio et al.'s approach [265], [266] is slightly different from Chalmers' in the sense that gradient descent algorithms and simulated annealing, rather than EA's, were used to find near-optimal $\theta$ 's. In their experiments, four local variables and one zeroth-order, three first-order, and three second-order terms in (4) were used.

Research related to the evolution of learning rules includes Parisi et al.'s work on "econets," although they did not evolve learning rules explicitly [260], [275]. They emphasized the crucial role of the environment in which the evolution occured while only using some simple neural networks. The issue of environmental diversity is closely related to the noisy fitness evaluation as pointed out in Section III-D and at the beginning of Section IV. There are two possible sources of noise. The first is the decoding process (morphogenesis) of chromosomes. The second is introduced when a decoded learning rule is evaluated by using it to train ANN's. The environmental diversity is essential in obtaining a good approximation to the fitness of the decoded learning rule and thus in reducing the noise from the second source. If a general learning rule which is applicable to a wide range of ANN architectures and learning tasks is needed, the environmental diversity has to be very high, i.e., many different architectures and learning tasks have to be used in the fitness evaluation.

V. OTHER COMBINATIONS BETWEEN ANN'S AND EA'S

A. The Evolution of Input Features

For many practical problems, the possible inputs to an ANN can be quite large. There may be some redundancy among different inputs. A large number of inputs to an ANN increase its size and thus require more training data and longer training times in order to achieve a reasonable generalization ability. Preprocessing is often needed to reduce the number of inputs to an ANN. Various dimension reduction techniques, including the principal component analysis, have been used for this purpose.

The problem of finding a near-optimal set of input features to an ANN can be formulated as a search problem. Given a large set of potential inputs, we want to find a nearoptimal subset which has the fewest number of features but the performance of the ANN using this subset is no worse than that of the ANN using the whole input set. EA's have been used to perform such a search effectively [267], [277]–[287]. Very good results, i.e., better performance with fewer inputs, have been reported from these studies. In the evolution of input features, each individual in the population represents a subset of all possible inputs. This can be implemented using a binary chromosome whose length is the same as the total number of input features. Each bit in the chromosome corresponds to a feature. "1" indicates presence of a feature, while "0" indicates absence of the feature. The evaluation of an individual is carried out by training an ANN with these inputs and using the result to calculate its fitness value. The ANN architecture is often fixed. Such evaluation is very noisy, however, because of the reason explained in Section III-D.

Not only does the evolution of input features provide a way to discover important features from all possible inputs automatically, it can also be used to discover new training examples. Zhang and Veenker [288] described an active learning paradigm where a training algorithm based on EA's can self-select training examples. Cho and Cha [289] proposed another algorithm for evolving training sets by adding virtual samples.

B. ANN as Fitness Estimator

EA's have been used with success to optimize various control parameters [290]–[292]. However, it is very time consuming and costly to obtain fitness values for some control problems as it is impractical to run a real system for each combination of control parameters. In order to get around this problem and make evolution more efficient, fitness values are often approximated rather than computed exactly. ANN's are often used to model and approximate a real control system due to their good generalization abilities. The input to such ANN's will be a set of control parameters. The output will be the control system output from which an evaluation of the whole system can easily be

obtained. When an EA is used to search for a near-optimal set of control parameters, the ANN will be used in fitness evaluation rather than the real control system [293]–[297].

This combination of ANN's and EA's has a couple of advantages in evolving control systems. First, the time-consuming fitness evaluation based on real control systems is replaced by fast fitness evaluation based on ANN's. Second, this combination provides safer evolution of control systems. EA's are stochastic algorithms. It is possible that some poor control parameters may be generated in the evolutionary process. These parameters could damage a real control system. If we use ANN's to estimate fitness, we do not need to use the real system and thus can avoid damages to the real system. However, how successful this combination approach will be depends largely on how well ANN's learn and generalize.

C. Evolving ANN Ensembles