title: Natural Evolution Strategies url: http://arxiv.org/abs/1106.4487v1

Natural Evolution Strategies

Daan Wierstra daan.wierstra@epfl.ch

Laboratory of Computational Neuroscience, Brain Mind Institute Ecole Polytechnique F´ed´erale de Lausanne (EPFL) ´ Lausanne, Switzerland

Tom Schaul tom@idsia.ch Tobias Glasmachers tobias@idsia.ch Yi Sun yi@idsia.ch J¨urgen Schmidhuber juergen@idsia.ch

Istituto Dalle Molle di Studi sull'Intelligenza Artificiale (IDSIA) University of Lugano (USI)/SUPSI Galleria 2, 6928, Lugano, Switzerland

Editor: unknown

Abstract

This paper presents Natural Evolution Strategies (NES), a recent family of algorithms that constitute a more principled approach to black-box optimization than established evolutionary algorithms. NES maintains a parameterized distribution on the set of solution candidates, and the natural gradient is used to update the distribution's parameters in the direction of higher expected fitness. We introduce a collection of techniques that address issues of convergence, robustness, sample complexity, computational complexity and sensitivity to hyperparameters. This paper explores a number of implementations of the NES family, ranging from general-purpose multi-variate normal distributions to heavytailed and separable distributions tailored towards global optimization and search in high dimensional spaces, respectively. Experimental results show best published performance on various standard benchmarks, as well as competitive performance on others.

Keywords: Natural Gradient, Stochastic Search, Evolution Strategies, Black-box Optimization, Sampling

1. Introduction

Many real world optimization problems are too difficult or complex to model directly. Therefore, they might best be solved in a 'black-box' manner, requiring no additional information on the objective function (i.e., the 'fitness' or 'cost') to be optimized besides fitness evaluations at certain points in parameter space. Problems that fall within this category are numerous, ranging from applications in health and science (Winter et al., 2005; Shir and B¨ack, 2007; Jebalia et al., 2007) to aeronautic design (Hasenj¨ager et al., 2005; Klockgether and Schwefel, 1970) and control (Hansen et al., 2009).

Numerous algorithms in this vein have been developed and applied in the past fifty years (see section 2 for an overview), in many cases providing good and even near-optimal solutions to hard tasks, which otherwise would have required domain experts to hand-craft solutions at substantial cost and often with worse results. The near-infeasibility of finding globally optimal solutions requires a fair amount of heuristics in black-box optimization algorithms, leading to a proliferation of sometimes highly performant yet unprincipled methods.

In this paper, we introduce Natural Evolution Strategies (NES), a novel black-box optimization framework which is derived from first principles and simultaneously provides state-of-the-art performance. The core idea is to maintain and iteratively update a search distribution from which search points are drawn and their fitness evaluated. The search distribution is then updated in the direction of higher expected fitness, using ascent along the natural gradient.

1.1 Continuous Black-Box Optimization

The problem of black-box optimization has spawned a wide variety of approaches. A first class of methods was inspired by classic optimization methods, including simplex methods such as Nelder-Mead (Nelder and Mead, 1965), as well as members of the quasi-Newton family of algorithms. Simulated annealing (Kirkpatrick et al., 1983), a popular method introduced in 1983, was inspired by thermodynamics, and is in fact an adaptation of the Metropolis-Hastings algorithm. More heuristic methods, such as those inspired by evolution, have been developed from the early 1950s on. These include the broad class of genetic algorithms (Holland, 1992; Goldberg, 1989), differential evolution (Storn and Price, 1997), estimation of distribution algorithms (Larra˜naga, 2002), particle swarm optimization (Kennedy and Eberhart, 2001), and the cross-entropy method (Rubinstein and Kroese, 2004).

Evolution strategies (ES), introduced by Ingo Rechenberg and Hans-Paul Schwefel in the 1960s and 1970s (Rechenberg and Eigen, 1973; Schwefel, 1977), were designed to cope with high-dimensional continuous-valued domains and have remained an active field of research for more than four decades (Beyer and Schwefel, 2002). ESs involve evaluating the fitness of real-valued genotypes in batch ('generation'), after which the best genotypes are kept, while the others are discarded. Survivors then procreate (by slightly mutating all of their genes) in order to produce the next batch of offspring. This process, after several generations, was shown to lead to reasonable to excellent results for many difficult optimization problems. The algorithm framework has been developed extensively over the years to include self-adaptation of the search parameters, and the representation of correlated mutations by the use of a full covariance matrix. This allowed the framework to capture interrelated dependencies by exploiting the covariances while 'mutating' individuals for the next generation. The culminating algorithm, covariance matrix adaptation evolution strategy (CMA-ES; Hansen and Ostermeier, 2001), has proven successful in numerous studies (e.g., Friedrichs and Igel, 2005; Muller et al., 2002; Shepherd et al., 2006).

While evolution strategies prove to be an effective framework for black-box optimization, their ad hoc procedures remain heuristic in nature. Thoroughly analyzing the actual dynamics of the procedure turns out to be difficult, the considerable efforts of various researchers notwithstanding (Beyer, 2001; Jebalia et al., 2010; Auger, 2005). In other words, ESs (including CMA-ES), while powerful, still lack a clear derivation from first principles.

1.2 The NES Family

Natural Evolution Strategies (NES) are well-grounded, evolution-strategy inspired blackbox optimization algorithms, which instead of maintaining a population of search points, iteratively update a search distribution. Like CMA-ES, they can be cast into the framework of evolution strategies.

The general procedure is as follows: the parameterized search distribution is used to produce a batch of search points, and the fitness function is evaluated at each such point. The distribution's parameters (which include strategy parameters) allow the algorithm to adaptively capture the (local) structure of the fitness function. For example, in the case of a Gaussian distribution, this comprises the mean and the covariance matrix. From the samples, NES estimates a search gradient on the parameters towards higher expected fitness. NES then performs a gradient ascent step along the natural gradient, a secondorder method which, unlike the plain gradient, renormalizes the update w.r.t. uncertainty. This step is crucial, since it prevents oscillations, premature convergence, and undesired effects stemming from a given parameterization.The entire process reiterates until a stopping criterion is met.

All members of the 'NES family' operate based on the same principles. They differ in the type of distribution and the gradient approximation method used. Different search spaces require different search distributions; for example, in low dimensionality it can be highly beneficial to model the full covariance matrix. In high dimensions, on the other hand, a more scalable alternative is to limit the covariance to the diagonal only. In addition, highly multi-modal search spaces may benefit from more heavy-tailed distributions (such as Cauchy, as opposed to the Gaussian). A last distinction arises between distributions where we can analytically compute the natural gradient, and more general distributions where we need to estimate it from samples.

1.3 Paper Outline

This paper builds upon and extends our previous work on Natural Evolution Strategies (Wierstra et al., 2008; Sun et al., 2009a,b; Glasmachers et al., 2010a,b; Schaul et al., 2011), and introduces novel performance- and robustness-enhancing techniques (in sections 3.3 and 3.4), as well as an extension to rotationally symmetric distributions (section 5.2) and a plethora of new experimental results.

The paper is structured as follows. Section 2 presents the general idea of search gradients as introduced by Wierstra et al. (2008), outlining how to perform stochastic search using parameterized distributions while doing gradient ascent towards higher expected fitness. The limitations of the plain gradient are exposed in section 2.2, and subsequently addressed by the introduction of the natural gradient (section 2.3), resulting in the canonical NES algorithm.

Section 3 then regroups a collection of techniques that enhance NES's performance and robustness. This includes fitness shaping (designed to render the algorithm invariant w.r.t. order-preserving fitness transformations (Wierstra et al., 2008), section 3.1), importance mixing (designed to recycle samples so as to reduce the number of required fitness evaluations (Sun et al., 2009b), section 3.2), adaptation sampling which is a novel technique

for adjusting learning rates online (section 3.3), and finally restart strategies, designed to improve success rates on multi-modal problems (section 3.4).

In section 4, we look in more depth at multivariate Gaussian search distributions, constituting the most common case. We show how to constrain the covariances to positive-definite matrices using the exponential map (section 4.1), and how to use a change of coordinates to reduce the computational complexity from $\mathcal{O}(d^6)$ to $\mathcal{O}(d^3)$ , with d being the search space dimension, resulting in the xNES algorithm (Glasmachers et al., 2010b, section 4.2).

Next, in section 5, we develop the breadth of the framework, motivating its usefulness and deriving a number of NES variants with different search distributions. First, we show how a restriction to diagonal parameterization permits the approach to scale to very high dimensions due to its linear complexity (Schaul et al., 2011; section 5.1). Second, we provide a novel formulation of NES for the whole class of multi-variate versions of distributions with rotation symmetries (section 5.2), including heavy-tailed distributions with infinite variance, such as the Cauchy distribution (Schaul et al., 2011, section 5.3).

The ensuing experimental investigations show the competitiveness of the approach on a broad range of benchmarks (section 6). The paper ends with a discussion on the effectiveness of the different techniques and types of distributions and an outlook towards future developments (section 7).

2. Search Gradients

The core idea of Natural Evolution Strategies is to use search gradients to update the parameters of the search distribution. We define the search gradient as the sampled gradient of expected fitness. The search distribution can be taken to be a multinormal distribution, but could in principle be any distribution for which we can find derivatives of its log-density w.r.t. its parameters. For example, useful distributions include Gaussian mixture models and the Cauchy distribution with its heavy tail.

If we use $\theta$ to denote the parameters of distribution $\pi(\mathbf{z} \mid \theta)$ and f(x) to denote the fitness function for samples $\mathbf{z}$ , we can write the expected fitness under the search distribution as

$$J(\theta) = \mathbb{E}_{\theta}[f(\mathbf{z})] = \int f(\mathbf{z}) \, \pi(\mathbf{z} \,|\, \theta) \, d\mathbf{z}. \tag{1}$$

The so-called 'log-likelihood trick' enables us to write

$$\nabla_{\theta} J(\theta) = \nabla_{\theta} \int f(\mathbf{z}) \ \pi(\mathbf{z} \mid \theta) \ d\mathbf{z}$$

$$= \int f(\mathbf{z}) \ \nabla_{\theta} \pi(\mathbf{z} \mid \theta) \ d\mathbf{z}$$

$$= \int f(\mathbf{z}) \ \nabla_{\theta} \pi(\mathbf{z} \mid \theta) \ \frac{\pi(\mathbf{z} \mid \theta)}{\pi(\mathbf{z} \mid \theta)} \ d\mathbf{z}$$

$$= \int \left[ f(\mathbf{z}) \ \nabla_{\theta} \log \pi(\mathbf{z} \mid \theta) \right] \pi(\mathbf{z} \mid \theta) \ d\mathbf{z}$$

$$= \mathbb{E}_{\theta} \left[ f(\mathbf{z}) \ \nabla_{\theta} \log \pi(\mathbf{z} \mid \theta) \right].$$

From this form we obtain the Monte Carlo estimate of the search gradient from samples $\mathbf{z}_1 \dots \mathbf{z}_{\lambda}$ as

$$\nabla_{\theta} J(\theta) \approx \frac{1}{\lambda} \sum_{k=1}^{\lambda} f(\mathbf{z}_k) \, \nabla_{\theta} \log \pi(\mathbf{z}_k \mid \theta), \tag{2}$$

where $\lambda$ is the population size. This gradient on expected fitness provides a search direction in the space of search distributions. A straightforward gradient ascent scheme can thus iteratively update the search distribution

$$\theta \leftarrow \theta + \eta \nabla_{\theta} J(\theta),$$

where $\eta$ is a learning rate parameter. Algorithm 1 provides the pseudocode for this very general approach to black-box optimization by using a search gradient on search distributions.

Algorithm 1: Canonical Search Gradient algorithm

\begin{aligned} & \textbf{input: } f, \, \theta_{init} \\ & \textbf{repeat} \\ & \middle| & \textbf{for } k = 1 \dots \lambda \ \textbf{do} \\ & \middle| & \text{draw sample } \mathbf{z}_k \sim \pi(\cdot|\theta) \\ & \text{evaluate the fitness } f(\mathbf{z}_k) \\ & \text{calculate log-derivatives } \nabla_{\theta} \log \pi(\mathbf{z}_k|\theta) \\ & \textbf{end} \\ & \nabla_{\theta} J \leftarrow \frac{1}{\lambda} \sum_{k=1}^{\lambda} \nabla_{\theta} \log \pi(\mathbf{z}_k|\theta) \cdot f(\mathbf{z}_k) \\ & \theta \leftarrow \theta + \eta \cdot \nabla_{\theta} J \\ & \textbf{until stopping criterion is met} \end{aligned}

Utilizing the search gradient in this framework is similar to evolution strategies in that it iteratively generates the fitnesses of batches of vector-valued samples – the ES's so-called candidate solutions. It is different however, in that it represents this 'population' as a parameterized distribution, and in the fact that it uses a search gradient to update the parameters of this distribution, which is computed using the fitnesses.

2.1 Search Gradient for Gaussian Distributions

In the case of the 'default' d-dimensional multi-variate normal distribution, we collect the parameters of the Gaussian, the mean $\boldsymbol{\mu} \in \mathbb{R}^d$ (candidate solution center) and the covariance matrix $\boldsymbol{\Sigma} \in \mathbb{R}^{d \times d}$ (mutation matrix), in a single concatenated vector $\boldsymbol{\theta} = \langle \boldsymbol{\mu}, \boldsymbol{\Sigma} \rangle$ . However, to sample efficiently from this distribution we need a square root of the covariance matrix (a matrix $\mathbf{A} \in \mathbb{R}^{d \times d}$ fulfilling $\mathbf{A}^{\top} \mathbf{A} = \boldsymbol{\Sigma}$ ). Then $\mathbf{z} = \boldsymbol{\mu} + \mathbf{A}^{\top} \mathbf{s}$ transforms a standard normal vector $\mathbf{s} \sim \mathcal{N}(0, \mathbb{I})$ into a sample $\mathbf{z} \sim \mathcal{N}(\boldsymbol{\mu}, \boldsymbol{\Sigma})$ . Here, $\mathbb{I} = \operatorname{diag}(1, \dots, 1) \in \mathbb{R}^{d \times d}$ denotes the

1. For any matrix $\mathbf{Q}$ , $\mathbf{Q}^{\top}$ denotes its transpose.

identity matrix. Let

$$\pi(\mathbf{z} \mid \theta) = \frac{1}{(\sqrt{2\pi})^d \det(\mathbf{A})} \cdot \exp\left(-\frac{1}{2} \|\mathbf{A}^{-1} \cdot (\mathbf{z} - \boldsymbol{\mu})\|^2\right)$$ $$= \frac{1}{\sqrt{(2\pi)^d \det(\boldsymbol{\Sigma})}} \cdot \exp\left(-\frac{1}{2} (\mathbf{z} - \boldsymbol{\mu})^\top \boldsymbol{\Sigma}^{-1} (\mathbf{z} - \boldsymbol{\mu})\right)$$

denote the density of the multinormal search distribution $\mathcal{N}(\mu, \Sigma)$ .

In order to calculate the derivatives of the log-likelihood with respect to individual elements of $\theta$ for this multinormal distribution, first note that

$$\log \pi (\mathbf{z}|\theta) = -\frac{d}{2}\log(2\pi) - \frac{1}{2}\log\det \mathbf{\Sigma} - \frac{1}{2}(\mathbf{z} - \boldsymbol{\mu})^{\top} \mathbf{\Sigma}^{-1}(\mathbf{z} - \boldsymbol{\mu}).$$

We will need its derivatives, that is, $\nabla_{\mu} \log \pi (\mathbf{z}|\theta)$ and $\nabla_{\Sigma} \log \pi (\mathbf{z}|\theta)$ . The first is trivially

$$\nabla_{\mu} \log \pi \left( \mathbf{z} | \theta \right) = \mathbf{\Sigma}^{-1} \left( \mathbf{z} - \boldsymbol{\mu} \right), \tag{3}$$

while the latter is

$$\nabla_{\Sigma} \log \pi \left( \mathbf{z} | \theta \right) = \frac{1}{2} \Sigma^{-1} \left( \mathbf{z} - \boldsymbol{\mu} \right) \left( \mathbf{z} - \boldsymbol{\mu} \right)^{\top} \Sigma^{-1} - \frac{1}{2} \Sigma^{-1}. \tag{4}$$

In order to preserve symmetry, to ensure non-negative variances and to keep the mutation matrix $\Sigma$ positive definite, $\Sigma$ needs to be constrained. One way to accomplish that is by representing $\Sigma$ as a product $\Sigma = \mathbf{A}^{\top} \mathbf{A}$ (for a more sophisticated solution to this issue, see section 4.1). Instead of using the log-derivatives on $\nabla_{\Sigma} \log \pi(\mathbf{z})$ directly, we then compute the derivatives with respect to $\mathbf{A}$ as

$$\nabla_{\mathbf{A}} \log \pi \left( \mathbf{z} \right) = \mathbf{A} \left[ \nabla_{\boldsymbol{\Sigma}} \log \pi \left( \mathbf{z} \right) + \nabla_{\boldsymbol{\Sigma}} \log \pi \left( \mathbf{z} \right)^{\top} \right].$$

Using these derivatives to calculate $\nabla_{\theta}J$ , we can then update parameters $\theta = \langle \boldsymbol{\mu}, \boldsymbol{\Sigma} = \mathbf{A}^{\top} \mathbf{A} \rangle$ as $\theta \leftarrow \theta + \eta \nabla_{\theta}J$ using learning rate $\eta$ . This produces a new center $\boldsymbol{\mu}$ for the search distribution, and simultaneously self-adapts its associated covariance matrix $\boldsymbol{\Sigma}$ . To summarize, we provide the pseudocode for following the search gradient in the case of a multinormal search distribution in Algorithm 2.

2.2 Limitations of Plain Search Gradients

As the attentive reader will have realized, there exists at least one major issue with applying the search gradient as-is in practice: It is impossible to precisely locate a (quadratic) optimum, even in the one-dimensional case. Let d = 1, $\theta = \langle \mu, \sigma \rangle$ , and samples $z \sim \mathcal{N}(\mu, \sigma)$ . Equations (3) and (4), the gradients on $\mu$ and $\sigma$ , become

$$\nabla_{\mu} J = \frac{z - \mu}{\sigma^2}$$

$$\nabla_{\sigma} J = \frac{(z - \mu)^2 - \sigma^2}{\sigma^3}$$

Algorithm 2: Search Gradient algorithm: Multinormal distribution

\begin{split} & \text{input: } f, \, \boldsymbol{\mu}_{init}, \boldsymbol{\Sigma}_{init} \\ & \text{repeat} \\ & & \text{for } k = 1 \dots \lambda \text{ do} \\ & & \text{draw sample } \mathbf{z}_k \sim \mathcal{N}(\boldsymbol{\mu}, \boldsymbol{\Sigma}) \\ & \text{evaluate the fitness } f(\mathbf{z}_k) \\ & \text{calculate log-derivatives:} \\ & & \nabla_{\boldsymbol{\mu}} \log \pi \left( \mathbf{z}_k | \boldsymbol{\theta} \right) = \boldsymbol{\Sigma}^{-1} \left( \mathbf{z}_k - \boldsymbol{\mu} \right) \\ & & \nabla_{\boldsymbol{\Sigma}} \log \pi \left( \mathbf{z}_k | \boldsymbol{\theta} \right) = -\frac{1}{2} \boldsymbol{\Sigma}^{-1} + \frac{1}{2} \boldsymbol{\Sigma}^{-1} \left( \mathbf{z}_k - \boldsymbol{\mu} \right) \left( \mathbf{z}_k - \boldsymbol{\mu} \right)^{\top} \boldsymbol{\Sigma}^{-1} \\ & \text{end} \\ & \nabla_{\boldsymbol{\mu}} J \leftarrow \frac{1}{\lambda} \sum_{k=1}^{\lambda} \nabla_{\boldsymbol{\mu}} \log \pi(\mathbf{z}_k | \boldsymbol{\theta}) \cdot f(\mathbf{z}_k) \\ & \nabla_{\boldsymbol{\Sigma}} J \leftarrow \frac{1}{\lambda} \sum_{k=1}^{\lambda} \nabla_{\boldsymbol{\Sigma}} \log \pi(\mathbf{z}_k | \boldsymbol{\theta}) \cdot f(\mathbf{z}_k) \\ & \boldsymbol{\mu} \leftarrow \boldsymbol{\mu} + \boldsymbol{\eta} \cdot \nabla_{\boldsymbol{\mu}} J \\ & \boldsymbol{\Sigma} \leftarrow \boldsymbol{\Sigma} + \boldsymbol{\eta} \cdot \nabla_{\boldsymbol{\Sigma}} J \\ & \text{until stopping criterion is met} \end{split}

and the updates, assuming simple hill-climbing (i.e. a population size $\lambda = 1$ ) read:

$$\mu \leftarrow \mu + \eta \frac{z - \mu}{\sigma^2}$$

$$\sigma \leftarrow \sigma + \eta \frac{(z - \mu)^2 - \sigma^2}{\sigma^3}.$$

For any objective function f that requires locating an (approximately) quadratic optimum with some degree of precision (e.g. $f(\mathbf{z}) = \mathbf{z}^2$ ), $\sigma$ must decrease, which in turn increases the variance of the updates, as $\Delta \mu \propto \frac{1}{\sigma}$ and $\Delta \sigma \propto \frac{1}{\sigma}$ for a typical sample z. In fact, the updates become increasingly unstable, the smaller $\sigma$ becomes, an effect which a reduced learning rate or an increased population size can only delay but not avoid. Figure 1 illustrates this effect. Conversely, whenever $\sigma \gg 1$ is large, the magnitude of a typical update is severely reduced.

Clearly, this update is not at all scale-invariant: Starting with $\sigma \gg 1$ makes all updates minuscule, whereas starting with $\sigma \ll 1$ makes the first update huge and therefore unstable.

We conjecture that this limitation constitutes one of the main reasons why search gradients have not been developed before: in isolation, the plain search gradient's performance is both unstable and unsatisfying, and it is only the application of natural gradients (introduced in section 2.3) which tackles these issues and renders search gradients into a viable optimization method.

2.3 Using the Natural Gradient

Instead of using the plain stochastic gradient for updates, NES follows the natural gradient. The natural gradient was first introduced by Amari in 1998, and has been shown to possess numerous advantages over the plain gradient (Amari, 1998; Amari and Douglas, 1998). In terms of mitigating the slow convergence of plain gradient ascent in optimization landscapes

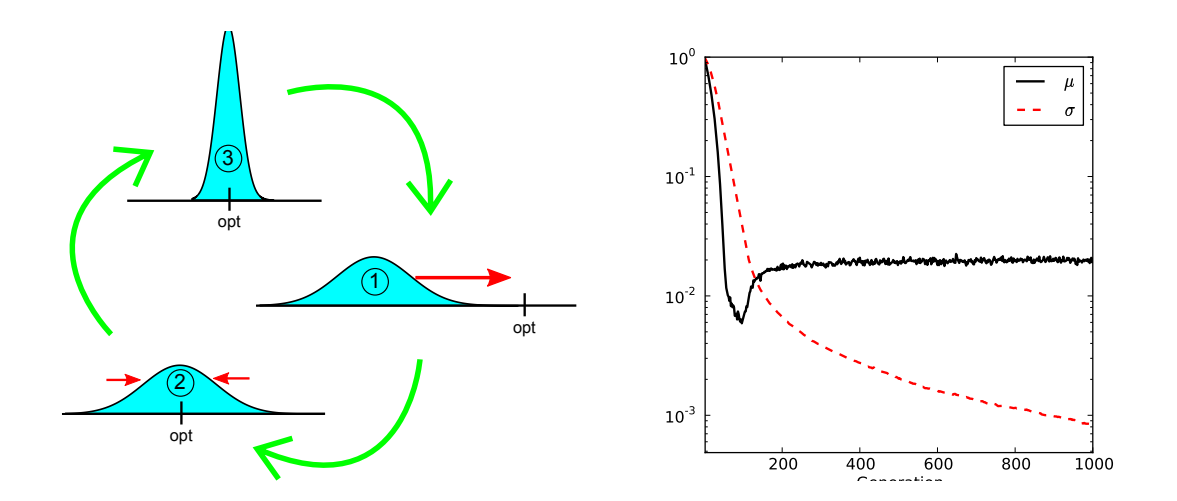

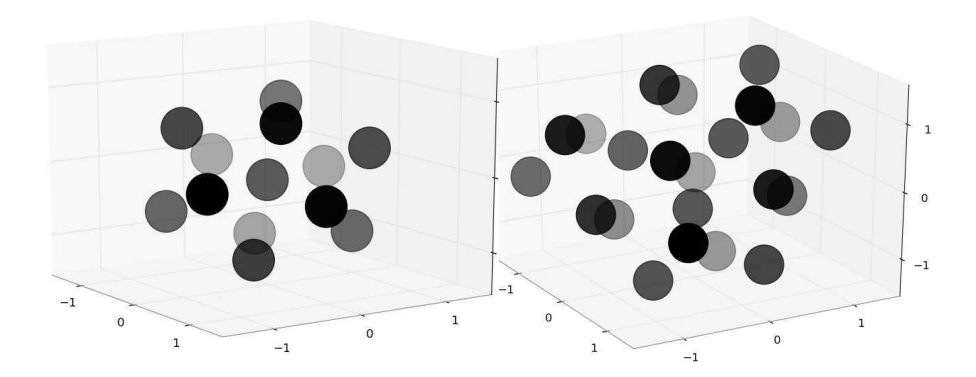

Figure 1: Left: Schematic illustration of how the search distribution adapts in the onedimensional case: from (1) to (2), µ is adjusted to make the distribution cover the optimum. From (2) to (3), σ is reduced to allow for a precise localization of the optimum. The step from (3) to (1) then is the problematic case, where a small σ induces a largely overshooting update, making the search start over again. Right: Progression of µ (black) and σ (red, dashed) when following the search gradient towards minimizing f(z) = z 2 , executing Algorithm 2. Plotted are median values over 1000 runs, with a small learning rate η = 0.01 and λ = 10, both of which mitigate the instability somewhat, but still show the failure to precisely locate the optimum (for which both µ and σ need to approach 0).

with ridges and plateaus, natural gradients are a more principled (and hyper-parameterfree) approach than, for example, the commonly used momentum heuristic.

The plain gradient ∇J simply follows the steepest ascent in the space of the actual parameters θ of the distribution. This means that for a given small step-size ε, following it will yield a new distribution with parameters chosen from the hypersphere of radius ǫ and center θ that maximizes J. In other words, the Euclidean distance in parameter space between subsequent distributions is fixed. Clearly, this makes the update dependent on the particular parameterization of the distribution, therefore a change of parameterization leads to different gradients and different updates. See also Figure 2 for an illustration of how this effectively renormalizes updates w.r.t. uncertainty.

The key idea of the natural gradient is to remove this dependence on the parameterization by relying on a more 'natural' measure of distance D(θ ′ ||θ) between probability distributions π (z|θ) and π (z|θ ′ ). One such natural distance measure between two probability distributions is the Kullback-Leibler divergence (Kullback and Leibler, 1951). The natural gradient can then be formalized as the solution to the constrained optimization

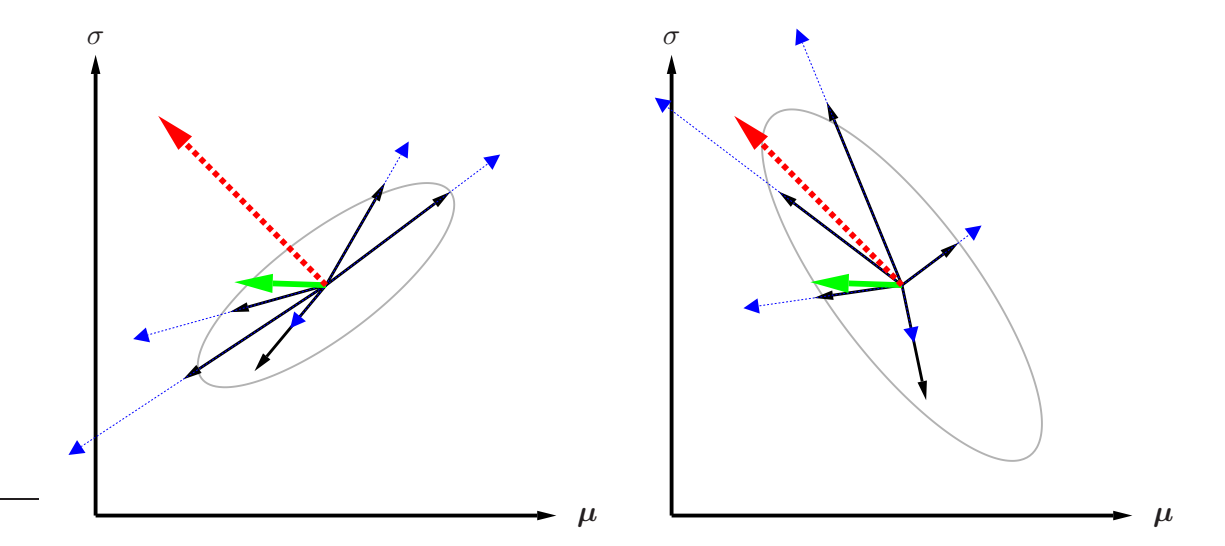

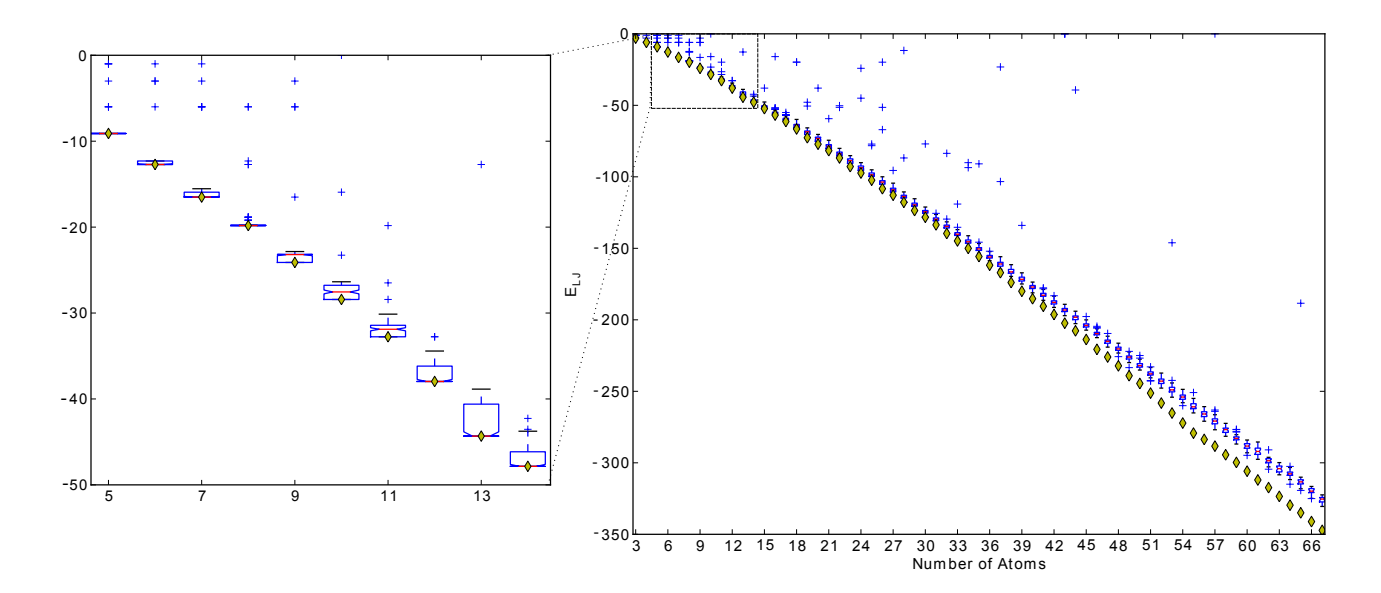

Figure 2: Illustration of plain versus natural gradient in parameter space. Consider two parameters, e.g. $\theta = (\mu, \sigma)$ , of the search distribution. In the plot on the left, the solid (black) arrows indicate the gradient samples $\nabla_{\theta} \log \pi(\mathbf{z} \mid \theta)$ , while the dotted (blue) arrows correspond to $f(\mathbf{z}) \cdot \nabla_{\theta} \log \pi(\mathbf{z} \mid \theta)$ , that is, the same gradient estimates, but scaled with fitness. Combining these, the bold (green) arrow indicates the (sampled) fitness gradient $\nabla_{\theta} J$ , while the bold dashed (red) arrow indicates the corresponding natural gradient $\tilde{\nabla}_{\theta} J$ .

Being random variables with expectation zero, the distribution of the black arrows is governed by their covariance, indicated by the gray ellipse. Notice that this covariance is a quantity in parameter space (where the $\theta$ reside), which is not to be confused with the covariance of the distribution in the search space (where the samples z reside).

In contrast, solid (black) arrows on the right represent $\tilde{\nabla}_{\theta} \log \pi(\mathbf{z} \mid \theta)$ , and dotted (blue) arrows indicate the natural gradient samples $f(\mathbf{z}) \cdot \tilde{\nabla}_{\theta} \log \pi(\mathbf{z} \mid \theta)$ , resulting in the natural gradient (dashed red).

The covariance of the solid arrows on the right hand side turns out to be the inverse of the covariance of the solid arrows on the left. This has the effect that when computing the natural gradient, directions with high variance (uncertainty) are penalized and thus shrunken, while components with low variance (high certainty) are boosted, since these components of the gradient samples deserve more trust. This makes the (dashed red) natural gradient a much more trustworthy update direction than the (green) plain gradient.

problem

$$\max_{\delta\theta} J(\theta + \delta\theta) \approx J(\theta) + \delta\theta^{\top} \nabla_{\theta} J,$$ s.t. $D(\theta + \delta\theta || \theta) = \varepsilon,$

where $J(\theta)$ is the expected fitness of equation (1), and $\varepsilon$ is a small increment size. Now, we have for $\delta\theta \to 0$ ,

$$D\left(\theta + \delta\theta || \theta\right) = \frac{1}{2} \delta\theta^{\top} \mathbf{F}\left(\theta\right) \delta\theta,$$

where

$$\mathbf{F} = \int \pi \left( \mathbf{z} | \theta \right) \nabla_{\theta} \log \pi \left( \mathbf{z} | \theta \right) \nabla_{\theta} \log \pi \left( \mathbf{z} | \theta \right)^{\top} d\mathbf{z},$$ $$= \mathbb{E} \left[ \nabla_{\theta} \log \pi \left( \mathbf{z} | \theta \right) \nabla_{\theta} \log \pi \left( \mathbf{z} | \theta \right)^{\top} \right]$$

is the Fisher information matrix of the given parametric family of search distributions. The solution to this can be found using a Lagrangian multiplier (Peters, 2007), yielding the necessary condition

$$\mathbf{F}\delta\theta = \beta\nabla_{\theta}J,\tag{5}$$

for some constant $\beta > 0$ . The direction of the natural gradient $\widetilde{\nabla}_{\theta} J$ is given by $\delta \theta$ thus defined. If F is invertible^{2}, the natural gradient amounts to

$$\widetilde{\nabla}_{\theta} J = \mathbf{F}^{-1} \nabla_{\theta} J(\theta).$$

The Fisher matrix can be estimated from samples, reusing the log-derivatives $\nabla_{\theta} \log \pi(\mathbf{z}|\theta)$ that we already computed for the gradient $\nabla_{\theta}J$ . Then, updating the parameters following the natural gradient instead of the steepest gradient leads us to the general formulation of NES, as shown in Algorithm 3.

3. Performance and Robustness Techniques

In the following we will present and introduce crucial techniques to improves NES's performance and robustness. Fitness shaping (Wierstra et al., 2008) is designed to make the algorithm invariant w.r.t. arbitrary yet order-preserving fitness transformations (section 3.1). Importance mixing (Sun et al., 2009b) is designed to recycle samples so as to reduce the number of required fitness evaluations, and is subsequently presented in section 3.2. Adaptation sampling, a novel technique for adjusting learning rates online, is introduced in section 3.3, and finally restart strategies, designed to improve success rates on multimodal problems, is presented in section 3.4.

3.1 Fitness Shaping

NES utilizes rank-based fitness shaping in order to render the algorithm invariant under monotonically increasing (i.e., rank preserving) transformations of the fitness function. For

2. Care has to be taken because the Fisher matrix estimate may not be (numerically) invertible even if the exact Fisher matrix is.

Algorithm 3: Canonical Natural Evolution Strategies

input: f, \theta_{init}

repeat

for k = 1 ... \lambda do

draw sample \mathbf{z}_k \sim \pi(\cdot|\theta)

evaluate the fitness f(\mathbf{z}_k)

calculate log-derivatives \nabla_{\theta} \log \pi(\mathbf{z}_k|\theta)

end

\nabla_{\theta} J \leftarrow \frac{1}{\lambda} \sum_{k=1}^{\lambda} \nabla_{\theta} \log \pi(\mathbf{z}_k|\theta) \cdot f(\mathbf{z}_k)

\mathbf{F} \leftarrow \frac{1}{\lambda} \sum_{k=1}^{\lambda} \nabla_{\theta} \log \pi(\mathbf{z}_k|\theta) \nabla_{\theta} \log \pi(\mathbf{z}_k|\theta)^{\top}

\theta \leftarrow \theta + \eta \cdot \mathbf{F}^{-1} \nabla_{\theta} J

until stopping criterion is met

this purpose, the fitness of the population is transformed into a set of utility values $u_1 \ge \cdots \ge u_{\lambda}$ . Let $\mathbf{z}_i$ denote the $i^{th}$ best individual (the $i^{th}$ individual in the population, sorted by fitness, such that $\mathbf{z}_1$ is the best and $\mathbf{z}_{\lambda}$ the worst individual). Replacing fitness with utility, the gradient estimate of equation (2) becomes, with slight abuse of notation,

$$\nabla_{\theta} J(\theta) = \sum_{k=1}^{\lambda} u_k \, \nabla_{\theta} \log \pi(\mathbf{z}_k \,|\, \theta). \tag{6}$$

To avoid entangling the utility-weightings with the learning rate, we require that $\sum_{k=1}^{\lambda} |u_k| = 1$ .

The choice of utility function can in fact be seen as a free parameter of the algorithm. Throughout this paper we will use the following

$$u_k = \frac{\max\left(0, \log(\frac{\lambda}{2} + 1) - \log(i)\right)}{\sum_{j=1}^{\lambda} \max\left(0, \log(\frac{\lambda}{2} + 1) - \log(j)\right)} - \frac{1}{\lambda},$$

which is directly related to the one employed by CMA-ES (Hansen and Ostermeier, 2001), for ease of comparison. In our experience, however, this choice has not been crucial to performance, as long as it is monotonous and based on ranks instead of raw fitness (e.g., a function which simply increases linearly with rank).

In addition to robustness, these utility values provide us with an elegant formalism to describe the (1+1) hill-climber version of the algorithm within the same framework, by using different utility values, depending on success (see section 4.5 later in this paper).

3.2 Importance Mixing

In each batch, we evaluate $\lambda$ new samples generated from search distribution $\pi(\mathbf{z}|\theta)$ . However, since small updates ensure that the KL divergence between consecutive search distributions is generally small, most new samples will fall in the high density area of the previous

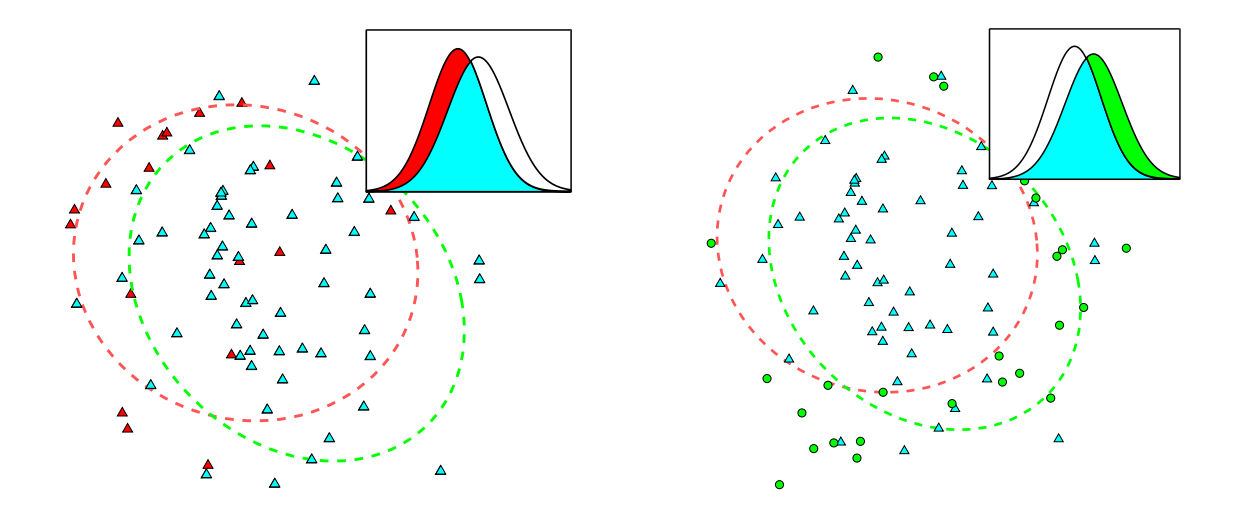

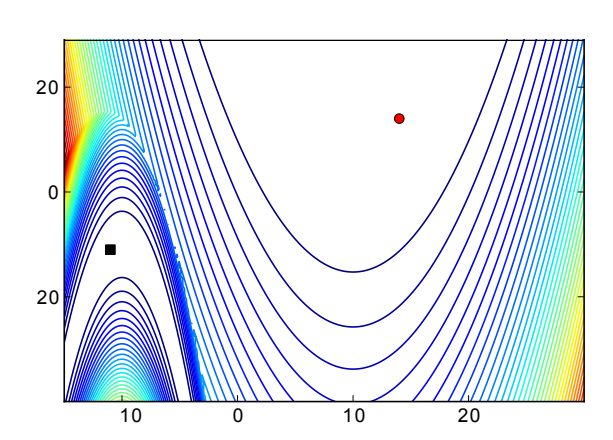

Figure 3: Illustration of importance mixing. Left: In the first step, old samples are eliminated (red triangles) according to (7), and the remaining samples (blue triangles) are reused. Right: In the second step, new samples (green circles) are generated according to (8).

search distribution $\pi(\mathbf{z}|\theta')$ . This leads to redundant fitness evaluations in that same area. We improve the efficiency with a new procedure called importance mixing, which aims at reusing fitness evaluations from the previous batch, while ensuring the updated batch conforms to the new search distribution.

Importance mixing works in two steps: In the first step, rejection sampling is performed on the previous batch, such that sample z is accepted with probability

$$\min \left\{ 1, (1 - \alpha) \frac{\pi \left( \mathbf{z} | \theta \right)}{\pi \left( \mathbf{z} | \theta' \right)} \right\}. \tag{7}$$

Here $\alpha \in [0, 1]$ is an algorithm hyperparameter called the minimal refresh rate. Let $\lambda_a$ be the number of samples accepted in the first step. In the second step, reverse rejection sampling is performed as follows: Generate samples from $\pi(\mathbf{z}|\theta)$ and accept $\mathbf{z}$ with probability

$$\max \left\{ \alpha, 1 - \frac{\pi \left( \mathbf{z} | \theta' \right)}{\pi \left( \mathbf{z} | \theta \right)} \right\} \tag{8}$$

until $\lambda - \lambda_a$ new samples are accepted. The $\lambda_a$ samples from the old batch and $\lambda - \lambda_a$ newly accepted samples together constitute the new batch. Figure 3 illustrates the procedure. Note that only the fitnesses of the newly accepted samples need to be evaluated. The advantage of using importance mixing is twofold: On the one hand, we reduce the number of fitness evaluations required in each batch, on the other hand, if we fix the number of newly evaluated fitnesses, then many more fitness evaluations can potentially be used to yield more reliable and accurate gradients.

The minimal refresh rate parameter $\alpha$ has two uses. First, it avoids too low an acceptance probability at the second step when $\pi\left(\mathbf{z}|\theta'\right)/\pi\left(\mathbf{z}|\theta\right)\simeq 1$ . And second, it permits specifying a lower bound on the expected proportion of newly evaluated samples $\rho=\mathbb{E}\left[\frac{\lambda-\lambda_a}{\lambda}\right]$ ,

namely ρ ≥ α, with the equality holding if and only if θ = θ ′ . In particular, if α = 1, all samples from the previous batch will be discarded, and if α = 0, ρ depends only on the distance between π (z|θ) and π (z|θ ′ ). Normally we set α to be a small positive number, e.g., in this paper we use α = 0.1 throughout.

It can be proven that the updated batch conforms to the search distribution π (z|θ). In the region where

$$(1 - \alpha) \frac{\pi(\mathbf{z}|\theta)}{\pi(\mathbf{z}|\theta')} \le 1,$$

the probability that a sample from previous batches appears in the new batch is

$$\pi\left(\mathbf{z}|\theta'\right)\cdot\left(1-\alpha\right)\frac{\pi\left(\mathbf{z}|\theta\right)}{\pi\left(\mathbf{z}|\theta'\right)}=\left(1-\alpha\right)\pi\left(\mathbf{z}|\theta\right).$$

The probability that a sample generated from the second step entering the batch is απ (z|θ), since

$$\max\left\{\alpha, 1 - \frac{\pi\left(\mathbf{z}|\theta'\right)}{\pi\left(\mathbf{z}|\theta\right)}\right\} = \alpha.$$

So the probability of a sample entering the batch is just p (z|θ) in that region. The same result holds also for the region where

$$(1-\alpha)\frac{\pi(\mathbf{z}|\theta)}{\pi(\mathbf{z}|\theta')} > 1.$$

When using importance mixing in the context of NES, this reduces the sensitivity to the hyperparameters, particularly the population size λ, as importance mixing implicitly adapts it to the situation by reusing some or many previously evaluated sample points.

3.3 Adaptation Sampling

To reduce the burden on determining appropriate hyper-parameters such as the learning rate, we develop a new self-adaptation or meta-learning technique (Schaul and Schmidhuber, 2010a), called adaptation sampling, that can automatically adapt the settings in a principled and economical way.

We model this situation as follows: Let πθ be a distribution with hyper-parameter θ and ψ(z) a quality measure for each sample z ∼ πθ. Our goal is to adapt θ such as to maximize the quality ψ. A straightforward method to achieve this, henceforth dubbed adaptation sampling, is to evaluate the quality of the samples z ′ drawn from πθ ′, where θ ′ 6= θ is a slight variation of θ, and then perform hill-climbing: Continue with the new θ ′ if the quality of its samples is significantly better (according, e.g., to a Mann-Whitney U-test), and revert to θ otherwise. Note that this proceeding is similar to the NES algorithm itself, but applied at a meta-level to algorithm parameters instead of the search distribution. The goal of this adaptation is to maximize the pace of progress over time, which is slightly different from maximizing the fitness function itself.

Virtual adaptation sampling is a lightweight alternative to adaptation sampling that is particularly useful whenever evaluating ψ is expensive :

• do importance sampling on the existing samples $\mathbf{z}_i$ , according to $\pi_{\theta'}$ :

$$w_i' = \frac{\pi(\mathbf{z}|\theta')}{\pi(\mathbf{z}|\theta)}$$

(this is always well-defined, because $\mathbf{z} \sim \pi_{\theta} \Rightarrow \pi(\mathbf{z}|\theta) > 0$ ).

• compare $\{\psi(\mathbf{z}_i)\}$ with weights $\{w_i = 1, \forall i\}$ and $\{\psi' = \psi(\mathbf{z}_i), \forall i\}$ with weights $\{w'_i\}$ , using a weighted version of the Mann-Whitney test, as introduced in Appendix A.

Beyond determining whether $\theta$ or $\theta'$ is better, choosing a non-trivial confidence level $\rho$ allows us to avoid parameter drift, as $\theta$ is only updated if the improvement is significant enough.

There is one caveat, however: the rate of parameter change needs to be adjusted such that the two resulting distributions are not too similar (otherwise the difference won't be statistically significant), but also not too different, (otherwise the weights w' will be too small and again the test will be inconclusive).

If, however, we explicitly desire large adaptation steps on $\theta$ , we have the possibility of interpolating between adaptation sampling and virtual adaptation sampling by drawing a few new samples from the distribution $\pi_{\theta'}$ (each assigned weight 1), where it is overlapping least with $\pi_{\theta}$ . The best way of achieving this is importance mixing, as introduced in Section 3.2, uses jointly with the reweighted existing samples.

For NES algorithms, the most important parameter to be adapted by adaptation sampling is the learning rate $\eta$ , starting with a conservative guess. This is because half-way into the search, after a local attractor has been singled out, it may well pay off to increase the learning rate in order to more quickly converge to it.

In order to produce variations $\eta'$ which can be judged using the above-mentioned Utest, we propose a procedure similar in spirit to Rprop-updates (Riedmiller and Braun, 1993; Igel and Husken, 2003), where the learning rates are either increased or decreased by a multiplicative constant whenever there is evidence that such a change will lead to better samples.

More concretely, when using adaptation sampling for NES we test for an improvement with the hypothetical distribution $\theta'$ generated with $\eta' = 1.5\eta$ . Each time the statistical test is successful with a confidence of at least $\rho = \frac{1}{2} - \frac{1}{3(d+1)}$ (this value was determined empirically) we increase the learning rate by a factor of $c^+ = 1.1$ , up to at most $\eta = 1$ . Otherwise we bring it closer to its initial value: $\eta \leftarrow 0.9\eta + 0.1\eta_{init}$ .

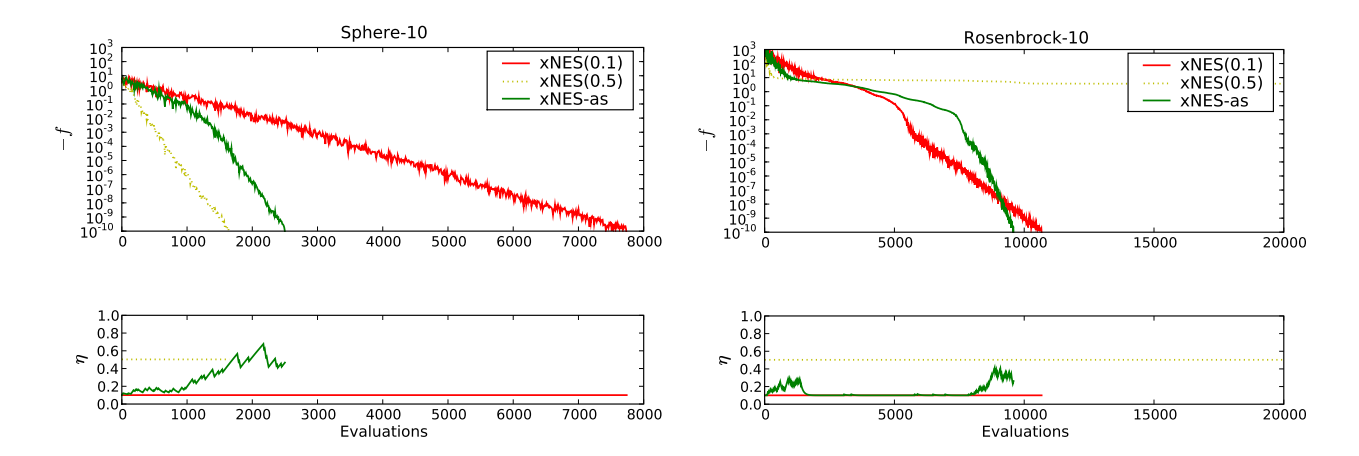

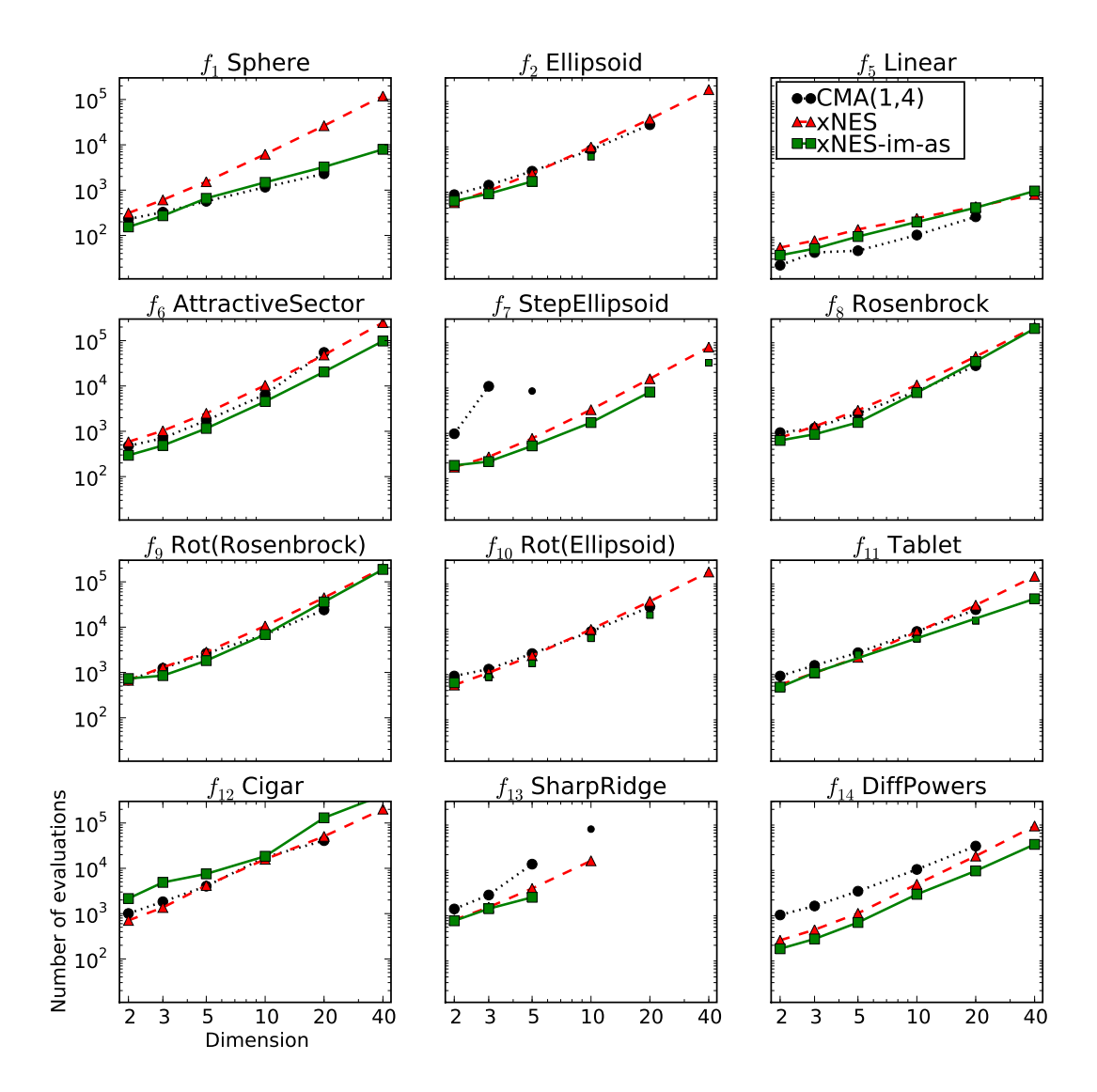

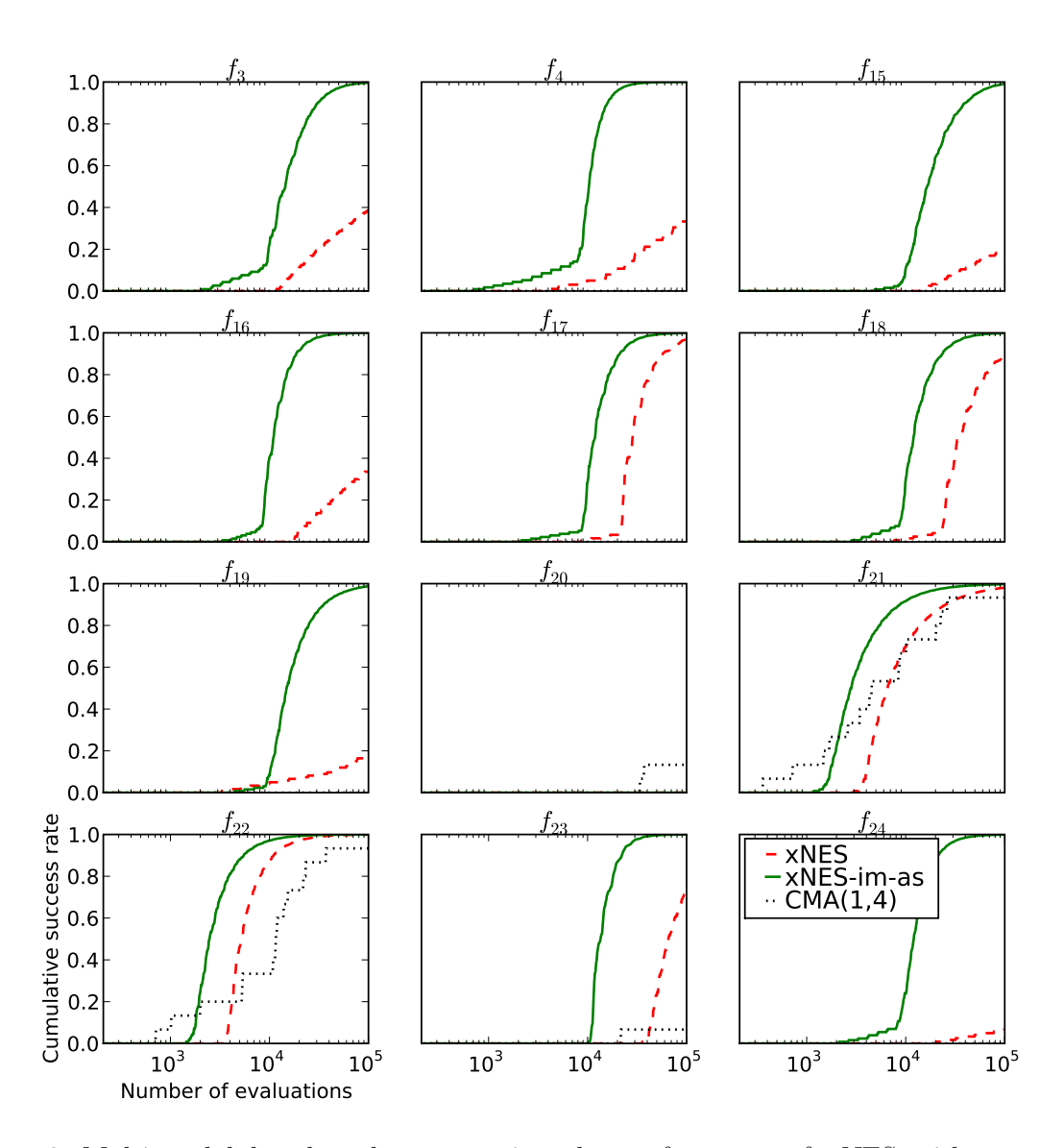

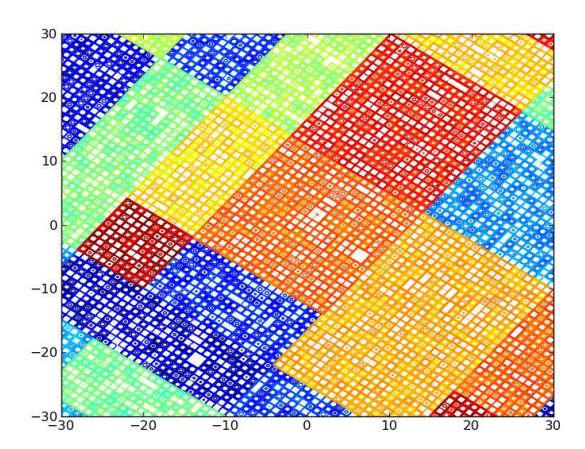

Figure 4 illustrates the effect of the virtual adaptation sampling strategy on two different 10-dimensional unimodal benchmark functions, the Sphere function $f_1$ and the Rosenbrock function $f_8$ (see section 6.2 for details). We find that, indeed, adaptation sampling boosts the learning rates to the appropriate high values when quick progress can be made (in the presence of an approximately quadratic optimum), but keeps them at carefully low values otherwise.

3.4 Restart Strategies

A simple but widespread method for mitigating the risk of finding only local optima in a strongly multi-modal scenario is to restart the optimization algorithm a number of times

Figure 4: Illustration of the effect of adaptation sampling. We show the increase in fitness during a NES run (above) and the corresponding learning rates (below) on two setups: 10-dimensional sphere function (left), and 10-dimensional Rosenbrock function (right). Plotted are three variants of xNES (algorithm 4): fixed default learning rate of $\eta=0.1$ (dashed, red) fixed large learning rate of $\eta=0.5$ (dotted, yellow), and an adaptive learning rate starting at $\eta=0.1$ (green). We see that for the (simple) Sphere function, it is advantageous to use a large learning rate, and adaptation sampling automatically finds that one. However, using the overly greedy updates of a large learning rate fails on harder problems (right). Here adaptation sampling really shines: it boosts the learning rate in the initial phase (entering the Rosenbrock valley), then quickly reduces it while the search needs to carefully navigate the bottom of the valley, and boosts it again at the end when it has located the optimum and merely needs to zoom in precisely.

with different initializations, or just with a different random seed. This is even more useful in the presence of parallel processing resources, in which case multiple runs are executed simultaneously.

In practice, where the parallel capacity is limited, we need to decide when to stop or suspend an ongoing optimization run and start or resume another one. In this section we provide one such restart strategy that takes those decisions. Inspired by recent work on practical universal search (Schaul and Schmidhuber, 2010b), this results in an effective use of resources independently of the problem.

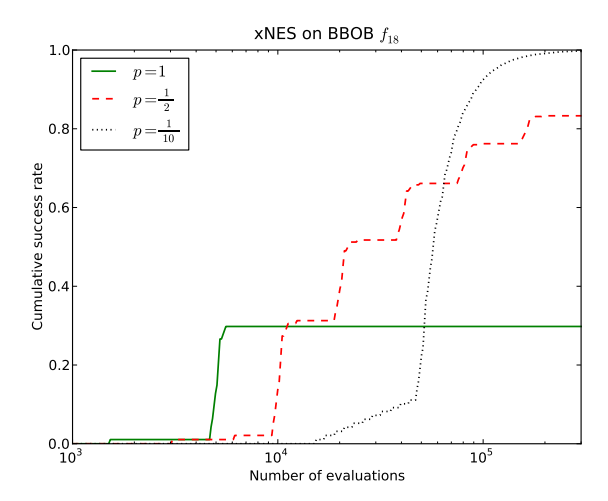

The strategy consists in reserving a fixed fraction p of the total time for the first run, and then subdividing the remaining time 1-p in the same way, recursively (i.e. $p(1-p)^{i-1}$ for the $i^{th}$ run). The recursive decomposition of the time budget stops when the assigned time-slice becomes smaller than the overhead of swapping out different runs. In this way, the number of runs with different seeds remains finite, but increases over time, as needed. Figure 5 illustrates the effect of the restart strategy, for different values of p, on the example of a multi-modal benchmark function $f_{18}$ (see section 6.2 for details), where most runs get caught in local optima. Whenever used in the rest of the paper, the fraction is $p = \frac{1}{5}$ .

Figure 5: Illustrating the effect of different restart strategies. Plotted is the cumulative empirical success probability, as a function of the total number of evaluations used. Using no restarts, corresponding to p=1 (green) is initially faster but unreliable, whereas $p=\frac{1}{10}$ (dotted black) reliably finds the optimum within 300000 evaluations, but at the cost of slowing down success for all runs by a factor 10. In-between these extremes, $p=\frac{1}{2}$ (broken line, red) trades off slowdown and reliability.

Let s(t) be the success probability of the underlying search algorithm at time t. Here, time is measured by the number of generations or fitness evaluations. Accordingly, let $S_p(t)$ be the boosted success probability of the restart scheme with parameter p. Approximately, that is, assuming continuous time, the probabilities are connected by the formula

$$S_p(t) = 1 - \prod_{i=1}^{\infty} \left[ 1 - s(p(1-p)^{i-1}t) \right].$$

Two qualitative properties of the restart strategy can be deduced from this formula, even if in discrete time the sum is finite $(i \leq \log_2(t))$ , and the times $p(1-p)^{i-1}t$ need to be rounded:

- If there exists $t_0$ such that $s(t_0) > 0$ then $\lim_{t \to \infty} S_p(t) = 1$ for all $p \in (0,1)$ .

- For sufficiently small t, we have $S_p(t) \leq s_p(t)$ .

This captures the expected effect that the restart strategy results in an initial slowdown, but eventually solves the problem reliably.

4. Techniques for Multinormal Distributions

In this section we will describe two crucial techniques to enhance performance of the NES algorithm as applied to multinormal distributions. First, the method of exponential parameterization is introduced, guaranteeing that the covariance matrix stays positive-definite.

Second, a novel method for changing the coordinate system into a "natural" one is laid out, making the algorithm computationally efficient.

4.1 Using Exponential Parameterization

Gradient steps on the covariance matrix $\Sigma$ result in a number of technical problems. When updating $\Sigma$ directly with the gradient step $\nabla_{\Sigma} J$ , we have to ensure that $\Sigma + \eta \nabla_{\Sigma} J$ remains a valid, positive definite covariance matrix. This is not guaranteed a priori, because the (natural) gradient $\nabla_{\Sigma} J$ may be any symmetric matrix. If we instead update a factor $\mathbf{A}$ of $\Sigma$ , it is at least ensured that $\mathbf{A}^{\top} \mathbf{A}$ is symmetric and positive semi-definite. But when shrinking an eigenvalue of $\mathbf{A}$ it may happen that the gradient step swaps the sign of the eigenvalue, resulting in undesired oscillations.

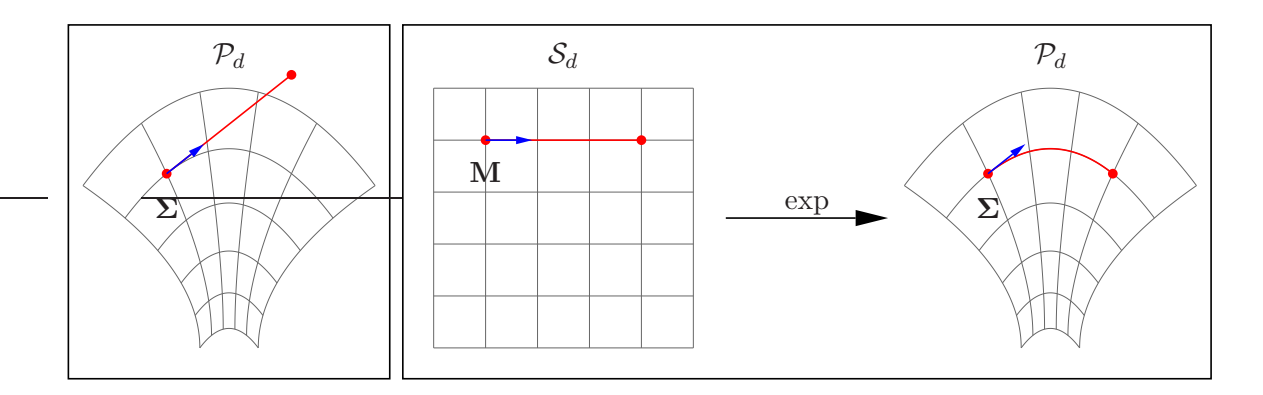

An elegant way to fix these problems is to represent the covariance matrix using the exponential map for symmetric matrices (see e.g., Glasmachers and Igel, 2005 for a related approach). Let

$$\mathcal{S}_d := \left\{ \mathbf{M} \in \mathbb{R}^{d imes d} \,\middle|\, \mathbf{M}^ op = \mathbf{M} ight\}$$

and

$$\mathcal{P}_d := \left\{ \mathbf{M} \in \mathcal{S}_d \,\middle|\, \mathbf{v}^\top \mathbf{M} \mathbf{v} > 0 \text{ for all } \mathbf{v} \in \mathbb{R}^d \setminus \{0\} \right\}$$

denote the vector space of symmetric and the (cone) manifold of symmetric positive definite matrices, respectively. Then the exponential map

$$\exp: \mathcal{S}_d \to \mathcal{P}_d , \qquad \mathbf{M} \mapsto \sum_{n=0}^{\infty} \frac{\mathbf{M}^n}{n!} \tag{9} $$

is a diffeomorphism: The map is bijective, and both exp as well as its inverse map $\log : \mathcal{P}_d \to \mathcal{S}_d$ are smooth. The mapping can be computed in cubic time, for example by decomposing the matrix $\mathbf{M} = \mathbf{U}\mathbf{D}\mathbf{U}^{\top}$ into orthogonal $\mathbf{U}$ (eigen-vectors) and diagonal $\mathbf{D}$ (eigen-values), taking the exponential of $\mathbf{D}$ (which amounts to taking the element-wise exponentials of the diagonal entries), and composing everything back^{3} as $\exp(\mathbf{M}) = \mathbf{U} \exp(\mathbf{D})\mathbf{U}^{\top}$ .

Thus, we can represent the covariance matrix $\Sigma \in \mathcal{P}_d$ as $\exp(\mathbf{M})$ with $\mathbf{M} \in \mathcal{S}_d$ . The resulting gradient update for $\mathbf{M}$ has two important properties: First, because $\mathcal{S}_d$ is a vector space, any update automatically corresponds to a valid covariance matrix.4 Second, the update of $\mathbf{M}$ makes the gradient invariant w.r.t. linear transformations of the search space $\mathbb{R}^d$ . This follows from an information geometric perspective, viewing $\mathcal{P}_d$ as the Riemannian parameter manifold equipped with the Fisher information metric. The invariance property is a direct consequence of the Cartan-Hadamard theorem (Cartan, 1928). See also Figure 6 for an illustration.

However, the exponential parameterization considerably complicates the computation of the Fisher information matrix $\mathbf{F}$ , which now involves partial derivatives of the matrix exponential (9). This can be done in cubic time per partial derivative according

3. The same computation works for the logarithm, and thus also for powers $\mathcal{P}_d \to \mathcal{P}_d$ , $\mathbf{M} \mapsto \mathbf{M}^c = \exp(c \cdot \log(\mathbf{M}))$ for all $c \in \mathbb{R}$ , for example for the (unique) square root (c = 1/2).

4. The tangent bundle $T\mathcal{P}_d$ of the manifold $\mathcal{P}_d$ is isomorphic to $\mathcal{P}_d \times \mathcal{S}_d$ and globally trivial. Thus, arbitrarily large gradient steps are meaningful in this representation.

Figure 6: Left: updating a covariance matrix $\Sigma$ directly can end up outside the manifold of symmetric positive-definite matrices $\mathcal{P}_d$ . Right: first performing the update on $\mathbf{M} = \frac{1}{2} \log(\Sigma)$ in $\mathcal{S}_d$ and then mapping back the result into the original space $\mathcal{P}_d$ using the exponential map is both safe (guaranteed to stay in the manifold) and straight (the update follows a geodesic).

to (Najfeld and Havel, 1994), resulting in an unacceptable complexity of $\mathcal{O}(d^7)$ for the computation of the Fisher matrix.

4.2 Using Natural Coordinates

In this section we describe a technique that allows us to avoid the computation of the Fisher information matrix altogether, for some specific but common classes of distributions. The idea is to iteratively change the coordinate system in such a way that it becomes possible to follow the natural gradient without any costly inverses of the Fisher matrix (actually, without even constructing it explicitly). We introduce the technique for the simplest case of multinormal search distributions, and in section 5.2, we generalize it to the whole class of distributions that they are applicable to (namely, rotationally-symmetric distributions).

Instead of using the 'global' coordinates $\Sigma = \exp(\mathbf{M})$ for the covariance matrix, we linearly transform the coordinate system in each iteration to a coordinate system in which the current search distribution is the standard normal distribution with zero mean and unit covariance. Let the current search distribution be given by $(\boldsymbol{\mu}, \mathbf{A}) \in \mathbb{R}^d \times \mathcal{P}_d$ with $\mathbf{A}^{\top} \mathbf{A} = \Sigma$ . We use the tangent space $T_{(\boldsymbol{\mu}, \mathbf{A})}(\mathbb{R}^d \times \mathcal{P}_d)$ of the parameter manifold $\mathbb{R}^d \times \mathcal{P}_d$ , which is isomorphic to the vector space $\mathbb{R}^d \times \mathcal{S}_d$ , to represent the updated search distribution as

$$(\boldsymbol{\delta}, \mathbf{M}) \mapsto (\boldsymbol{\mu}_{\text{new}}, \mathbf{A}_{\text{new}}) = \left(\boldsymbol{\mu} + \mathbf{A}^{\top} \boldsymbol{\delta}, \mathbf{A} \exp\left(\frac{1}{2}\mathbf{M}\right)\right). \tag{10} $$

This coordinate system is natural in the sense that the Fisher matrix w.r.t. an orthonormal basis of $(\boldsymbol{\delta}, \mathbf{M})$ is the identity matrix. The current search distribution $\mathcal{N}(\boldsymbol{\mu}, \mathbf{A}^{\top} \mathbf{A})$ is encoded as

$$\pi(\mathbf{z}|\boldsymbol{\delta}, \mathbf{M}) = \mathcal{N}\left(\boldsymbol{\mu} + \mathbf{A}^{\top}\boldsymbol{\delta}, \ \mathbf{A}^{\top}\exp(\mathbf{M})\mathbf{A}\right),$$

where at each step we change the coordinates such that $(\boldsymbol{\delta}, \mathbf{M}) = (0, 0)$ . In this case, it is guaranteed that for the variables $(\boldsymbol{\delta}, \mathbf{M})$ the plain gradient and the natural gradient coincide $(\mathbf{F} = \mathbb{I})$ . Consequently the computation of the natural gradient costs $\mathcal{O}(d^3)$ operations.

In the new coordinate system we produce standard normal samples $\mathbf{s} \sim \mathcal{N}(0, \mathbb{I})$ which are then mapped back into the original coordinates $\mathbf{z} = \boldsymbol{\mu} + \mathbf{A}^{\top} \cdot \mathbf{s}$ . The log-density becomes

$$\log \pi(\mathbf{z} \mid \boldsymbol{\delta}, \mathbf{M}) = -\frac{d}{2} \log(2\pi) - \log \left( \det(\mathbf{A}) \right) - \frac{1}{2} \operatorname{tr}(\mathbf{M})$$ $$-\frac{1}{2} \left\| \exp \left( -\frac{1}{2} \mathbf{M} \right) \mathbf{A}^{-\top} \cdot (\mathbf{z} - (\boldsymbol{\mu} + \mathbf{A}^{\top} \boldsymbol{\delta})) \right\|^{2},$$

and thus the log-derivatives (at $\delta = 0$ , $\mathbf{M} = 0$ ) take the following, surprisingly simple forms:

$$\nabla_{\boldsymbol{\delta}}|_{\boldsymbol{\delta}=0} \log \pi(\mathbf{z} \mid \mathbf{M} = 0, \boldsymbol{\delta}) = \nabla_{\boldsymbol{\delta}}|_{\boldsymbol{\delta}=0} \left[ -\frac{1}{2} \left\| \mathbf{A}^{-\top} \cdot (\mathbf{z} - (\boldsymbol{\mu} + \mathbf{A}^{\top} \boldsymbol{\delta})) \right\|^{2} \right]$$

$$= -\frac{1}{2} \left[ -2 \cdot \mathbf{A}^{-\top} \mathbf{A}^{\top} \cdot \mathbf{A}^{-\top} \cdot (\mathbf{z} - (\boldsymbol{\mu} + \mathbf{A}^{\top} \boldsymbol{\delta})) \right] \Big|_{\boldsymbol{\delta}=0}$$

$$= \mathbf{A}^{-\top} (\mathbf{z} - \boldsymbol{\mu})$$

$$= \mathbf{s}$$ \tag{11} $$$$ $$\nabla_{\mathbf{M}}|{\mathbf{M}=0} \log \pi(\mathbf{z} \mid \boldsymbol{\delta} = 0, \mathbf{M}) = -\frac{1}{2} \nabla{\mathbf{M}}|{\mathbf{M}=0} \left[ \operatorname{tr}(\mathbf{M}) + \left| \exp\left(-\frac{1}{2}\mathbf{M}\right) \mathbf{A}^{-\top} (\mathbf{z} - \boldsymbol{\mu}) \right|^{2} \right]$$ $$= -\frac{1}{2} \left[ \mathbb{I} + 2 \cdot \left( -\frac{1}{2} \right) \cdot \left[ \mathbf{A}^{-\top} (\mathbf{z} - \boldsymbol{\mu}) \right] \cdot \exp\left( -\frac{1}{2}\mathbf{M} \right) \cdot \left[ \mathbf{A}^{-\top} (\mathbf{z} - \boldsymbol{\mu}) \right]^{\top} \right] \Big|{\mathbf{M}=0}$$ $$= -\frac{1}{2} \left[ \mathbb{I} - \left[ \mathbf{A}^{-\top} (\mathbf{z} - \boldsymbol{\mu}) \right] \cdot \mathbb{I} \cdot \left[ \mathbf{A}^{-\top} (\mathbf{z} - \boldsymbol{\mu}) \right]^{\top} \right]$$ $$= \frac{1}{2} (\mathbf{s}\mathbf{s}^{\top} - \mathbb{I})$$ \tag{12} $$$$ These results give us the updates in the natural coordinate system <span id="page-18-2"></span><span id="page-18-1"></span> $$\nabla_{\delta} J = \sum_{k=1}^{\lambda} f(\mathbf{z}k) \cdot \mathbf{s}_k \tag{13}$$ <span id="page-18-0"></span> $$\nabla{\mathbf{M}} J = \sum_{k=1}^{\lambda} f(\mathbf{z}k) \cdot (\mathbf{s}_k \mathbf{s}_k^{\top} - \mathbb{I}) \tag{14} $$ which are then mapped back onto $(\mu, \mathbf{A})$ using equation (10): $$\begin{split} \boldsymbol{\mu}{\text{new}} \leftarrow \boldsymbol{\mu} + \mathbf{A}^{\top} \boldsymbol{\delta} &= \boldsymbol{\mu} + \eta \mathbf{A}^{\top} \nabla_{\boldsymbol{\delta}} J \ &= \boldsymbol{\mu} + \eta \mathbf{A}^{\top} \left( \frac{1}{\lambda} \sum_{k=1}^{\lambda} \nabla_{\boldsymbol{\delta}} \log \pi \left( \mathbf{z}{k} | \boldsymbol{\theta} \right) \cdot f(\mathbf{z}{k}) \right) \ &= \boldsymbol{\mu} + \frac{\eta}{\lambda} \mathbf{A}^{\top} \left( \sum_{k=1}^{\lambda} f(\mathbf{z}{k}) \cdot \mathbf{s}{k} \right) \ \mathbf{A}{\text{new}} \leftarrow \mathbf{A} \cdot \exp \left( \frac{1}{2} \mathbf{M} \right) &= \mathbf{A} \cdot \exp \left( \eta \frac{1}{2} \nabla{\mathbf{M}} J \right) \ &= \mathbf{A} \cdot \exp \left( \frac{\eta}{2} \cdot \frac{1}{\lambda} \sum_{k=1}^{\lambda} \nabla_{\mathbf{M}} \log \pi \left( \mathbf{z}{k} | \boldsymbol{\theta} \right) \cdot f(\mathbf{z}{k}) \right) \ &= \mathbf{A} \cdot \exp \left( \frac{\eta}{4\lambda} \sum_{k=1}^{\lambda} f(\mathbf{z}{k}) \cdot (\mathbf{s}{k} \mathbf{s}{k}^{\top} - \mathbb{I}) \right) \end{split}$$ #### <span id="page-19-3"></span>4.3 Orthogonal Decomposition of Multinormal Parameter Space We decompose the parameter vector space $(\boldsymbol{\delta}, \mathbf{M}) \in \mathbb{R}^d \times \mathcal{S}_d$ into the product $$\mathbb{R}^d \times \mathcal{S}_d = \underbrace{\mathbb{R}^d}{(\delta)} \times \underbrace{\mathcal{S}d^{\parallel}}{(\sigma)} \times \underbrace{\mathcal{S}d^{\perp}}{(\mathbf{B})} , \qquad (15)$$ of orthogonal subspaces. The one-dimensional space $\mathcal{S}_d^{\parallel} = \{\lambda \cdot \mathbb{I} \mid \lambda \in \mathbb{R}\}$ is spanned by the identity matrix $\mathbb{I}$ , and $\mathcal{S}_d^{\perp} = \{\mathbf{M} \in \mathcal{S}_d \mid \operatorname{tr}(\mathbf{M}) = 0\}$ denotes its orthogonal complement in $\mathcal{S}_d$ . The different components have roles with clear interpretations: The $(\delta)$ -component $\nabla_{\delta}J$ describes the update of the center of the search distribution, the $(\sigma)$ -component with value $\nabla_{\sigma}J \cdot \mathbb{I}$ for $\nabla_{\sigma}J = \operatorname{tr}(\nabla_{\mathbf{M}}J)/d$ has the role of a step size update, which becomes clear from the identity $\det(\exp(\mathbf{M})) = \exp(\operatorname{tr}(\mathbf{M}))$ , and the $(\mathbf{B})$ -component $\nabla_{\mathbf{B}}J = \nabla_{\mathbf{M}}J - \nabla_{\sigma}J$ describes the update of the transformation matrix, normalized to unit determinant, which can thus be attributed to the shape of the search distribution. This decomposition is canonical in being the finest decomposition such that updates of its components result in invariance of the search algorithm under linear transformations of the search space. On these subspaces we introduce independent learning rates $\eta_{\delta}$ , $\eta_{\sigma}$ , and $\eta_{\mathbf{B}}$ , respectively. For simplicity we also split the transformation matrix $\mathbf{A} = \sigma \cdot \mathbf{B}$ into the step size $\sigma \in \mathbb{R}^+$ and the normalized transformation matrix $\mathbf{B}$ with $\det(\mathbf{B}) = 1$ . Then the resulting update is $$\mu_{\text{new}} = \mu + \eta_{\delta} \cdot \nabla_{\delta} J = \mu + \eta_{\delta} \cdot \sum_{k=1}^{\lambda} f(\mathbf{z}k) \cdot \mathbf{s}_k \tag{16} $$ <span id="page-19-2"></span><span id="page-19-0"></span> $$\sigma{\text{new}} = \sigma \cdot \exp\left(\frac{\eta_{\sigma}}{2} \cdot \nabla_{\sigma}\right) = \sigma \cdot \exp\left(\frac{\eta_{\sigma}}{2} \cdot \frac{\operatorname{tr}(\nabla_{\mathbf{M}}J)}{d}\right) \tag{17} $$ <span id="page-19-1"></span> $$\mathbf{B}{\text{new}} = \mathbf{B} \cdot \exp\left(\frac{\eta{\mathbf{B}}}{2} \cdot \nabla_{\mathbf{B}} J\right) = \mathbf{B} \cdot \exp\left(\frac{\eta_{\mathbf{B}}}{2} \cdot \left(\nabla_{\mathbf{M}} J - \frac{\operatorname{tr}(\nabla_{\mathbf{M}} J)}{d} \cdot \mathbb{I}\right)\right), \tag{18}$$ with $\nabla_{\mathbf{M}}J$ from equation (14). In case of $\eta_{\sigma} = \eta_{\mathbf{B}}$ , in this case referred to as $\eta_{\mathbf{A}}$ , the updates (17) and (18) simplify to <span id="page-20-1"></span> $$\mathbf{A}{\text{new}} = \mathbf{A} \cdot \exp\left(\frac{\eta{\mathbf{A}}}{2} \cdot \nabla_{\mathbf{M}}\right)$$ $$= \mathbf{A} \cdot \exp\left(\frac{\eta_{\mathbf{A}}}{2} \cdot \sum_{k=1}^{\lambda} f(\mathbf{z}{k}) \cdot (\mathbf{s}{k} \mathbf{s}_{k}^{\top} - \mathbb{I})\right). \tag{19} $$

The resulting algorithm is called exponential NES (xNES), and shown in Algorithm 4. We also give the pseudocode for its hill-climber variant (see also section 4.5).

Updating the search distribution in the natural coordinate system is an alternative to the exponential parameterization (section 4.1) for making the algorithm invariant under linear transformations of the search space, which is then achieved in a direct and constructive way.

Algorithm 4: Exponential Natural Evolution Strategies (xNES), for multinormal distributions

input: f, \mu_{init}, \Sigma_{init} = \mathbf{A}^{\top} \mathbf{A}

initialize \sigma \leftarrow \sqrt[d]{\det(\mathbf{A})|}

\mathbf{B} \leftarrow \mathbf{A}/\sigma

repeat

for k = 1 \dots \lambda do

draw sample \mathbf{s}_k \sim \mathcal{N}(0, \mathbb{I})

\mathbf{z}_k \leftarrow \boldsymbol{\mu} + \sigma \mathbf{B}^{\top} \mathbf{s}_k

evaluate the fitness f(\mathbf{z}_k)

end

sort \{(\mathbf{s}_k, \mathbf{z}_k)\} with respect to f(\mathbf{z}_k) and compute utilities u_k

compute gradients \nabla_{\delta} J \leftarrow \sum_{k=1}^{\lambda} u_k \cdot \mathbf{s}_k \quad \nabla_{\mathbf{M}} J \leftarrow \sum_{k=1}^{\lambda} u_k \cdot (\mathbf{s}_k \mathbf{s}_k^{\top} - \mathbb{I})

\nabla_{\sigma} J \leftarrow \operatorname{tr}(\nabla_{\mathbf{M}} J)/d \quad \nabla_{\mathbf{B}} J \leftarrow \nabla_{\mathbf{M}} J - \nabla_{\sigma} J \cdot \mathbb{I}

\mu \leftarrow \mu + \eta_{\delta} \cdot \sigma \mathbf{B} \cdot \nabla_{\delta} J

update parameters \sigma \leftarrow \sigma \cdot \exp(\eta_{\sigma}/2 \cdot \nabla_{\sigma} J)

\mathbf{B} \leftarrow \mathbf{B} \cdot \exp(\eta_{\mathbf{B}}/2 \cdot \nabla_{\mathbf{B}} J)

until stopping criterion is met

4.4 Connection to CMA-ES.

It has been noticed independently by (Glasmachers et al., 2010a) and shortly afterwards by (Akimoto et al., 2010) that the natural gradient updates of xNES and the strategy updates of the CMA-ES algorithm (Hansen and Ostermeier, 2001) are closely connected. However, since xNES does not feature evolution paths, this connection is restricted to the so-called rank- $\mu$ -update (in the terminology of this study, rank- $\lambda$ -update) of CMA-ES.

Algorithm 5: (1+1)-xNES

input: f, \mu_{init}, \Sigma_{init} = \mathbf{A}^{\top} \mathbf{A}

f_{max} \leftarrow -\infty

repeat

\begin{vmatrix}

\text{draw sample } \mathbf{s} \sim \mathcal{N}(0, \mathbb{I}) \\

\text{evaluate the fitness } f(\mathbf{z} = \mathbf{A}^{\top} \mathbf{s} + \boldsymbol{\mu}) \\

\text{calculate log-derivatives } \nabla_{\mathbf{M}} \log \pi (\mathbf{z} | \boldsymbol{\theta}) = \frac{1}{2} (\mathbf{s} \mathbf{s}^{\top} - \mathbb{I}) \\

(\mathbf{z}_{1}, \mathbf{z}_{2}) \leftarrow (\boldsymbol{\mu}, \mathbf{z}) \\

\text{if } f_{max} < f(\mathbf{z}) \text{ then} \\

| f_{max} \leftarrow f(\mathbf{z}) \\

\mathbf{u} \leftarrow (-4, 1) \\

| \text{update mean } \boldsymbol{\mu} \leftarrow \mathbf{z} \\

\text{else} \\

| \mathbf{u} \leftarrow (\frac{4}{5}, 0) \\

\text{end} \\

\nabla_{\mathbf{M}} J \leftarrow \frac{1}{2} \sum_{k=1}^{2} \nabla_{\mathbf{M}} \log \pi (\mathbf{z}_{k} | \boldsymbol{\theta}) \cdot u_{k} = -\frac{u_{1}}{2} \mathbb{I} + \frac{u_{2}}{4} (\mathbf{s} \mathbf{s}^{\top} - \mathbb{I}) \\

\mathbf{A} \leftarrow \mathbf{A} \cdot \exp \left(\frac{1}{2} \eta \nabla_{\mathbf{M}} J\right) \\

\text{until stopping criterion is met}

First of all observe that xNES and CMA-ES share the same invariance properties. But more interestingly, although derived from different heuristics, their updates turn out to be nearly equivalent. A closer investigation of this equivalence promises synergies and new perspectives on the working principles of both algorithms. In particular, this insight shows that CMA-ES can be explained as a natural gradient algorithm, which may allow for a more thorough analysis of its updates, and xNES can profit from CMA-ES's mature settings of algorithms parameters, such as search space dimension-dependent population sizes, learning rates and utility values.

Both xNES and CMA-ES parameterize the search distribution with three functionally different parameters for mean, scale, and shape of the distribution. xNES uses the parameters $\mu$ , $\sigma$ , and $\mathbf{B}$ , while the covariance matrix is represented as $\sigma^2 \cdot \mathbf{C}$ in CMA-ES, where $\mathbf{C}$ can by any positive definite symmetric matrix. Thus, the representation of the scale of the search distribution is shared among $\sigma$ and $\mathbf{C}$ in CMA-ES, and the role of the additional parameter $\sigma$ is to allow for an adaptation of the step size on a faster time scale than the full covariance update. In contrast, the NES updates of scale and shape parameters $\sigma$ and $\mathbf{B}$ are properly decoupled.

Let us start with the updates of the center parameter $\mu$ . The update (16) is very similar to the update of the center of the search distribution in CMA-ES, see (Hansen and Ostermeier, 2001). The utility function exactly takes the role of the weights in CMA-ES, which assumes a fixed learning rate of one.

For the covariance matrix, the situation is more complicated. From equation (19) we deduce the update rule

$$\begin{split} \boldsymbol{\Sigma}_{\text{new}} = & (\mathbf{A}_{\text{new}})^{\top} \cdot \mathbf{A}_{\text{new}} \\ = & \mathbf{A}^{\top} \cdot \exp \left( \eta_{\boldsymbol{\Sigma}} \cdot \sum_{k=1}^{\lambda} u_k \left( \mathbf{s}_k \mathbf{s}_k^{\top} - \mathbb{I} \right) \right) \cdot \mathbf{A} \end{split}$$

for the covariance matrix, with learning rate $\eta_{\Sigma} = \eta_{\mathbf{A}}$ . This term is closely connected to the exponential parameterization of the natural coordinates in xNES, while CMA-ES is formulated in global linear coordinates. The connection of these updates can be shown either by applying the xNES update directly to the natural coordinates without the exponential parameterization, or by approximating the exponential map by its first order Taylor expansion. (Akimoto et al., 2010) established the same connection directly in coordinates based on the Cholesky decomposition of $\Sigma$ , see (Sun et al., 2009a,b). The arguably simplest derivation of the equivalence relies on the invariance of the natural gradient under coordinate transformations, which allows us to perform the computation, w.l.o.g., in natural coordinates. We use the first order Taylor approximation of exp to obtain

$$\exp\left(\eta_{\Sigma} \cdot \sum_{k=1}^{\lambda} u_k \left(\mathbf{s}_k \mathbf{s}_k^{\top} - \mathbb{I}\right)\right) \approx \mathbb{I} + \eta_{\Sigma} \cdot \sum_{k=1}^{\lambda} u_k \left(\mathbf{s}_k \mathbf{s}_k^{\top} - \mathbb{I}\right) ,$$

so the first order approximate update yields

$$\mathbf{\Sigma}'_{new} = \mathbf{A}^{\top} \cdot \left( \mathbb{I} + \eta_{\mathbf{\Sigma}} \cdot \sum_{k=1}^{\lambda} u_k \left( \mathbf{s}_k \mathbf{s}_k^{\top} - \mathbb{I} \right) \right) \cdot \mathbf{A}$$

$$= (1 - U \cdot \eta_{\mathbf{\Sigma}}) \cdot \mathbf{A}^{\top} \mathbf{A} + \eta_{\mathbf{\Sigma}} \cdot \sum_{k=1}^{\lambda} u_k \left( \mathbf{A}^{\top} \mathbf{s}_k \right) \left( \mathbf{A}^{\top} \mathbf{s}_k \right)^{\top}$$

$$= (1 - U \cdot \eta_{\mathbf{\Sigma}}) \cdot \mathbf{\Sigma} + \eta_{\mathbf{\Sigma}} \cdot \sum_{k=1}^{\lambda} u_k \left( \mathbf{z}_k - \boldsymbol{\mu} \right) \left( \mathbf{z}_k - \boldsymbol{\mu} \right)^{\top}$$

with $U = \sum_{k=1}^{\lambda} u_k$ , from which the connection to the CMA-ES rank- $\mu$ -update is obvious (see Hansen and Ostermeier, 2001).

Finally, the updates of the global step size parameter $\sigma$ turn out to be identical in xNES and CMA-ES without evolution paths.

Having highlighted the similarities, let us have a closer look at the differences between xNES and CMA-ES, which are mostly two aspects. CMA-ES uses the well-established technique of evolution paths to smoothen out random effects over multiple generations. This technique is particularly valuable when working with minimal population sizes, which is the default for both algorithms. Thus, evolution paths are expected to improve stability; further interpretations have been provided by (Hansen and Ostermeier, 2001). However, the presence of evolution paths has the conceptual disadvantage that the state of the CMA-ES algorithms is not completely described by its search distribution. The other difference between xNES and CMA-ES is the exponential parameterization of the updates in xNES,

which results in a multiplicative update equation for the covariance matrix, in contrast to the additive update of CMA-ES. We argue that just like for the global step size $\sigma$ , the multiplicative update of the covariance matrix is natural.

A valuable perspective offered by the natural gradient updates in xNES is the derivation of the updates of the center $\mu$ , the step size $\sigma$ , and the normalized transformation matrix B, all from the same principle of natural gradient ascent. In contrast, the updates applied in CMA-ES result from different heuristics for each parameter. Hence, it is even more surprising that the two algorithms are closely connected. This connection provides a post-hoc justification of the various heuristics employed by CMA-ES, and it highlights the consistency of the intuition behind these heuristics.

4.5 Elitism

The principle of the NES algorithm is to follow the natural gradient of expected fitness. This requires sampling the fitness gradient. Naturally, this amounts to what, within the realm of evolution strategies, is generally referred to as "comma-selection", that is, updating the search distribution based solely on the current batch of "offspring" samples, disregarding older samples such as the "parent" population. This seems to exclude approaches that retain some of the best samples, like elitism, hill-climbing, or even steady-state selection (Goldberg, 1989). In this section we show that elitism (and thus a wide variety of selection schemes) is indeed compatible with NES. We exemplify this technique by deriving a NES algorithm with (1+1) selection, i.e., a hill-climber (see also Glasmachers et al., 2010a).

It is impossible to estimate any information about the fitness gradient from a single sample, since at least two samples are required to estimate even a finite difference. The (1+1) selection scheme indicates that this dilemma can be resolved by considering two distinguished samples, namely the elitist or parent $\mathbf{z}^{\text{parent}} = \boldsymbol{\mu}$ , and the offspring $\mathbf{z}$ . Considering these two samples in the update is in principle sufficient for estimating the fitness gradient w.r.t. the parameters $\theta$ .

Care needs to be taken for setting the algorithm parameters, such as learning rates and utility values. The extremely small population size of one indicates that learning rates should generally be small in order to ensure stability of the algorithm. Another guideline is the well known observation (Rechenberg and Eigen, 1973) that a step size resulting in a success rate of roughly 1/5 maximizes progress. This indicates that a self-adaptation strategy should increase the learning rate in case of too many successes, and decrease it when observing too few successes.

Let us consider the basic case of radial Gaussian search distributions

$$\pi(\mathbf{z} \mid \boldsymbol{\mu}, \sigma) = \frac{1}{\sqrt{2\pi}\sigma} \exp\left(\frac{\|\mathbf{z} - \boldsymbol{\mu}\|^2}{2\sigma^2}\right)$$

with parameters $\boldsymbol{\mu} \in \mathbb{R}^d$ and $\sigma > 0$ . We encode these parameters as $\theta = (\boldsymbol{\mu}, \ell)$ with $\ell = \log(\sigma)$ . Let $\mathbf{s} \sim \mathcal{N}(0, 1)$ be a standard normally distributed vector, then we obtain the offspring as $\mathbf{z} = \boldsymbol{\mu} + \boldsymbol{\sigma} \cdot \mathbf{s} \sim \mathcal{N}(\boldsymbol{\mu}, \sigma)$ , and the natural gradient components are

$$\begin{split} \tilde{\nabla}_{\boldsymbol{\mu}} J &= u_1^{(\boldsymbol{\mu})} \cdot \mathbf{0} + u_2^{(\boldsymbol{\mu})} \cdot \boldsymbol{\sigma} \cdot \mathbf{s} \\ \tilde{\nabla}_{\ell} J &= u_1^{(\ell)} \cdot (-1) + u_2^{(\ell)} \cdot (\|\mathbf{s}\|^2 - 1). \end{split}$$

The corresponding strategy parameter updates read

$$\boldsymbol{\mu} \leftarrow \boldsymbol{\mu} + \eta_{\boldsymbol{\mu}} \cdot \left[ u_1^{(\boldsymbol{\mu})} \cdot \mathbf{0} + u_2^{(\boldsymbol{\mu})} \cdot \boldsymbol{\sigma} \cdot \mathbf{s} \right]$$ $$\boldsymbol{\sigma} \leftarrow \boldsymbol{\sigma} \cdot \exp \left( \eta_{\ell} \cdot \left[ u_1^{(\ell)} \cdot (-1) + u_2^{(\ell)} \cdot (\|\mathbf{s}\|^2 - 1) \right] \right).$$

The indices 1 and 2 of the utility values refer to the 'samples' $\mu$ and z, namely parent and offspring. Note that these samples correspond to the vectors $\bf 0$ and $\bf s$ in the above update equations (see also section 4.2). The superscripts of the utility values indicate the different parameters. Now elitist selection and the 1/5 rule dictate the settings of these utility values as follows:

- The elitist rule requires that the mean remains unchanged in case of no success $(u_1^{(\mu)} = 1 \text{ and } u_2^{(\mu)} = 0)$ , and that the new sample replaces the mean in case of success $(u_1^{(\mu)} = 0 \text{ and } u_2^{(\mu)} = 1)$ , with a learning rate of $\eta_{\mu} = 1$ ).

- Setting the utilities for $\ell$ to $u_1^{(\ell)} = 1$ and $u_2^{(\ell)} = 0$ in case of no success, effectively reduces the learning rate. Setting $u_1^{(\ell)} = -5$ and $u_2^{(\ell)} = 0$ in case of success has the opposite effect and roughly implements the 1/5-rule. The self-adaptation process can be stabilized with a small learning rate $\eta_{\ell}$ .

Note that we change the utility values based on success or failure of the offspring. This seems natural, since the utility of information encoded in the sample $\mathbf{z}$ depends on its success. Highlighting elitism in the selection, we call these utility values success-based. This is similar but not equivalent to rank-based utilities for the joint population $\{\mu, \mathbf{z}\}$ .

The NES hill-climber for radial Gaussian search distributions is illustrated in algorithm 6. This formulation offers a more standard perspective on the hill-climber by using explicit case distinctions for success and failure of the offspring instead of success-based utilities. The same procedure can be generalized to more flexible search distributions. A conservative strategy is to update further shape-related parameters only in case of success, which can be expressed by means of success-based utility values in the very same way. The corresponding algorithm for multi-variate Gaussian search distributions is Algorithm 5 (in section 4.2) and for multi-variate Cauchy it is Algorithm 8 (in section 5.3).

5. Beyond Multinormal Distributions

In the previous section we have seen how natural gradient ascent is applied to multi-variate normal distributions, which arguably constitute the most important class of search distributions in modern evolution strategies. In this section we expand the NES framework in breadth, motivating the usefulness and deriving a number of NES variants with different search distributions.

5.1 Separable NES

Adapting the full covariance matrix of the search distribution can be disadvantageous, particularly in high-dimensional search spaces, for two reasons.

Algorithm 6: (1+1)-NES with radial Gaussian distribution

\begin{aligned} & \textbf{input:} \ f, \ \boldsymbol{\mu}_{init}, \ \boldsymbol{\sigma}_{init} \\ & f_{best} \leftarrow f(\boldsymbol{\mu}_{init}) \\ & \textbf{repeat} \\ & \middle| & \text{draw sample } \mathbf{s} \sim \mathcal{N}(0,1) \\ & \text{create offspring } \mathbf{z} \leftarrow \boldsymbol{\mu} + \boldsymbol{\sigma} \cdot \mathbf{s} \\ & \text{evaluate the fitness } f(\mathbf{z}) \\ & \mathbf{if} \ f(\mathbf{z}) > f_{best} \ \mathbf{then} \\ & \middle| & \text{update mean } \boldsymbol{\mu} \leftarrow \mathbf{z} \\ & \middle| & \boldsymbol{\sigma} \leftarrow \boldsymbol{\sigma} \cdot \exp(5\eta_{\boldsymbol{\sigma}}) \\ & \middle| & f_{best} \leftarrow f(\mathbf{z}) \\ & \mathbf{else} \\ & \middle| & \boldsymbol{\sigma} \leftarrow \boldsymbol{\sigma} \cdot \exp(-\eta_{\boldsymbol{\sigma}}) \\ & \mathbf{end} \\ & \mathbf{until} \ stopping \ criterion \ is \ met; \end{aligned}

For many problems it can be safely assumed that the computational costs are governed by the number of fitness evaluations. This is particularly true if such evaluations rely on expensive simulations. However, for applications where fitness evaluations scale gracefully with the search space dimension, the $\mathcal{O}(d^3)$ xNES update (due to the matrix exponential) can dominate the computation. One such application is the evolutionary training of recurrent neural networks (i.e., neuroevolution), where the number of weights d in the network can grow quadratically with the number of neurons n, resulting in a complexity of $\mathcal{O}(n^6)$ for a single NES update.

A second reason not to adapt the full covariance matrix in high dimensional search spaces is sample efficiency. The covariance matrix has $d(d+1)/2 \in \mathcal{O}(d^2)$ degrees of freedom, which can be huge in large dimensions. Obtaining a stable estimate of this matrix based on samples may thus require many (costly) fitness evaluations, in turn requiring very small learning rates. As a result, the algorithm may simply not have enough time to adapt its search distribution to the problem with a given budget of fitness evaluations. In this case, it may be advantageous to restrict the class of search distributions in order to adapt at all, even if this results in a less steep learning curve in the (then practically irrelevant) limit of infinitely many fitness evaluations.

The only two distinguished parameter subsets of a multi-variate distribution that do not impose the choice of a particular coordinate system onto our search space are the 'size' $\sigma$ of the distribution, corresponding to the (2d)-th root of the determinant of the covariance matrix, and its orthogonal complement, the covariance matrix normalized to constant determinant $\mathbf{B}$ (see section 4.3). The first of these candidates results in a standard evolution strategy without covariance adaptation at all, which may indeed be a viable option in some applications, but is often too inflexible. The set of normalized covariance matrices, on the other hand, is not interesting because it is clear that the size of the distribution needs to be adjusted in order to ensure convergence to an optimum.

Thus, it has been proposed to give up some invariance properties of the search algorithm, and to adapt the class of search distribution with diagonal covariance matrices in some

predetermined coordinate system (Ros and Hansen, 2008). Such a choice is justified in many applications where a certain degree of independence can be assumed among the fitness function parameters. It has even been shown in (Ros and Hansen, 2008) that this approach can work surprisingly well even for highly non-separable fitness functions.

Restricting the class of search distributions to Gaussians with diagonal covariance matrix corresponds to restricting a general class of multi-variate search distributions to separable distributions

$$p(\mathbf{z} \mid \theta) = \prod_{i=1}^{d} \tilde{p}(\mathbf{z}_i \mid \theta_i) ,$$

where $\tilde{p}$ is a family of densities on the reals, and $\theta = (\theta_1, \dots, \theta_d)$ collects the parameters of all of these distributions. In most cases these parameters amount to $\theta_i = (\boldsymbol{\mu}_i, \boldsymbol{\sigma}_i)$ , where $\boldsymbol{\mu}_i \in \mathbb{R}$ is a position and $\boldsymbol{\sigma}_i \in \mathbb{R}^+$ is a scale parameter (i.e., mean and standard deviation, if they exist), such that $\mathbf{z}_i = \boldsymbol{\mu}_i + \boldsymbol{\sigma}_i \cdot \mathbf{s}_i \sim \tilde{p}(\cdot \mid \boldsymbol{\mu}_i, \boldsymbol{\sigma}_i)$ for $\mathbf{s}_i \sim \tilde{p}(\cdot \mid 0, 1)$ .

Algorithm 7: Separable NES (SNES)

input: f, \mu_{init}, \sigma_{init}

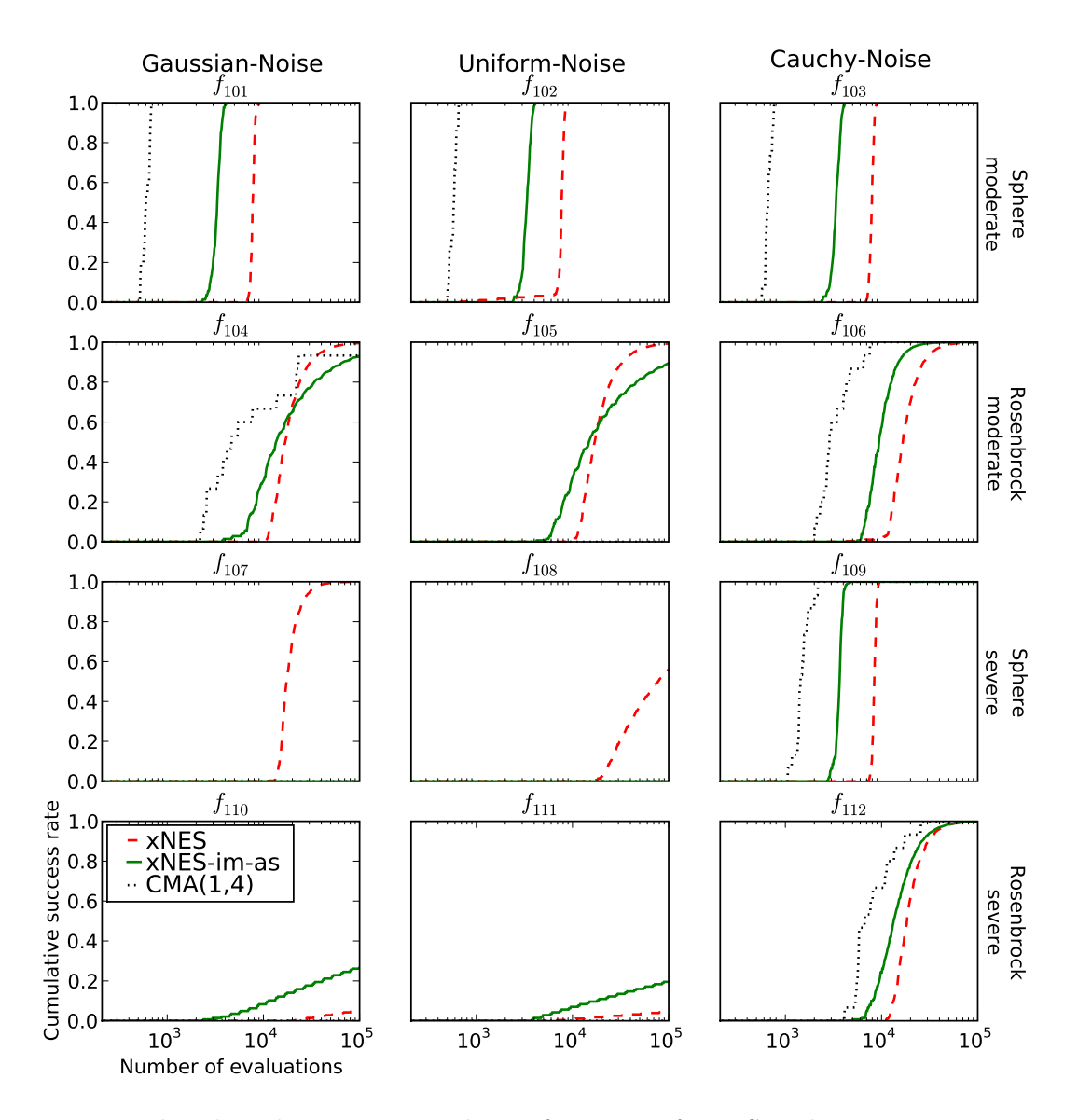

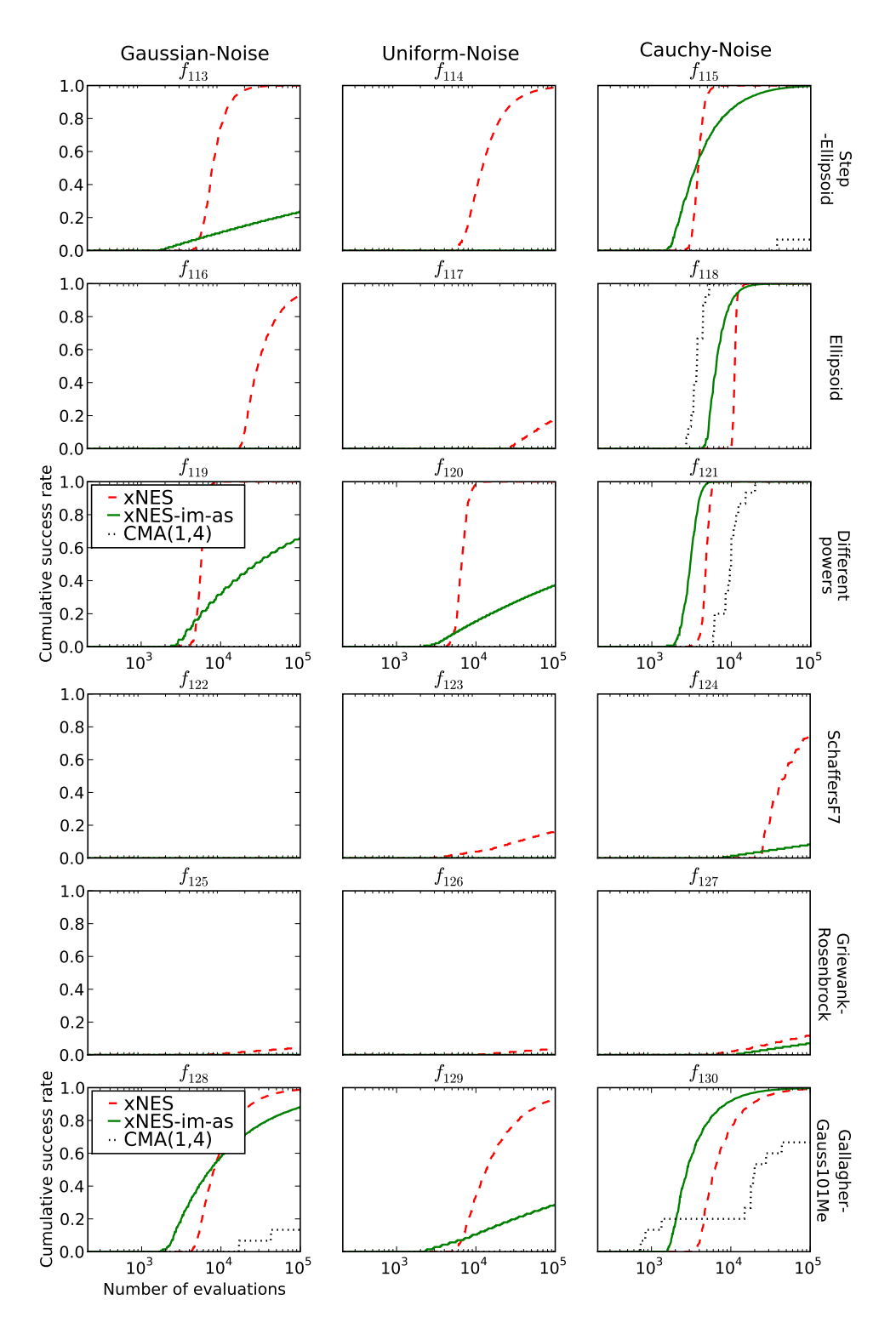

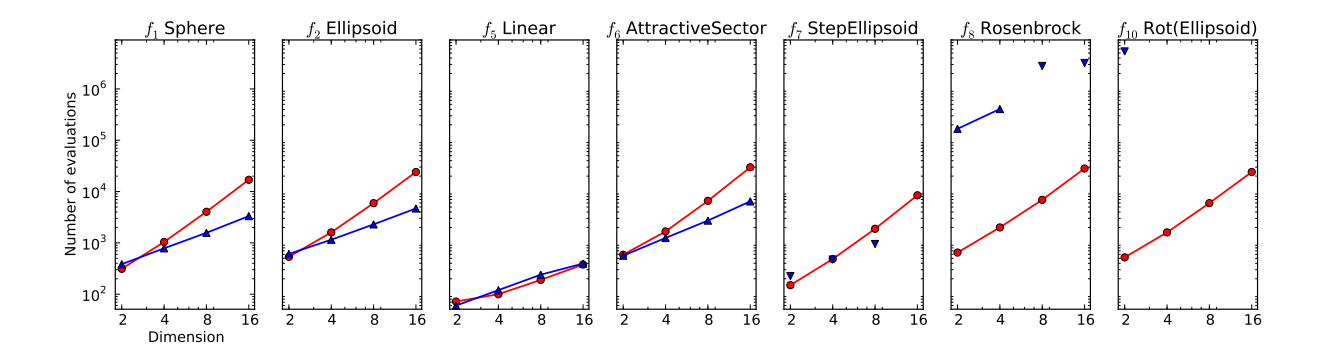

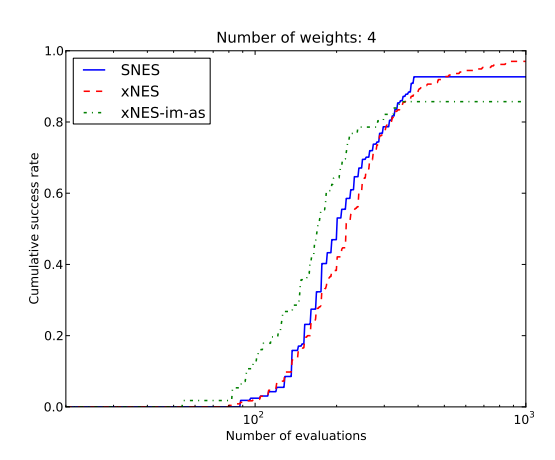

repeat